
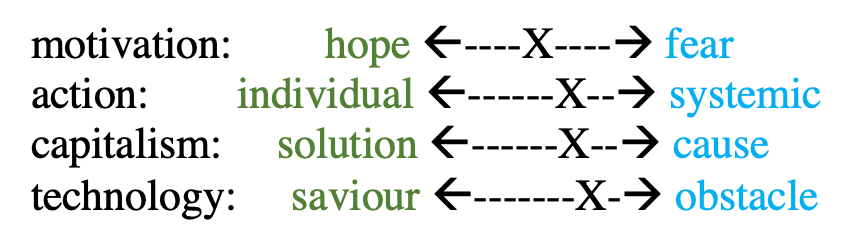

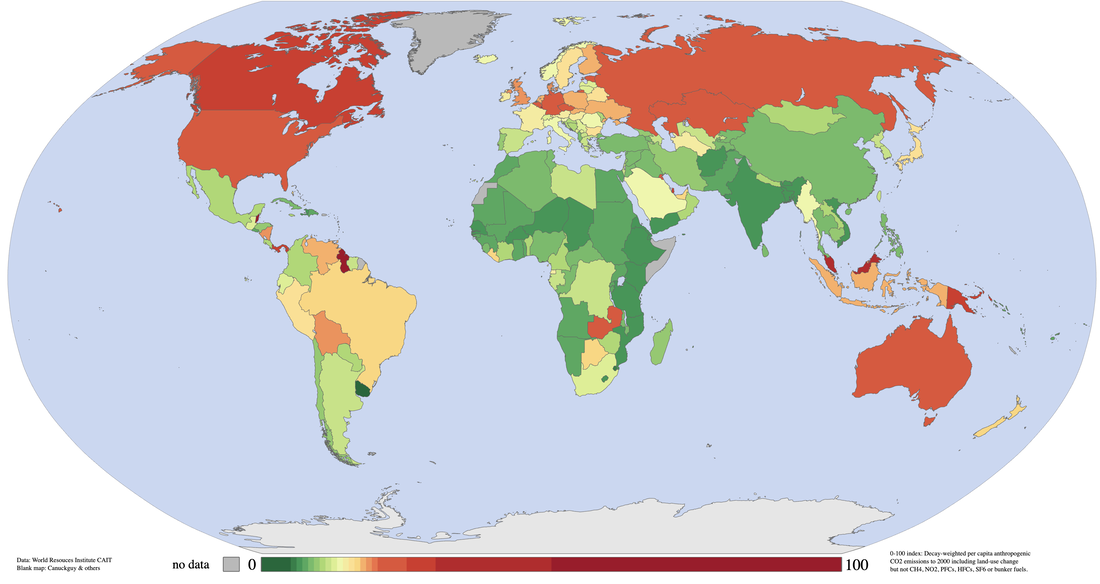

This is the third post in the series Historians Confront the Climate Emergency, hosted by ActiveHistory.ca, NiCHE (Network in Canadian History & Environment), Historical Climatology, and Climate History Network.

Dr. Daniel Macfarlane, Western Michigan University

We’re in a climate emergency. This isn’t just rhetorical hyperbole, but a statement backed by more than 13,000 scientists. Even the venerable publication Scientific American agreed to adopt the term earlier this year. Canada is particularly culpable for this crisis because of its petro-state status and hyper-consumerism.

My research deals with the transborder history and politics of Canada-U.S. water and energy issues, lately involving climate change. But it is in my teaching role that I spend the most time addressing the climate emergency since I’m in an environmental and sustainability studies department (which has a climate change minor). This includes an introductory course that features a major climate change component, as well as senior courses such as the seminar I’m teaching this fall that concentrates on my campus’s carbon emissions.

True, I have the advantage of teaching in an environment-focused setting that looks as much at the present and the future as the past. But all historians, regardless of experience in environmental history or history of science, can bring the climate emergency into their classroom. What I would like to do in this post is to suggest some areas, based on my teaching experiences and reading of recent literature, where climate change could be injected into Canadian history survey courses.

During the pandemic, I’ve read a wide range of new popular books about the climate crisis – from the technocratic solutions of Bill Gates to the Green New Deal advocacy of Kate Aronoff. Within those public-facing books, I noticed four key debates – or spectrums since they don’t have to be either-or questions – about tackling climate.
These are illustrated below, with the ‘X’ marking where I land within each. I could write a whole post about why I fall where I do. But that isn’t the point here. Instead, I’m going to offer strategies for infusing lectures, discussions, and assignments with aspects of those four debates.

Probably the most obvious way to do that is to enhance the emphasis on natural resources. Climate change, like our other big ecological problems, is tightly linked to how we use and consume resources. The most relevant type of resource when it comes to greenhouse gases is energy. Canadians have long been amongst the most profligate people in the history of the globe in terms of energy consumption (only some of which can be explained by Canada’s widely dispersed population and cold climate). Canada has also been a global production leader in several different energy forms, with hydroelectricity and oil likely the best known (though we could count uranium as well because of its role in nuclear power).

To incorporate energy history, university instructors could go several routes, such as peppering it into lectures throughout the term or creating an entire section or module. It just so happens that the major energy eras and transitions line up pretty well with major periods of Canadian history extending deep back into the premodern period: animal/human muscle, wood, coal, hydro, fossil fuels, etc. Truthfully, energy could be the organizing principle for an entire survey course (and this book would make a great text).

The development of energy is intrinsically tied to common classroom themes in Canadian political history: federalism (disputes over provincial rights to harvest hydropower and fossil fuels); regional identity (Ontario Hydro, Hydro-Québec, and BC Hydro; the prairies and oil; coal on the east coast; etc.); Canada-U.S. relations (electricity, fossil fuel, and uranium exports).
Many major topics that normally come up anyway, from the railways to free trade, are inherently about energy and environment, providing segues to wrestle with climate change and Canada’s contribution and response to it.

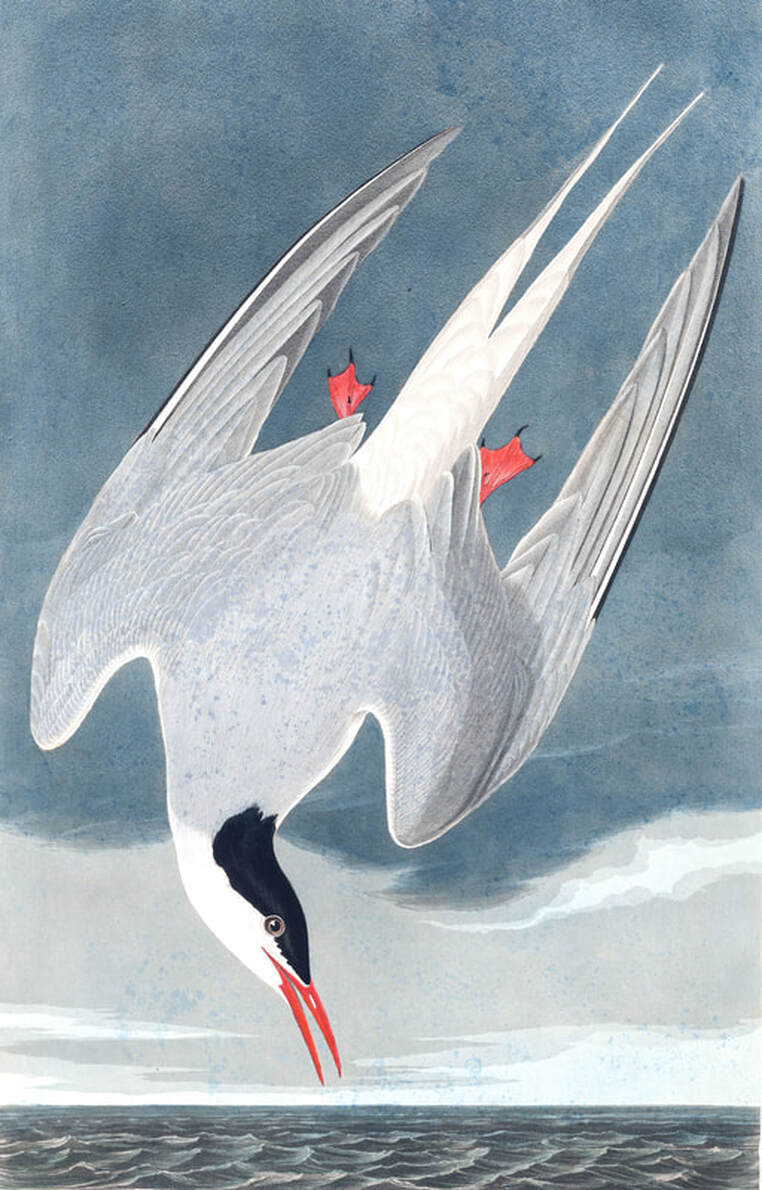

Hydroelectric dams, fossil fuels, and pipelines have long wreaked havoc on First Nations communities. The history of energy developments therefore also dovetails with Canada’s history of settler and extractive colonialism. And discussions about reconciliation can relate to contemporary calls for a just transition away from fossil fuels. Tying in with the systemic vs. individual debate, other aspects of the history of social movements and human rights in Canada could be an entrée for grappling with climate change. Sticking with the systemic angle, another existing theme in the Canadian curriculum where climate change could clearly piggyback is the role of the state. The history of capitalism is yet another prevalent theme that can be merged with exploring climate change. The tension between government regulation and intervention versus the free market is already apparent in Canadian history and lends itself to talking about capitalism as the solution or the cause. For those addressing the history of technology, Canada has been at the forefront of a number of technological changes dealing with the extraction and burning of energy, from the world’s biggest hydropower stations a century ago to the tar sands today. Technology is why we have cheap energy. Cheap energy is a sine qua non of “modern” Canadian lifestyles, the reason why consumer products are (relative to their true cost) inexpensive and available. And cheap energy is a major reason, maybe the main reason, we have climate change. The extraction and production of energy – e.g., coal miners and oil roughnecks – connects with important themes in labour and class history, while the ways energy is consumed – such as household work or petro-masculinity – can be combined with themes in social and gender history. And how has cheap energy and the attendant assumptions of abundance practically and conceptually shaped modern Canadian society and Canadian identity? 
Students are frequently interested in studying the differences between Canada and the United States. Canada’s social safety net and collectivism are often held up as distinctive from American individualism and privatization. But that may be the narcissism of small differences relative to the historic exploitation of natural resources, per capita levels of consumption, and greenhouse gas emissions; on those scores, to the rest of the globe the U.S. and Canada look pretty identical. When it comes time to teach about Canadian international history, for all the claims about Canada’s middle power or peacekeeper proclivities, it is in the realm of fossil fuels and climate change that Canada might actually be a superpower (but the bad kind).

Another pedagogical approach is to look at past adaptations to a changing climate. That is, the climate has shifted in the past, and peoples living in the territory now called Canada had to figure out ways to cope. If you’re teaching the pre-Confederation survey, the Little Ice Age (roughly the 16th to the 18th centuries) caused changes to food acquisition and agriculture strategies, which in turn had social, political, and military knock-on effects. Resilience emerges as a theme to interlink cultural responses to climate change in the past and present. For those doing post-Confederation history, many of the climatic shifts we currently recognize were already occurring by the Second World War. The start of the Cold War is one of the candidates for dating the beginning of the Anthropocene, which could serve as an organizing topic or concept (this series will have more on the Anthropocene in future posts).

What about some specific class exercises and assignments? Try integrating historical sources as indirect proxies for comparing climate from past to present. You might have heard of using ice cores, sediments, or tree rings to infer historic climatic conditions.
But the types of records historians are likely more familiar with, such as paintings, diaries, ship or whaling log books, and fur trade fort journals, can also be revealing and double as a unique way to introduce undergrads to the use of primary sources. (A contributor to this series, Dagomar Degroot, has written extensively about the use of various textual records for climate history). Artistic representations can show glacial retreat or pollution levels, for example. Or, given the prominence of the fur trade in Canada’s history, using fort journals to compare past temperatures and change of seasons with the present could be an instructive way to expose students to both climate history and primary sources. There are places online where some of those types of information are already organized for easy integration into your curriculum. For the pre-Confederation period, check out Canada’s Year Without a Summer. The titular year in question is 1816; the Tambora volcano erupted the previous year in Indonesia, leading to subsequent cold weather in early eastern Canada. This website was created by Alan MacEachern and Michael O’Hagan with course instructors in mind: it offers more than 120 sources, as well as guidance for how teachers could incorporate these into class. For the post-Confederation period, try Environment Canada’s National Climate Data and Information Archive which has (digital) reams of historical climate data. A professor could create an exercise or assignment, for example, in which students pick a location – such as their hometown or their university – and compare past and present weather/climate conditions. This is not an exhaustive list of ideas, but some easy ways to get started. Given the reality and urgency of the climate crisis, and its relevance to the lives and concerns of students, it is something all introductory history courses need to address. 
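
For instructors who want to try the location-comparison exercise described above with a class, the arithmetic can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: the function names (`period_mean`, `compare_periods`) are hypothetical, the sample values are invented placeholders rather than real observations, and students would substitute figures they have looked up themselves in the historical climate archive for their chosen location.

```python
from statistics import mean

# Hypothetical placeholder records: (year, mean January temperature in °C).
# These numbers are invented for illustration and are NOT real observations;
# students would replace them with values from the archive they consult.
SAMPLE_RECORDS = [
    (1901, -10.0), (1902, -12.0),
    (2001, -8.0), (2002, -6.0),
]


def period_mean(records, start_year, end_year):
    """Average the temperatures recorded between two years (inclusive)."""
    values = [temp for year, temp in records if start_year <= year <= end_year]
    if not values:
        raise ValueError(f"no records between {start_year} and {end_year}")
    return mean(values)


def compare_periods(records, past, present):
    """Return the present-period mean minus the past-period mean."""
    return period_mean(records, *present) - period_mean(records, *past)


if __name__ == "__main__":
    change = compare_periods(SAMPLE_RECORDS, (1901, 1902), (2001, 2002))
    print(f"Change in mean temperature: {change:+.1f} °C")
```

A comparison of two short periods says little about climate on its own, so the exercise works best when students choose multi-decade windows and discuss what the difference can and cannot show.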
Daniel Macfarlane is an Associate Professor in the Institute of the Environment and Sustainability at Western Michigan University, co-editor of The Otter-La loutre, and a member of the executive board of NiCHE, Network in Canadian History & Environment.


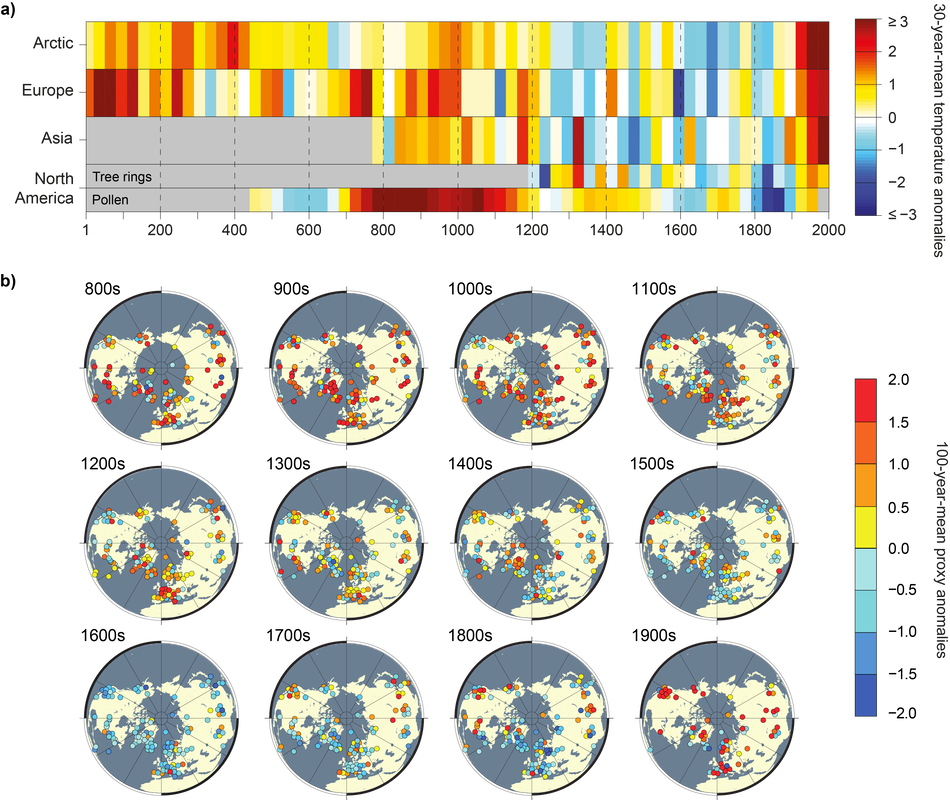

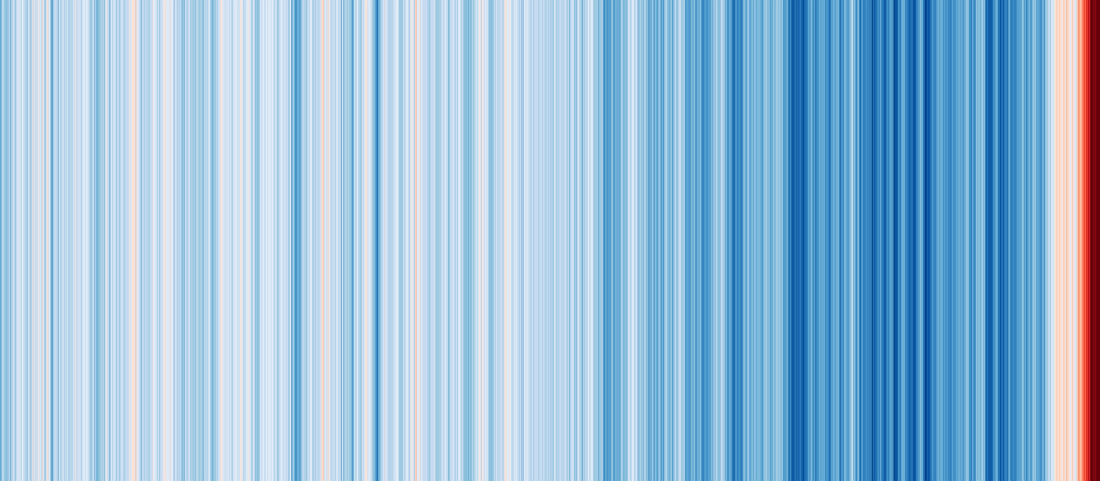

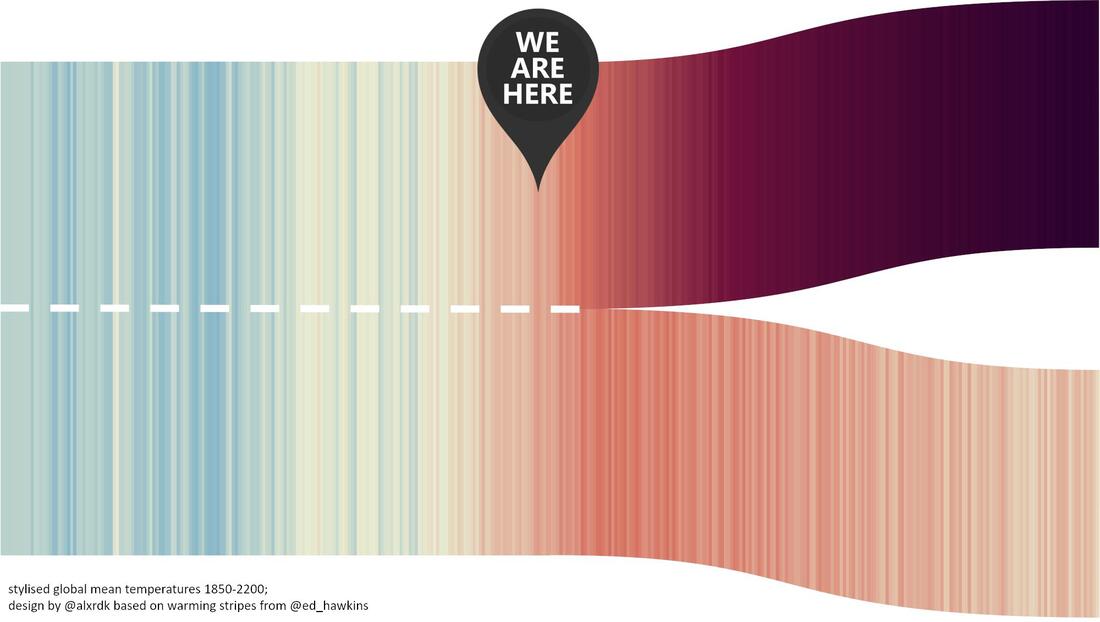

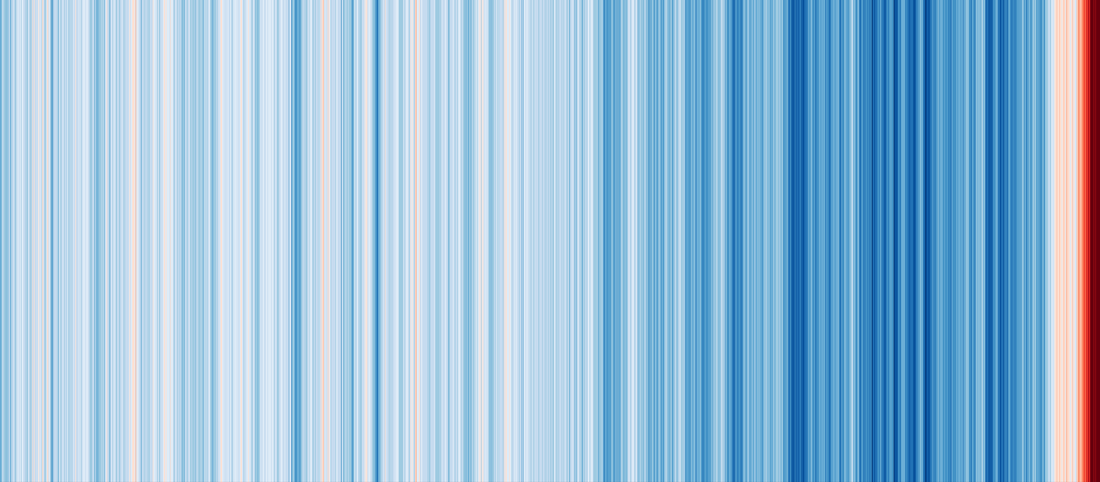

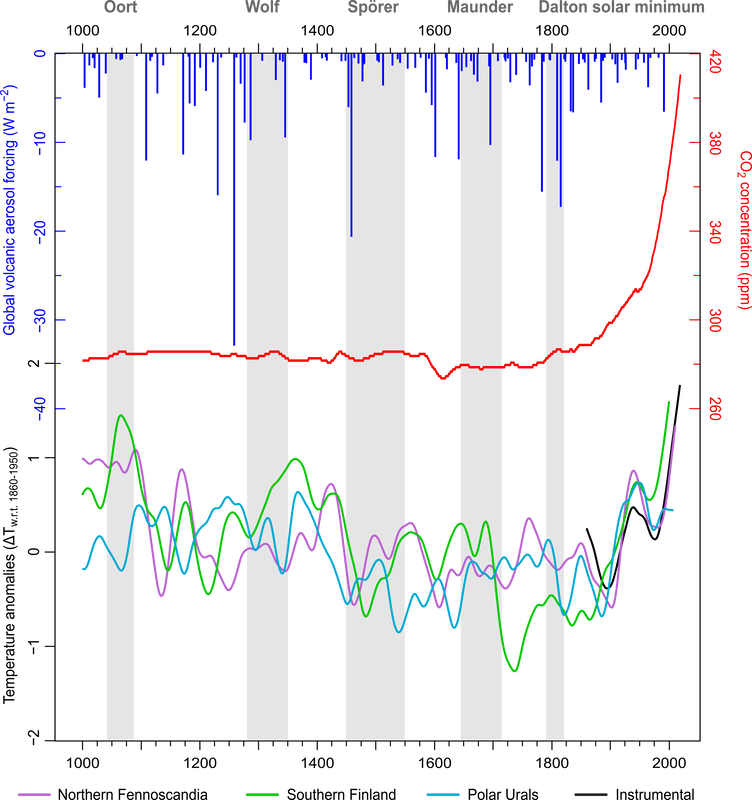

This is the second post in the series Historians Confront the Climate Emergency, hosted by ActiveHistory.ca, NiCHE (Network in Canadian History & Environment), Historical Climatology, and Climate History Network.

Dr. Dagomar Degroot, Georgetown University

Historians have always concerned themselves as much with the present as the past. Some do so explicitly, their work guided by a conscious desire to provide context for a matter of present concern. Others do so implicitly. They may study the past because it makes them curious, but that curiosity is inevitably shaped by their day-to-day lives. One way or another, history as a discipline is the outcome of the history historians must live through.

Today no challenge seems more daunting than the climate crisis. Earth’s average temperature has warmed by over one degree Celsius since the nineteenth century, and it is likely – though not inevitable – that much more warming is on its way. Global temperature changes of this magnitude, with this speed, profoundly alter both the local likelihood and severity of extreme weather. Human-caused heating will reverberate through the Earth for millennia – by slowly melting ice sheets and raising sea levels, for example. Our lives and livelihoods will be – in many cases, are already – shaped by this crisis.

No surprise, then, that ever more historians now think urgently and seriously about the implications of climate change for their scholarship. Forecasts of the warmer future are still dominated by economics and climate science, but few now deny that scholars of the past – including historians – can offer unique perspectives on how we entered this crisis, where it might be taking us, and how we can avoid its greatest dangers.

As this series reveals, historians grapple with climate change in increasingly diverse ways, and here it is again useful to draw a distinction between implicit and explicit approaches.
In a sense, just about every kind of history has relevance to the present crisis, because climate affects every aspect of the human experience. Climate change alters the environments that sustain and shape our lives, shifting the basic conditions that channel our actions and thoughts. The climate crisis is therefore as much about the transformation of the planet as it is about the reshaping of human relationships. Any history that deals with those relationships – all history, by definition – implicitly tells us something about climate change and its social consequences. If you are a historian, your work is about global warming.

The editors of this series, however, asked me to describe how scholars of the past explicitly engage with the climate crisis. Arguably, the most influential and numerous publications have originated in both the history of science and the related fields of climate history and historical climatology. A quick and necessarily incomplete overview of just these two kinds of scholarship reveals the value and diversity of historical approaches to climate change.

Historians of science initially concentrated on how global warming was discovered and understood by scientists with increasing certainty. While it is simplistic to conclude that scientists “knew” about global warming for many decades, or even over a century – as is often stated in popular media and occasionally in scholarship – historians of science nevertheless revealed the deep roots of today’s scientific understanding of global warming. Led by Naomi Oreskes, they also found that serious debate among climate scientists over the existence of human-caused global warming ended in the 1990s, or perhaps even earlier. Although a majority of the public doesn’t know it, just about every climate scientist understands that humans are today responsible for the rapid heating of the planet.
Confirming the scientific consensus on global warming led some historians of science to argue that fossil fuel companies and far-right scientists cynically promoted climate denial in order to serve their regressive political or economic interests. This was – and remains – perhaps the most influential and politically potent argument proposed by historians about the climate crisis. Climate scientists, in this narrative, bravely announced their inconvenient truths but could not overcome the falsehoods propagated by their more media-savvy antagonists. Nevertheless, other historians soon pointed out that climate science has long involved more than the apolitical discovery and communication of truth. Historians of science revealed for example how models and simulations came to dominate climate science – rather than other ways of knowing – and argued that climate scientists themselves failed to choose communications strategies that could mobilize grassroots, local action. Historians have long traced the emergence of ideas about climate from antiquity through the present. Recently, historians of science have revealed that the climate ideas most view as characteristically modern in fact have deep roots. They have shown, for example, that legends of ancient climate changes caused by human sin or shortsightedness helped influence the development of early modern science, and – with new practices in forestry and agriculture – contributed to the emergence of what we might now call sustainability thinking. Climate historians and historical climatologists take an entirely different approach to the climate crisis. Historians active in historical climatology search for human records – usually documents – that either describe past weather or activities that must have been strongly influenced by weather. By finding enough of these records – by scouring what they call the “archives of society” – historical climatologists uncover how climate changed over decades or even centuries. 
Working with those who uncover evidence for past weather in the “archives of nature” – tree rings, ice cores, or lakebed sediments, for example – they can develop remarkably precise “reconstructions” that reveal the existence of substantial climatic fluctuations even before the onset of today’s extreme warming. The antecedents of this work date back to the nineteenth century, but it was really only in the 1970s that Christian Pfister and other pioneers proposed ranking qualitative accounts of weather on simple ordinal scales. These scales allowed for the closer integration of the archives of society with the archives of nature, especially in Europe, but historical climatologists studying different parts of the world continued to use distinct methods for quantifying historical evidence. Only now are they working towards developing a common approach. In any case, reconstructions permit histories of human responses to past climate changes. While these climate changes have been relatively modest in global scale – the Little Ice Age, the most studied period of preindustrial climate change, only cooled the Earth by several tenths of a degree Celsius between the fifteenth and eighteenth centuries – some were nevertheless sufficient to dramatically alter local environments. Archaeologists, economists, geneticists, geographers, linguists, literary scholars, and paleoscientists have all used distinct methods and sources to uncover how these alterations shaped the history of human populations. Yet few disciplines have contributed more to this scholarship – recently coined the “History of Climate and Society” – than history. Historians have in recent years increasingly avoided making simple connections between climate changes, harvest failures, and demographic disasters: a chain of events that scholars of past climate have long emphasized. 
New climate histories instead uncover wellsprings of both vulnerability and resilience within communities, and they increasingly consider the full range of possible relationships between climate change and human history across the entire world and into the twentieth century.

Together, historians of science and climate historians have done much more than add a little climate to historians’ understanding of the past. Histories of climate science help uncover why governments and corporations have not responded quickly or adequately to the climate crisis. They also reveal how the crisis might be communicated more effectively, or how more diverse ways of knowing could be incorporated within climate science. They show us why we should nevertheless believe the forecasts of climate science – and how we can best act on that belief.

Historical climatology helps reveal the baselines against which human emissions are changing Earth’s climate today, and helps uncover the likely response of local environments to global warming. Climate historians can suggest what strategies may succeed or fail when climates change, and add complexity to forecasts of the future that too often assume simple social responses to shifting environmental conditions. Many tell stories about the influence of climate on human affairs that capture public attention more vividly than frightening statistics ever could.

Yet both climate history and historical climatology also demonstrate the fundamental discontinuity between climates present and past. The speed, magnitude, and cause of present-day warming simply have no parallel in the history of human civilization. History is a guide to climate action, but it also warns us that to a large extent we are in uncharted waters.

Ten years ago, when I started this website, my message was something like: believe me, history helps us understand global warming!
I could not have imagined that, in just ten years, climate change would be widely recognized as a serious subject for historical study – or that historians such as Ruth Morgan would earn a seat in a working group of the Intergovernmental Panel on Climate Change. In some ways, the sea change in the historical discipline has mirrored a broader transformation in social attitudes towards climate change: a transformation driven in part by the courage of young activists, but also by the growing severity of the climate crisis. Climate scholarship in history is now more numerous and more diverse – in its topics and authorship – than it ever has been, a shift that also echoes developments in climate activism.

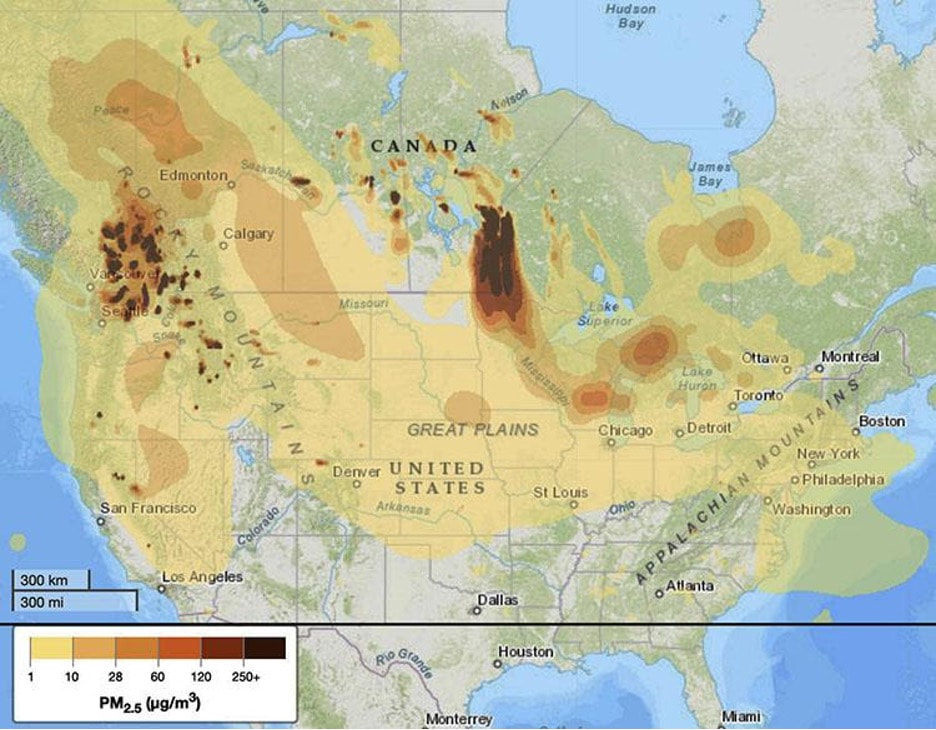

I expect these trends to continue. When future historians write about our time, you can be sure that climate change will play a central role in the story. As we live through that history, our scholarship will be climate scholarship – whether we see it that way or not.

This is the introductory post to the series, “Historians Confront the Climate Emergency,” hosted by ActiveHistory.ca, NiCHE (Network in Canadian History & Environment), Historical Climatology and Climate History Network.

Dr. Edward Dunsworth and Dr. Daniel Macfarlane

What a summer. In late June, a “heat dome” stalked the Pacific regions of Canada and the United States, pushing thermometers close to the 50-degree mark and causing the sudden death of 570 people in British Columbia alone. By July, hundreds of forest fires raged throughout the west coast and in the prairie regions of northern North America, their smoke billowing out across much of the rest of the continent. Parts of Turkey, Macedonia, Italy, Greece, and Tunisia were also devastated by forest fires (with Argentina hit during the southern hemisphere’s summer months earlier in the year). Deadly floods in China, Niger, Somalia, India, Germany, Brazil, and Guyana, killing hundreds and causing damage in the tens of billions of dollars. Drought in Iraq, Syria, Ethiopia, Iran, and Madagascar, driving millions towards food insecurity and starvation (400,000 people in Madagascar alone are at risk of starvation). Hurricanes, tropical storms, and cyclones battering the Caribbean and United States; Laos, the Philippines, and Indonesia; India and Bangladesh; Mozambique and Tanzania.[i]

In case the burning mountains, sinking villages, swimming subway trains, and starving masses weren’t evidence enough, the Intergovernmental Panel on Climate Change (IPCC) released yet another alarming report stressing the imperative urgency of reducing greenhouse gas emissions.
The 2015 Paris Agreement had laid out a goal of limiting global warming to well below 2, preferably to 1.5 degrees Celsius, compared to pre-industrial levels. But this newest IPCC report made it clear that those targets were by now almost unattainable, and devoted significant attention to the looming risk of “tipping points” – such as the melting of Arctic permafrost – that are likely to trigger cascading events and result in fundamental, and potentially irreversible, transformations to Earth systems. The media rolled out phrases like ‘code red’ or ‘emergency’ or ‘wake-up call’. “The alarm bells are deafening,” said the UN Secretary-General. And – oh yeah – a pandemic whose genesis was almost certainly linked to climate change continued to ravage the globe, claiming its four millionth victim and showing little signs of abating – certainly not among the vast majority of the planet’s people who have not yet had the privilege of vaccination. Meanwhile, amid this ever-growing list of ominous events, we – the authors of this post, but also many of you readers – carried out our work as historians: researching and writing books and articles, delivering conference papers, preparing syllabi, grading papers, processing evermore perplexing emails from university administrators, and all the rest of it. It all seemed a bit disjointed, a bit discordant, a bit surreal. Here was Ed, gazing up at a Montreal sky darkened by smoke from forest fires along the Manitoba-Ontario border, nearly 2,000 kilometres away, working away at revisions on a book about tobacco farm labour in 20th century Ontario. There was Dan, preparing a syllabus on carbon emissions and interviewing people about historical climate change impacts on Lake Ontario, taking a break to read the dire warnings of the IPCC about the very survival of our planet. We are in a climate emergency. But still the humdrum – and at times not so humdrum – beat of everyday life carries on. 
The dissonance of it all can prompt some big questions, even existential ones, for historians. What does it mean to be a historian on a planet on fire? What does it mean to study the human past when the human future is in dire peril? This series does not claim to answer these questions. Indeed, the answers to them will be worked out by all of us, in collective dialogue and debate, over the decades to come. Instead, we have more modest aims, but ones that we nonetheless hope will encourage scholars of the past – in whatever discipline they find themselves – to think in new ways about how the work of historians might speak to the totalizing crisis at whose precipice humanity now stands. For this ten-part series, we asked a wide-ranging and interdisciplinary roster of contributors to respond to the following animating questions: how should we historians and academics respond to the climate crisis? And how are we already doing so, in our research and our teaching? From its conception, a key aim of this series has been to bring scholars from fields other than environmental or climate history into the conversation about the climate emergency and how it relates to the work of historians. (Indeed, the very editorship of the series reflects this intention, with environmental historian Dan joining labour and migration historian Ed as co-editors). It struck us that since the climate emergency is not simply an environmental development, but also a social and political one, one that intersects with innumerable other processes – from colonialism to capitalism to migration to gender to racism to global inequality to diplomacy and so on – then it follows that historians from a diverse range of subfields should have lots to say about the crisis, leveraging their expertise towards a richer understanding of its origins, thereby enabling sounder approaches towards its resolution. In practice, however, we found this to be a more difficult task than anticipated. 
In putting together the series a trend quickly emerged in which historians in the usual-suspect subfields readily said “yes” to participating, while bringing in voices from other fields proved more challenging (though well worth the effort given the stellar contributors we ultimately lined up). Perhaps this was a fluke and no grand lessons should be gleaned from it. But perhaps it speaks to some of the barriers that exist to engaging with the climate emergency as historians. Incorporating a technical and science-heavy subject – and one that may seem more contemporary and future-oriented than historical – into writing or teaching can be intimidating for those who aren’t environmental or climate historians. With this in mind, we in part envision this series as aimed at those folks who want to engage with the climate crisis in their work as historians but may be unsure about where to start. We hope this series can provide some avenues for thinking about, and incorporating, the climate crisis into their work as teachers, researchers, and writers. The series will run over the next five weeks, with posts appearing on Tuesdays and Thursdays on both ActiveHistory.ca and NiCHE (and cross-posted on HistoricalClimatology.com and ClimateHistory.net). The contributions can be loosely grouped into two main categories: “Critical perspectives on the climate emergency” and “Climate in the classroom.” The series features contributors from a range of scholarly disciplines with a diverse array of research interests, who run the gamut from emerging scholars to renowned experts in their fields. In titling the series, “Historians Confront the Climate Emergency,” we are claiming an expansive definition of historian that has less to do with disciplinary boundaries and instead simply refers to anyone who studies the past; and more to the point, for our purposes as “active historians,” to anyone who studies the past with an eye to better understanding, and thus better informing, the present. 
Over the next five weeks, we are thrilled to share contributions from:

Stay tuned.

Daniel Macfarlane is an Associate Professor in the Institute of the Environment and Sustainability at Western Michigan University, co-editor of The Otter-La loutre, and a member of the executive board of NiCHE, Network in Canadian History & Environment.

Edward Dunsworth is an Assistant Professor in the Department of History and Classical Studies at McGill University and a member of the editorial committee at ActiveHistory.ca.

[i] Examples drawn from a number of news reports and from the impressive International Disaster Database, from the Centre for Research on the Epidemiology of Disasters at the Université catholique de Louvain, Belgium.

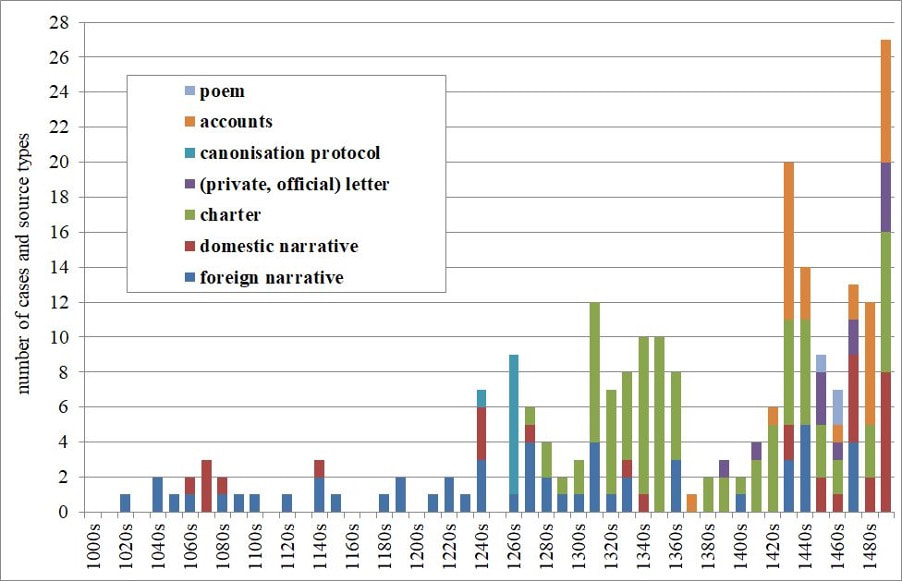

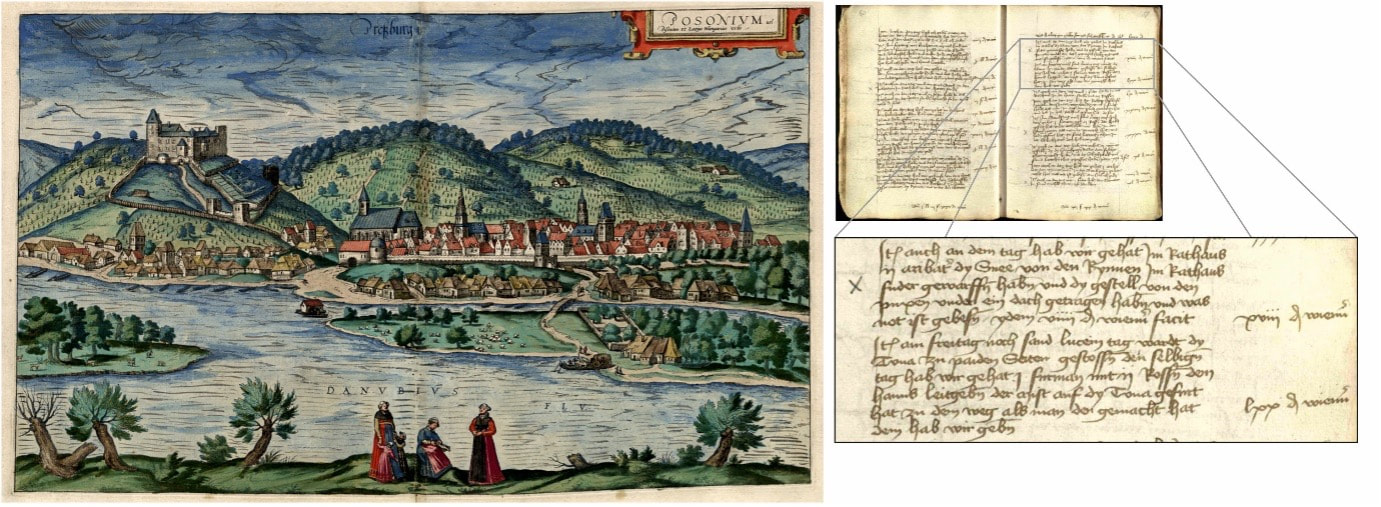

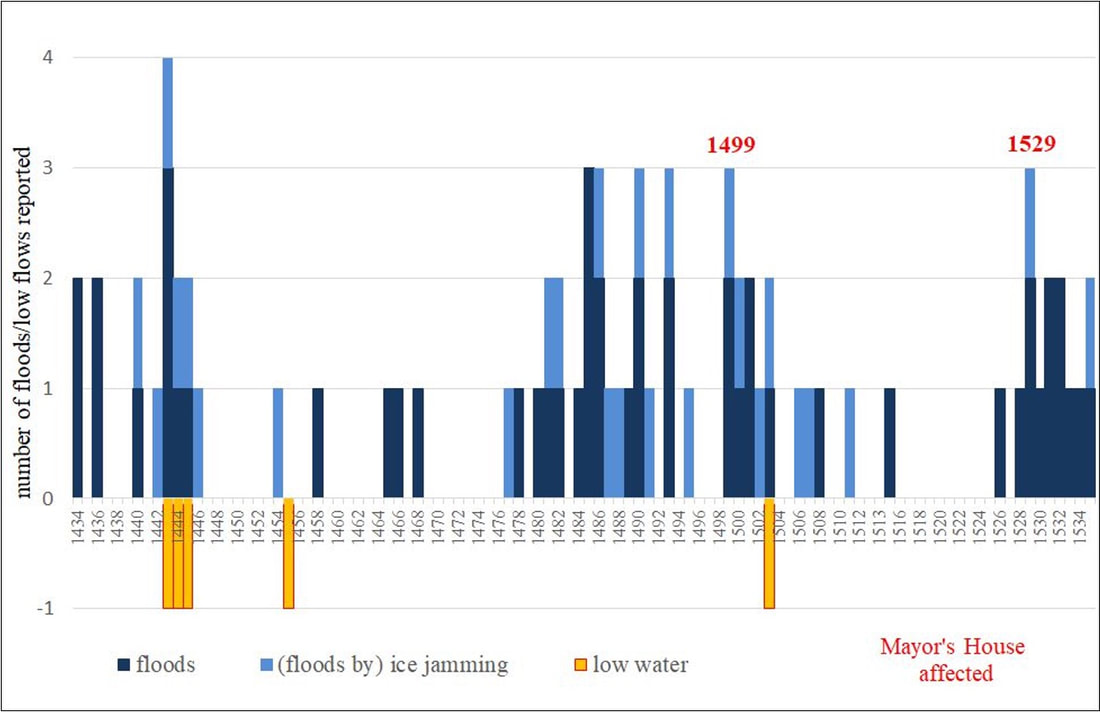

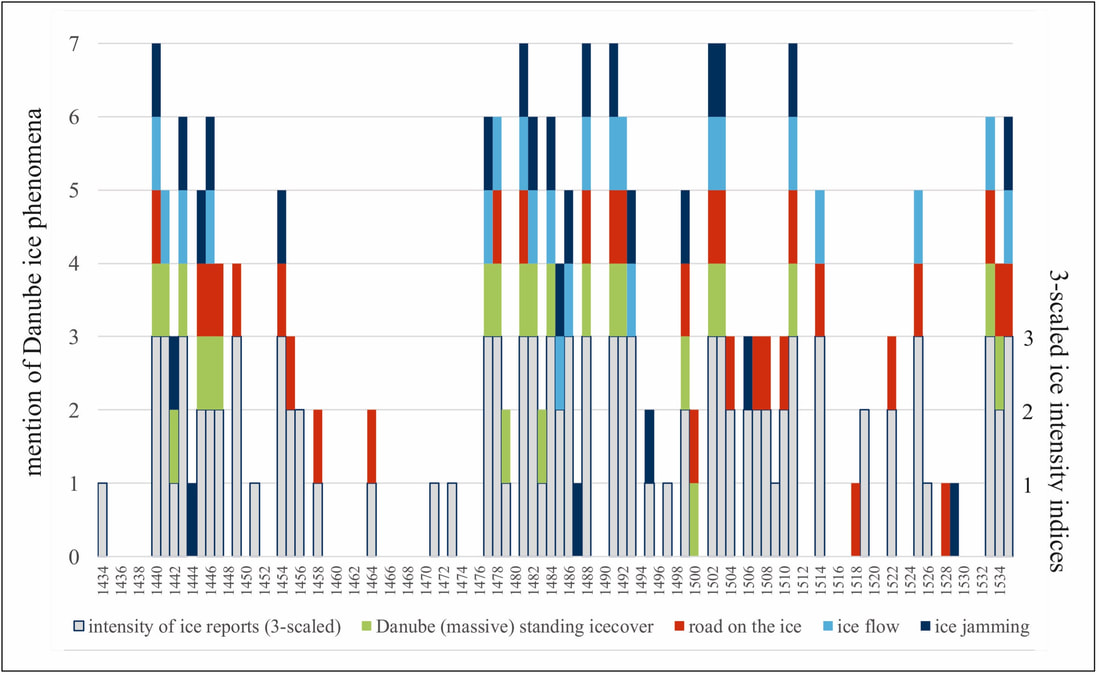

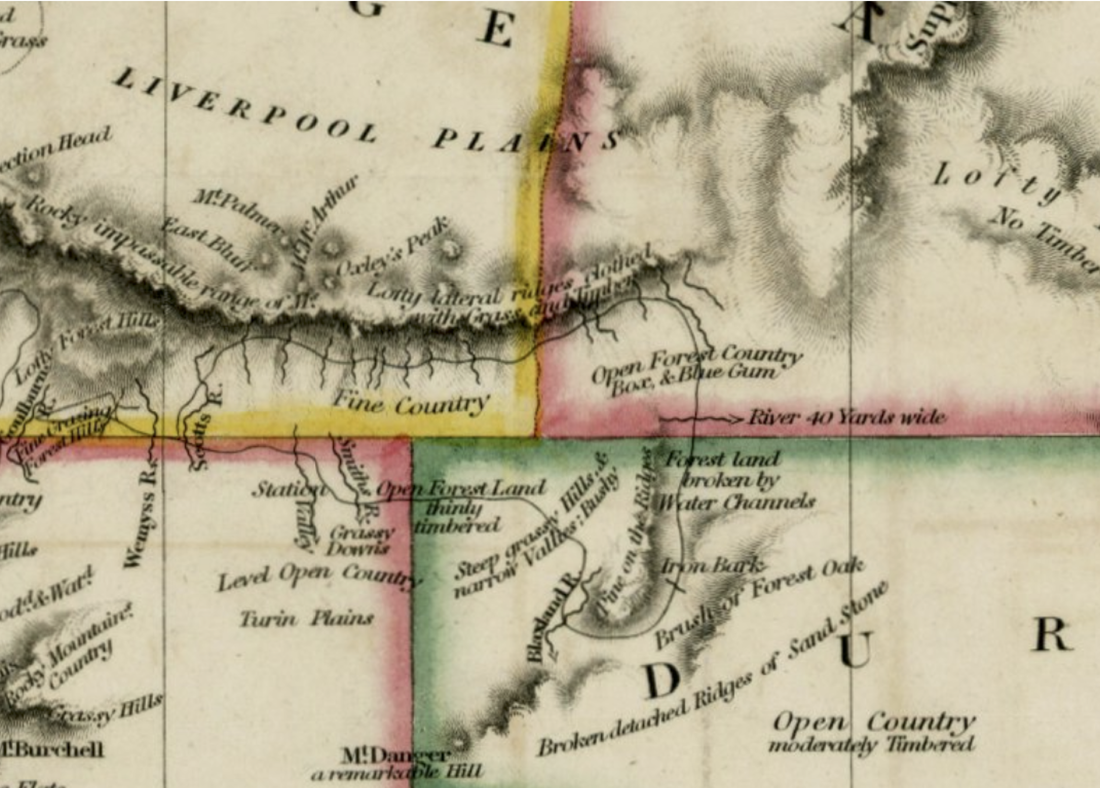

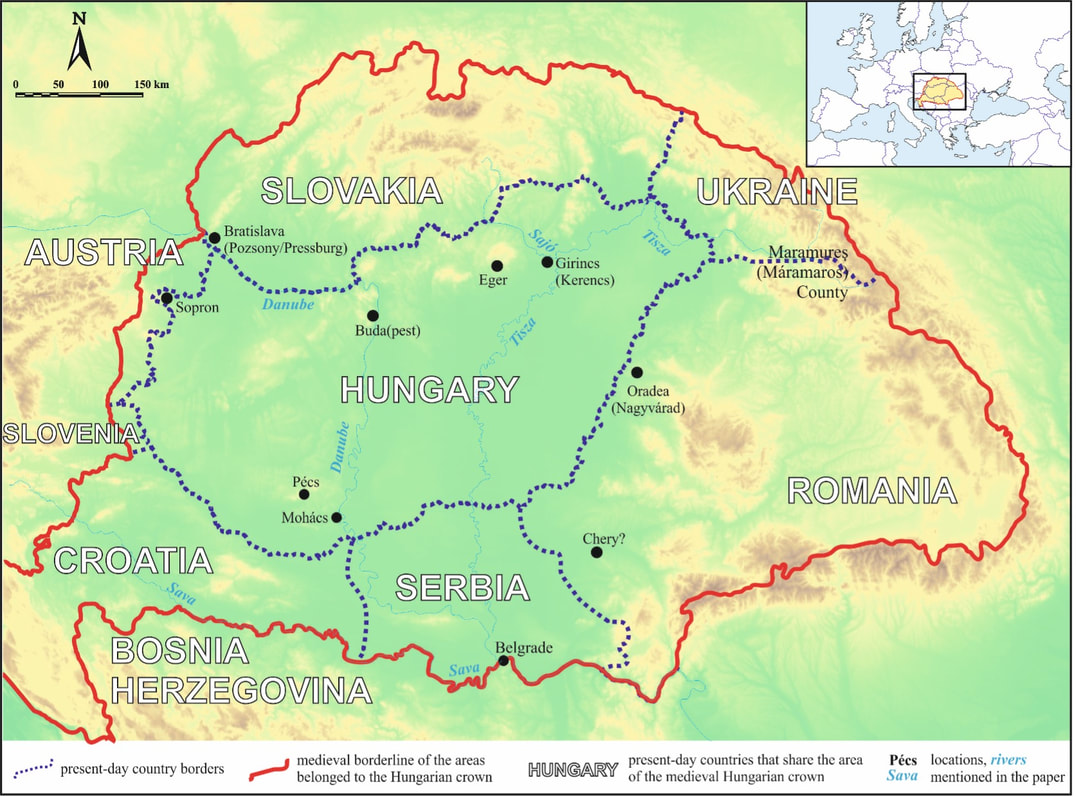

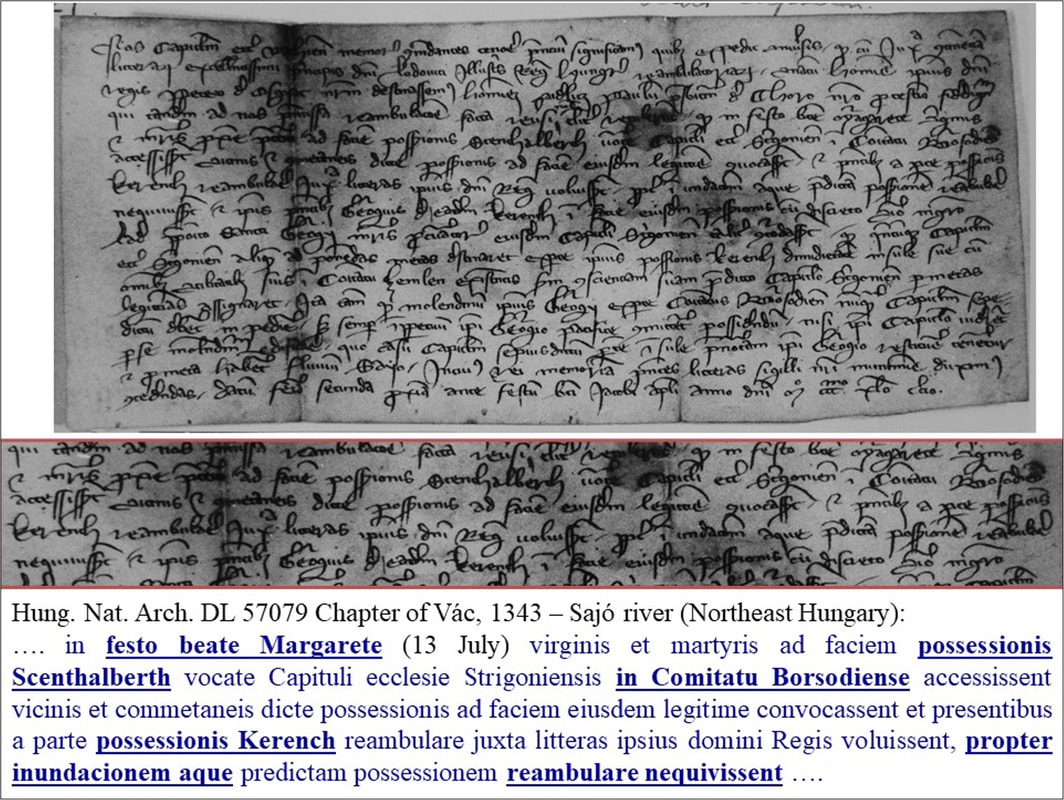

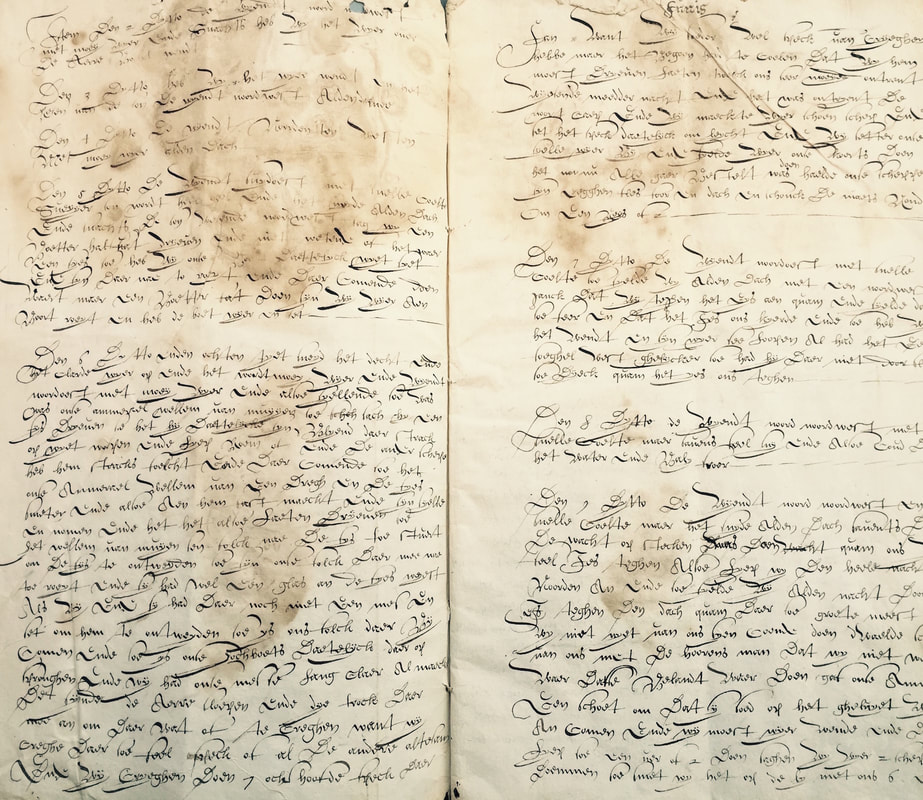

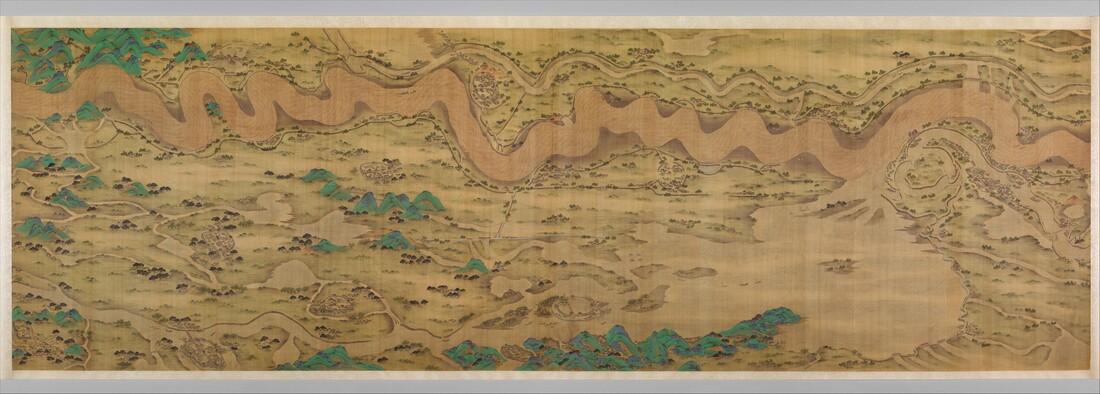

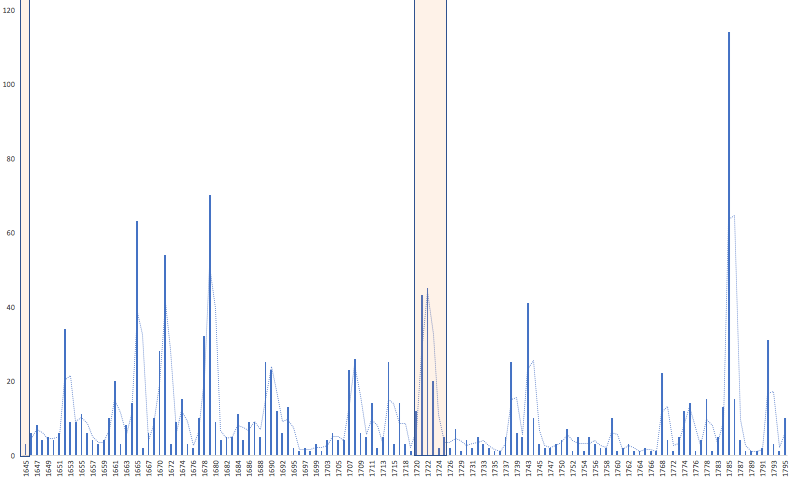

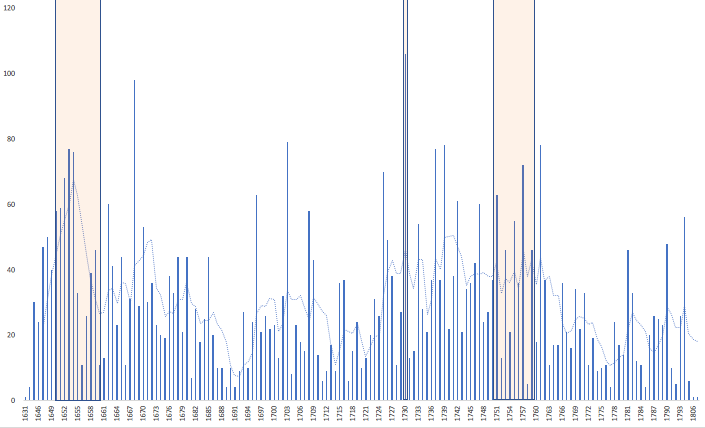

Dr. Andrea Kiss, Technische Universität Wien

General background

The countries of the Hungarian Crown - with the Hungarian kingdom the most prominent - represented one of the key political and military powers in East-Central Europe in the Middle Ages. The Hungarian kingdom alone (which included present-day Hungary, Slovakia, Eastern Austria, Eastern Slovenia, Western Ukraine, Western and Central Romania, Northern Serbia, and Northern Croatia) occupied an area (325,000 km²) larger than the entire British Isles. When combined with the 42,000 km² Slavonia (present-day Central, Northern Croatia and North-west Bosnia), it covered the entire Carpathian Basin (Fig. 1). Population density was well under the high-medieval average for Western Europe, but the region was less affected by the Black Death than other areas, so by the mid-14th century, the figure was more comparable. The end of the Middle Ages was marked by the Battle of Mohács (1526), when the Hungarian royal army was crushed by the incomparably larger Ottoman forces.

Isolated examples of documents referring to the weather survive from the high medieval (11th-13th century) period; records are more frequent from the mid-to-late 13th century and especially from the early 14th century. What makes the Carpathian Basin truly unique compared with the rest of Europe is the composition of contemporary source types, above all those containing weather-related information.

Contemporary sources – vivid real-life weather and flood reports

The medieval climate- and environment-related sources from the Carpathian Basin are rather special because, except for the mid-to-late 15th century, the main European sources of historical climate analysis (i.e., chronicles and annals) are mostly lacking. Instead, there are tens of thousands of particularly rich and detailed charters dating from the 13th and especially the early 14th centuries containing lengthy field surveys and descriptions of environmental and weather conditions.
Additionally, economic-administrative documents are available sporadically from the 14th century and systematically from the 1430s onwards; among these, the chamberlain accounts of Bratislava (historical Pozsony/Pressburg; hereafter Pressburg accounts) in particular provide a great deal of weather-related information (Fig. 2).

Fig. 2 Decadal distribution of weather-related source types (floods excluded; for floods, see: Kiss, 2019) in the medieval Carpathian Basin (1000-1500; work in progress), each source with a weather report taken as one case. Published data: Kiss 2013, 2014, 2016, 2017, 2020a; Kiss and Nikolić-Jakus, 2015.

Charters, letters, and town, estate and tithe accounts occasionally contain short but very realistic and punctual reports on actual weather conditions (e.g., rainy, snowy, foggy, hot, cold), weather extremes (e.g., storm, thunderstorm, hail, and so on), and weather-related extremes (especially floods and some of the great droughts); floods and hard winters tend to receive the most attention. Further data on weather and weather-related extreme events in Hungary are occasionally found in foreign narratives, mainly in the German-speaking areas and neighboring countries.

Thus, while narratives are overwhelmingly important in medieval European climate-history research, an almost complete lack of weather-related information in the few high- and late-medieval narratives relating to Hungary and the Carpathian Basin means that a historian of the region has to use sources rarely used elsewhere. This is especially true for the period up to the mid-15th century, from which point other major European source types – particularly narratives and town accounts – assume a greater significance.
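
To make the counting rule behind a decadal distribution of this kind concrete (each source with a weather report taken as one case), here is a minimal Python sketch. It is not the author's workflow: the function names (`decade_of`, `tally_by_decade`) are hypothetical, and the sample entries are invented placeholders, not records from the published datasets.

```python
from collections import Counter

# Hypothetical placeholder entries: (year of the document, source type).
# Invented for illustration only; NOT taken from the published data.
SAMPLE_SOURCES = [
    (1323, "charter"),
    (1327, "charter"),
    (1438, "account"),
]


def decade_of(year):
    """Return the first year of the decade a year falls in (1327 -> 1320)."""
    return (year // 10) * 10


def tally_by_decade(sources):
    """Count sources per (decade, source type), one case per source."""
    counts = Counter()
    for year, source_type in sources:
        counts[(decade_of(year), source_type)] += 1
    return counts


if __name__ == "__main__":
    for (decade, source_type), n in sorted(tally_by_decade(SAMPLE_SOURCES).items()):
        print(f"{decade}s  {source_type:<8} {n}")
```

Counting sources rather than individual weather remarks keeps a single long document from dominating a decade, which is presumably why the caption specifies one case per source.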
Unique legal documentation of weather and floods: field surveys, perambulations and other charter types

Charters are a unique medieval legal-administrative source type for climate history research in Hungary, a consequence of legal documentation practices that differed from those in most other parts of Europe. While charter production practices in high medieval times show no significant differences from other Central European types (East-Central European in particular), the documents become longer, more detailed, and often even annalistic from the 13th century. Nevertheless, it was the new royal dynasty of the early 14th century – the Italian Angevins, Charles Robert I in particular – that brought the real change and was also responsible for the boom in the number of charters, particularly from the 1320s (more details: Kiss 2019). Apart from the (donation) charter reports on famous political-military events that also mention weather extremes, the most distinctive branch of charters contains field surveys and perambulations. In a field survey or perambulation charter, the surveyors describe in detail processes and landscape features while walking along the boundaries, and point out obstacles – including weather- or flood-related ones – that hinder or obstruct them (example: Fig. 3). Moreover, these official reports were subject to further inspections, which lend additional support to the reliability and accuracy of their contents. Perambulators often made repeated attempts to complete their work; sometimes it had to be abandoned altogether because of extreme weather(-related) environmental conditions, for example great floods, deep snow, or a combination of such hindrances (e.g., Kiss, 2009, 2019). While charters are key sources for weather research for the whole period between the 13th and early-to-mid-16th centuries, they are crucially important in any climate reconstruction for the 1320s-1420s (Fig. 2; see also Kiss, 2014, 2016, 2020).
Nevertheless, other types of charter (e.g., prorogation and inquisitorial) can also contain weather-related entries, especially regarding travel and transportation. A particularly interesting, smaller group of charters is the royal donations: occasionally taking the form of narratives, with annalistic, detailed descriptions of important events or military campaigns, donation charters may also refer to the weather when it has an impact on the story. For example, information on the thawing of the snow and the subsequent downpour that led to the catastrophic defeat of the Mongols in Transylvania during the Second Mongol Invasion in 1285 was included in donation charters (see Kiss, 2014). Another important group of contemporary weather-related records is the legal documents issued by ecclesiastical jurisdictions. The canonization trial protocols of Saint Margaret, the Dominican nun (and royal princess), are a rare example: the official investigations – which were carried out by papal legates – took place in Buda(pest area) in 1276, seven years after her death, and contain the testimonies of over a hundred eyewitnesses. The most important miracle (as described by eyewitnesses) concerned two Danube ice jam floods that occurred in December 1267-January 1268; some of the witnesses also described sudden rain, muddy, inundated terrain, and gloomy weather. The church had its own legal system within (and beyond) the country: complaints against a member of the church, applications for a modification of ecclesiastical rights (e.g., establishing new parishes or changing boundaries), or inquiries into particular incidents were usually addressed to the local bishop or his administration. Avoiding the local bishop, some of these applications were directly addressed and sent to the pope, and are preserved in Avignon or Rome. 
Reaching their peak around the late 14th and the early 15th centuries, these sometimes contain references to weather or floods, while data on specific prayers and processions (rogation ceremonies) asking for rain are also known in a few individual cases (e.g., Kiss, 2016, 2017).

Official in the private and private in the official: (un)official letters and medieval memoirs

Official and/or private letters occasionally contain particularly important reports on weather extremes; these become more frequent in the 15th century. Their importance lies not only in their realistic and clearly dated eyewitness descriptions of these events but also in the information they provide on their social context and consequences. They offer insights into private, individual and/or popular perceptions of the events (in terms of religious belief or practical understanding). Authorship ranges from town citizens through medium- and high-status churchmen and noblemen up to kings and queens; occasionally, the letters of foreign legates provide interesting information on the subject. With rare exceptions, the letters show a rather practical, ‘survival mode’ approach to unusual/unexpected extreme weather. In addition to describing events, they usually focus on practical issues, for instance offering solutions and/or reorganizing subsequent activities. A good example is the case of extremely early ice cover described in a letter written by a Florentine legate in 1427. Ottoman troops had crossed the icy Danube near Belgrade in the south in mid-November (!) and caused great destruction in the region. In the meantime, the legate was waiting to cross the Sava River, which was impassable because of strong ice drifts (C. Tóth et al., 2020). Another interesting letter – written by the castellan to his lord, John of Hunyad – comes from the south-east (see Fig. 1), where a castle called Chery was swept away (!) by torrential waters just before mid-April 1443 (HNA DL 55253).
No further details of this extraordinary event were supplied; the communication was essentially a request for a (good) carpenter who could supervise and help in rebuilding the castle. A third typical example is the letter of Queen Beatrix (HNA DL 98454), written in March 1496, in which she asked the salt chamber of Máramaros (Maramureş-Ro) about a large consignment of salt that never arrived. This was a result of the very low water level (‘lack of water’) of the Tisza River in 1494 – a famous drought year that was also described by Antonio Bonfini (Kiss, 2019, 2020; Kiss and Nikolić-Jakus, 2015).

Amongst the private or semi-official documentation of the late medieval period, memoirs play a special role: not written to order, memoirs provide down-to-earth accounts and allow their authors to express their own private views, understanding, and perception of events. They also present ample data on the physical-social environments in which the events described can be understood and interpreted. Written by an eyewitness of Italian origin in 1243, about the First Mongol Invasion (1241–1242), the Carmen miserabile (Szentpétery, 1999) is, without doubt, the most famous high-medieval memoir (or letter) of East-Central Europe. The author, Master Rogerius, a clergyman (an archdeacon) living in (Nagy)Várad (Oradea-Ro), provides a shockingly realistic description of events: in addition to describing the Tatars and their massacres, and his own capture and successful escape, he includes precious information about the unusually cold winter, the deeply frozen Danube, and other weather-related environmental conditions that both helped and hindered the Mongols in their 1241-1242 invasion of the Carpathian Basin.
Another particularly interesting example is the memoir of Helene Kottanner – the wet nurse of the Hungarian king, Ladislaus V (1440-1458) – who provides us with detailed accounts of the weather during her travels in the central and western part of the country from winter through summer 1440 (Mollay, 1971). She writes about traveling in snow, moving through the Danube ice (almost disastrously), the rain and then heat, and the alternating dry and muddy roads in spring while crossing Transdanubia, and presents a vivid description of a huge early-summer torrential downpour near Sopron on the western border of the country. Her daily/weekly observations parallel those of the Pressburg accounts (see Kiss, 2020a).

Economic sources and the Pressburg chamberlain accounts (1434-1596)

The medieval and early modern estate and manorial accounts, tithe rolls, and income and spending accounts of (mainly royal) towns occasionally contain weather data from the late 14th century. With one exception, until the end of the 15th century the reports tend to be sporadic, occasionally describing the consequences of a one-off rainfall event or period, expenses incurred in ice cutting in estate or town accounts, or a major flood event that resulted in partial tax relief, documented in a tithe account. With the exception of the extensive estate accounts of the Bishop of Eger from the beginning of the 1500s – which contain an unusually high number of weather-related references for certain years, including the drought and dearth years of 1506 and 1507 (E. Kovács, 1992; Kiss, 2020b) – perhaps the most important weather-related economic sources are town chamberlain accounts.

Fig.
4 The view of historical Pozsony/Pressburg (Bratislava-Sk) from the south with the Danube in the foreground (Braun and Hogenberg, 1594), and a sample page (AMB K5, 25) from the chamberlain accounts from 14 (Gregorian calendar: 23) December 1442, with reports on snow-clearing, the freezing of the Danube and road preparation

Bratislava, the present-day capital of Slovakia – historical Pozsony (capital of the Hungarian kingdom after 1541), or Pressburg in German, the main language of the population and of the local administrative documents in the 15th-16th centuries (1434-1596) – has by far the most detailed, systematically presented town chamberlain accounts. Weather parameters such as precipitation (e.g., snow and rainfall), temperature (e.g., cold, freezing, various ice phenomena, Danube ice cover), strong winds, extreme weather (e.g., storms, torrential rain and flash floods) and related socio-economic information (e.g., high prices and transportation difficulties) are often mentioned when they have increased the cost of some of the everyday activities paid for by the town (Fig. 4). Because of the town's riverine position and the many kinds of river-related economic, travel, and transportation interests and activities in the Danube floodplain area and on the numerous islands, flooding and low water levels receive particular attention (Fig. 5).

Fig. 5 Example of medieval Danube floods and low flows mainly based on the Pressburg accounts, 1434–1535 (work in progress; source: AMB K1-86; see also Kiss, 2019, 2020a). Note three anomalous periods: the 1430s-early/mid-1440s (with both floods and low flows) marking the early Spörer solar minimum, the late 1470s–early 1500s and a period from the mid-1520s – coinciding with the mid- (and late?) Spörer minimum.
Two extraordinary-intensity events are highlighted; the heights of the 1501 ‘deluge’ remain unreported

Although individual flood and grain-harvest-based temperature reconstructions in Central Europe have already used data from town accounts (e.g., Wels, Austria: Rohr, 2006; Basel, Switzerland: Wetter and Pfister, 2011), the present research is novel because it draws not only on individual systematic chapters or phenology-/flood-related data types extracted from individual town accounts, but also on a systematic survey of the entire manuscript series, covering all direct and indirect information on the weather and extreme weather-related conditions. The accounts are of great importance because they cover almost the entire Spörer Minimum (and beyond), an unusually long period of low solar activity. With only a few gaps (mainly of half a year), the accounts straddle the late medieval and early modern periods, during which the administrative system and the methods of recording income and expenditure and related information remained largely the same. Because of the frequency and high resolution of the detail they contain, they are indispensable for pinpointing Danube flood events. Because they allow the annual-/seasonal-resolution flood (frequency, intensity, seasonality) reconstruction of a major European 500-year river flood series to be extended by almost a hundred years, the accounts form one of the series of key importance in large-scale multi-centennial flood analyses and reconstructions (see e.g. Blöschl et al., 2020).

Fig. 6 Example of Danube-ice-related information between 1434 and 1535 in the Pressburg accounts (work in progress; source: AMB K1-86). Colors: systematic mentions of Danube ice-related phenomena and activities. Three-level ice intensity indices (mixed accounts): 1=sporadic mention of ice over the winter: 1-3 cases; 2=average mention of ice (ca. 4-6), also in multiple places and times; 3=ice frequently mentioned and in great spatial, temporal (and vertical) extent.
Note two anomalous periods around the 1430s-1440s and the 1480s-1510s marking the hard winters of the early and mid-Spörer Minimum, and the cold winters of the (early) 1530s

Within the chamberlain accounts almost all chapters contain weather-related information, but perhaps the most important information comes from the ferry and bridge accounts, where data are available on a daily and weekly basis (bridges existed here over the entire Danube from the early 1430s). Furthermore, in separate chapters, references were regularly made to ice being cut in the town moat, snow-clearing works around the town hall, ships, and bridges, and floods (data included in Figs. 5, 6). The early parts of the accounts also contain a chapter that refers to payments to town messengers who sometimes had to endure harsh weather conditions, flooding, or the dangers associated with crossing the ice-covered Danube to carry messages to other areas of Hungary or Austria. Individual extreme events, sometimes real ‘delicacies’, are occasionally also described: for example, a case of mass soil erosion that occurred in summer 1458, when it appears that the earth on the hillside(s) over and around the town was washed down by torrential rain(s). On that occasion, the town paid to clean the streets and some of the roads of the sediment (maybe also left by a Danube flood?; source: AMB K26). Although such expenditure is often listed in separate chapters, weather-related information can be found in all kinds of payments, most especially in the longest category, the miscellaneous expenses (e.g., Kiss, 2019, 2020a).

Animated rivers, rainfall-triggered erosion, and the mid-winter blossoming of an almond tree: the brave new trend of humanism

The Italian Renaissance spread to and blossomed in Hungary from the second half of the 15th century; perceptions of nature, often with reference to real-life extreme weather(-related) events, frequently appeared in humanist writing.
Humanists such as Janus Pannonius (the Bishop of Pécs, who studied in Italy) and the Italian Antonio Bonfini (who was living in Hungary at the time) used weather and weather-related phenomena in their allegories, the details of which help us to date the occurrence of unusual events such as the mid-winter blossoming of an almond tree, winter snow-cover, or a great flood. In addition to his descriptions of significant late 15th-century droughts, Bonfini, in the Rerum Ungaricarum Decades (Fógel, 1941), refers to the flood that occurred after the death of King Matthias – which is also mentioned in Austrian sources and the Pressburg accounts (see Fig. 5) – where the Danube appears as a protective, living entity. A real ‘delicacy’ is the poem De inundacione of Janus Pannonius, who describes great torrential rainfall and a subsequent flood event involving the largest rivers in the South Carpathian Basin, and connects the event to the arrival of a famous comet (V. Kovács, 1972). His linking of the event to this comet – reported in Europe and all over the Northern Hemisphere (e.g. Hasegawa and Nakano, 1995; Kak, 2003; Martínez and Marco, 2016; de Carvalho, 2021) in autumn 1468 – has made it possible to connect this reference to a Danube flood reported around the same time in the Pressburg accounts. In this poem, as an allegory of the approaching apocalypse, Janus Pannonius presents a strikingly realistic ‘environmental catastrophe’ domino-model based on the cumulative effects of heavy rainfall, floods, subsequent soil degradation and mass erosion, the accumulation of infertile river sediments, and the multiannual destruction of grain and grapevine harvests (and stocks) that result in famine (see Kiss, 2019).

References