
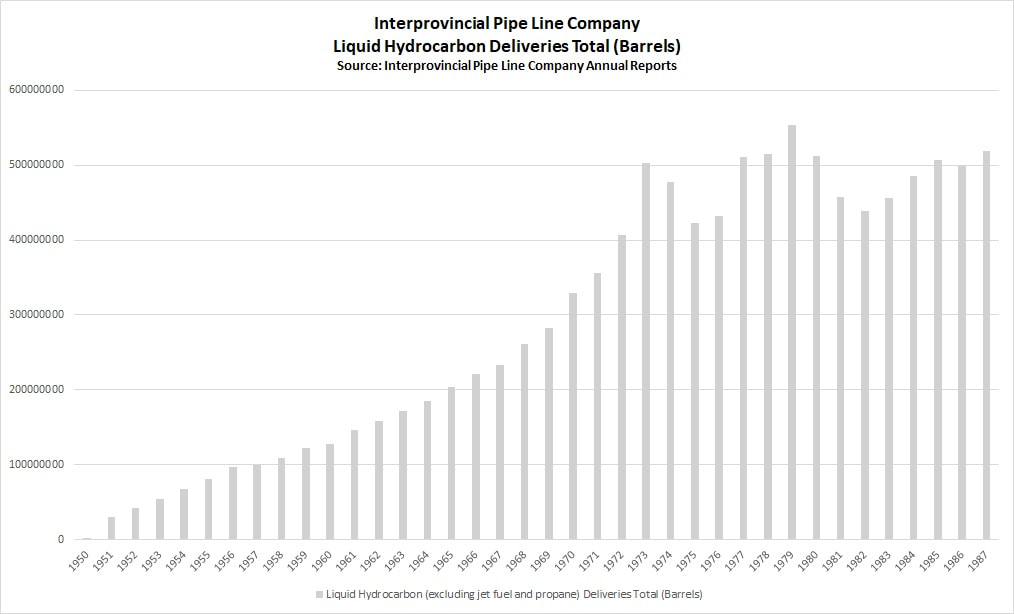

Prof. Sean Kheraj, York University.

This is the fifth post in a collaborative series titled “Environmental Historians Debate: Can Nuclear Power Solve Climate Change?” hosted by the Network in Canadian History & Environment, the Climate History Network, and ActiveHistory.ca.

If nuclear power is to be used as a stop-gap or transitional technology for the de-carbonization of industrial economies, what comes next? Energy history could offer new ways of imagining different energy futures. Current scholarship, unfortunately, mostly offers linear narratives of growth toward the development of high-energy economies, leaving little room to imagine low-energy futures. As a result, energy historians have rarely presented plausible ideas for low-energy futures and instead dwell on apocalyptic visions of poverty and the loss of precious, ill-defined “standards of living.”

The fossil fuel-based energy systems that wealthy, industrialized nation states developed in the nineteenth and twentieth centuries now threaten the habitability of the Earth for all people. Global warming lies at the heart of the debate over future energy transitions. While Nancy Langston makes a strong case for thinking about the use of nuclear power as a tool for addressing the immediate emergency of carbon pollution of the atmosphere, her arguments left me wondering what energy futures will look like after de-carbonization. Will industrialized economies continue with unconstrained growth in energy consumption, expand reliance on nuclear power, and press forward with new technological innovations to consume even more energy (Thorium reactors? Fusion reactors? Dilithium crystals?)? Or will profligate energy consumers finally lift their heads up from an empty trough and start to think about ways of living with less energy? Unfortunately, energy history has not been helpful in imagining low-energy possibilities.
For the past couple of years, I’ve been getting familiar with the field of energy history and, for the most part, it has been the story of more. [1] Energy history is related to environmental history, but it also incorporates economic history, the history of capitalism, social history, cultural history, and gender history (and probably more than that). My particular interest is in the history of hydrocarbons, but I’ve tried to take a wide view of the field and consider scholarship that examines energy history in deeper historical contexts. Several scholars have written books that consider the history of human energy use in deep time. For example, in 1982, Rolf Peter Sieferle began his long view of energy history in The Subterranean Forest: Energy Systems and the Industrial Revolution by considering Paleolithic societies. Alfred Crosby’s Children of the Sun: A History of Humanity’s Unappeasable Appetite for Energy (2006) begins its survey of human energy history with the advent of anthropogenic fire and its use in cooking. Vaclav Smil goes back to so-called “pre-history” at the start of Energy and Civilization: A History (2017) to consider the origins of crop cultivation. In each of these surveys, energy historians track the general trend of growing energy use. While they show some dips in consumption and some global and regional variation, the story they tell is precisely the one Crosby gives in his subtitle: a tale of humanity’s unappeasable appetite for greater and greater quantities of energy. The narrative of energy history in the scholarship is remarkably linear, verging on Malthusian. According to Smil: “Civilization’s advances can be seen as a quest for higher energy use required to produce increased food harvests, to mobilize a greater output and variety of materials, to produce more, and more diverse, goods, to enable higher mobility, and to create access to a virtually unlimited amount of information.
These accomplishments have resulted in larger populations organized with greater social complexity into nation-states and supranational collectives, and enjoying a higher quality of life.” [2]

Indeed, from a statistical point of view, it’s difficult not to reach the conclusion that humans have proceeded inexorably from one technological innovation to another, finding more ways of wrenching power from the Sun and Earth. The only interruptions along humanity’s path to high-energy civilization were war, famine, economic crisis, and environmental collapse.

Canada’s relatively short energy history appears to tell a similar story. As Richard W. Unger wrote in The Otter~la loutre recently, “Canadians are among the greatest consumers of energy per person in the world.” The history of energy consumption in Canada since Confederation shows steady growth and sudden acceleration with the advent of mass hydrocarbon consumption between the 1950s and 1970s. Steve Penfold’s analysis of Canadian liquid petroleum use focuses on this period of extraordinary, nearly uninterrupted growth in energy consumption. Only in 1979 did Canadian petroleum consumption momentarily dip in response to an economic recession. “What could have been an energy reckoning…” Penfold writes, “ultimately confirmed the long history of rising demand.” [3] I’ve seen much of what Penfold finds in my own research on the history of oil pipeline development in Canada. Take, for instance, the Interprovincial pipeline system, Canada’s largest oil delivery system. For much of Canada’s “Great Acceleration,” the history of more couldn’t be clearer.

This view of energy history as the history of more informs some of the conclusions (and predictions) of energy historians. Crosby is, perhaps, the most optimistic about the potential of technological innovation to resolve what he describes as humanity’s unsustainable use of fossil fuels.
In Crosby’s view, “the nuclear reactor waits at our elbow like a superb butler.” [4] For the most part, he is dismissive of energy conservation or radical reductions in energy consumption as alternatives to modern energy systems, which he admits are “new, abnormal, and unsustainable.” [5] Instead, he foresees yet another technological revolution as the pathway forward, carrying on with humanity’s seemingly endless growth in energy use.

Energy historians, much like historians of the Anthropocene, have a habit of generalizing humanity in their analysis of environmental change. As I wrote last year in The Otter~la loutre, “To understand the history of Canada’s Anthropocene, we must be able to explain who exactly constitutes the ‘anthropos.’” Energy historians might consider doing the same. The history of human energy use appears to be a story of more only when human energy use is considered in an undifferentiated manner. The pace of energy consumption in Canada, for instance, might look different when comparing the rich and the poor, settlers and Indigenous people, rural Canadians and urban Canadians. Around the world, energy histories tell stories beyond the history of more, including histories of low-energy societies and of energy decline. Most global energy histories focus on industrialized societies and say little about developing nations and the persistence of low-energy, subsistence economies.

If Smil is correct that “higher energy use by itself does not guarantee anything except greater environmental burdens,” then future decisions about energy use should probably consider lower-energy options. [6] Transitioning away from burning fossil fuels by using nuclear power may alleviate the immediate existential crisis of global warming, but confronting the environmental implications of high-energy societies may be the bigger challenge. To address that challenge, we may need to look back at histories of less.
Sean Kheraj is the director of the Network in Canadian History and Environment. He’s an associate professor in the Department of History at York University. His research and teaching focus on environmental and Canadian history. He is also the host and producer of Nature’s Past, NiCHE’s audio podcast series, and he blogs at http://seankheraj.com.

[1] I’m borrowing from Steve Penfold’s pointed summary of the history of gasoline consumption in Canada: “Indeed, at one level of approximation, you could reduce the entire history of Canadian gasoline to a single keyword: more.” See Steve Penfold, “Petroleum Liquids,” in Powering Up Canada: A History of Power, Fuel, and Energy from 1600, ed. R. W. Sandwell (Montreal: McGill-Queen’s University Press, 2016), 277.

[2] Vaclav Smil, Energy and Civilization: A History (Cambridge: MIT Press, 2017), 385.

[3] Penfold, “Petroleum Liquids,” 278.

[4] Alfred W. Crosby, Children of the Sun: A History of Humanity’s Unappeasable Appetite for Energy (New York: W.W. Norton, 2006), 126.

[5] Ibid., 164.

[6] Smil, Energy and Civilization, 439.

Dr. Robynne Mellor.

This is the third post in a collaborative series titled “Environmental Historians Debate: Can Nuclear Power Solve Climate Change?” hosted by the Network in Canadian History & Environment, the Climate History Network, and ActiveHistory.ca.

Shortly before uranium miner Gus Frobel died of lung cancer in 1978, he said, “This is reality. If we want energy, coal or uranium, lives will be lost. And I think society wants energy and they will find men willing to go into coal or uranium.”[1] Frobel understood that economists and governments had crunched the numbers. They had compared how many miners died in coal and uranium production to produce a given amount of energy. They had rationally worked out that giving up Frobel’s life was worth it.

I have come across these tables in archives. They lay out in columns the number of deaths to expect per megawatt-year of energy produced. They weigh the ratios of deaths in uranium mines to those in coal mines. They coolly walk through their methodology in reaching these conclusions. These numbers will show you that fewer people died in uranium mines than in coal mines to produce a given amount of energy. But the numbers do not include the pages and pages I have read of people remembering spouses, parents, siblings, children who died in their 30s, 40s, 50s, and so on. The numbers do not include details of these miners’ hobbies or snippets of their poetry; they don’t reveal the particulars of miners’ slow and painful wasting away. Miners are much easier to read about as death statistics. The erasure of these people trickles into debates about nuclear energy today.
Any argument that highlights the dangers of coal mining but entirely ignores the plight of uranium miners is based on this reasoning. Rationalizations that say coal is riskier are based on the reduction of lives to ratios. If we are going to make these arguments, we must first fully acknowledge what we are doing. We must be okay with what Gus Frobel said and meant: that someone is going to have to assume the risk of energy production and we are just choosing whom. We must realize that it is no accident that these Cold War calculations permeate our discourse today, and consider what that means moving forward.

Promoters of nuclear energy have always tapped into fears about the environment in order to get us to stop worrying and learn to love the power plant. The awesome power of the atom announced itself to the world in a double flash of death and destruction when the United States dropped nuclear bombs on Hiroshima and Nagasaki in August 1945. Following the end of World War II, growing tensions between the United States and the Soviet Union and the consequent Cold War helped spur a proliferation of nuclear weapons production. As nuclear technology became more important and sought after, governments around the world fought against nuclear energy’s devastating first impressions, which were difficult to dislodge from the minds of the public. From the earliest days, in order to combat the atom’s fearsome reputation and put a more positive spin on things, policymakers began promoting its potential peaceful applications.

Nuclear technology and the environment were intertwined in many complex and mutually reinforcing ways. From as early as the 1940s, as historian Angela Creager has shown, the US Atomic Energy Commission used the potential ecological and biological applications of radioisotopes as proof of the atom’s promising, non-military prospects.
By the 1950s, many hailed nuclear power as a way to escape resource constraints, underlining the comparatively small amount of uranium needed to produce the same amount of energy as coal. Using uranium was a way to conserve oil and coal for longer. In the 1960s, as the popular environmental movement grew, nuclear boosters appealed to the public’s concern for the planet by emphasizing the clean-burning qualities of nuclear energy. Environmentalism spread around the world, and environmental protection was slowly enshrined in law in several countries. Environmental concern and protection also became an important part of the Cold War battle for hearts and minds. Nuclear advocates successfully appealed to environmentalist sentiments by sidestepping certain problems, such as the intractable waste that the nuclear cycle produced, and emphasizing certain advantages, namely, the way it did not pollute the air.

The main arguments of Cold War-era nuclear champions live on to this day. For many pro-nuclear environmentalists, who found these arguments appealing, the reasons to support nuclear energy were and continue to be: less uranium than coal is needed to produce the same amount of energy; nuclear energy is clean burning; radiation is “natural” and not something to be feared; and using nuclear energy will give us time to figure out different solutions to the energy crisis, which was once thought of as a fossil fuel shortage and now leans more towards global warming. In broad strokes, then, these arguments are a Cold War holdover, and so are the anachronistic blind spots that accompany them. They portray nuclear power production as a single snapshot of a highly complex cycle. Nuclear is framed as “clean burning” for a reason: the period when it is burning is the only point when it can be considered clean.
This reasoning made more sense when first promulgated because a hubris accompanied nuclear technology, and part of this hubris was to assume that all of the issues that arose from nuclear technology could and would be solved. Though that confidence is long gone in general, it still lurks as an assumption that undergirds the argument for nuclear energy.

One of the biggest problems that we were once sure we could solve is nuclear waste disposal. This problem has not been solved. It grows more complex all the time, and the complexities tied up in the problem continue to multiply. Nuclear waste storage is still a stopgap measure, and most waste is still held on or near the surface in various locations, usually near where it is produced. The best long-term solution is a deep geological repository, but there are no such storage facilities for high-level radioactive waste yet. Several countries that have tried to build permanent repositories have faced both political and geological obstacles; the Yucca Mountain project in the United States, for example, was defunded by the government in 2012. Finland’s Onkalo repository is the most promising site. Many people who pay attention to these issues commend the Finnish government for successfully communicating with, and receiving consent from, the local community. But questions remain about why and how the people alive today can make decisions for people who will live on that land for the next 100,000 years. This timescale opens up various other questions about how to communicate risk through the millennia. Either way, we will not know whether Onkalo is ultimately successful for a very long time, while the kitty litter accident at the Waste Isolation Pilot Plant in New Mexico, USA, where a drum of radioactive waste ruptured in 2014, hints at how easily things can go wrong and defy careful models of risk.

Promoters continue to use language that clouds this issue.
Words such as “storage” and “disposal” obfuscate the inadequacies tied up in these so-called solutions. The truth is, disposal amounts to trying to keep waste from migrating by putting it somewhere and then trying to model the movements of the planet thousands of years into the future to make sure it stays where we put it. It is a catch-22. By ignoring the disposal problem, we kick the same can down the road that was kicked to us. By developing a disposal system, we just kick it really, really far into the future. Either way, an antiquated optimism persists in the belief that, one way or another, we will work it out, or that we have successfully planned for every contingency with our current solutions.

Even if they do so inadequately, advocates of nuclear power often do acknowledge the back-end of the nuclear cycle. They usually only do so to dismiss it, but at least it is addressed. By contrast, they entirely ignore the front-end of the cycle. This tendency is particularly strange because, when uranium is judged against fossil fuels, the ways that coal and oil are extracted enter the conversation, while the extraction of uranium is rarely considered in such terms. We think of coal and oil as things that come from the earth, but uranium is also mined, and its processing chain is just as complex as those of the other fuels we seek to replace with it. Discussions of nuclear energy hardly ever mention uranium mining, possibly because uranium mining increasingly occurs in marginalized landscapes that are out of sight and out of mind (northern Saskatchewan in Canada and Kazakhstan are currently the biggest producers).
But even for those who do pay attention to uranium mining, the problems associated with it are officially understood as something we have “figured out.” The prevailing narrative is that, yes, many uranium miners died from lung cancer linked to their work in uranium mines, and yes, a lot of waste was produced and then inadequately disposed of due to the pressures and expediencies of the Cold War nuclear arms race. But when officials acknowledged these problems, they implemented regulations and fixed them. It follows that, because there is no longer a nuclear arms race, and because health and environmental authorities understand and accept the risks associated with mining activities, they have appropriately addressed and mitigated the problems linked to uranium production. Moreover, nuclear power generation, because it is separate from the arms race and the nefarious human radiation experiments that accompanied it, is safer and better for miners and the communities that surround mines.

Some aspects of this narrative are true. Uranium miners around the world did labor with few protections through at least the late 1960s, after which conditions improved moderately in some places. Several governments introduced and standardized maximum exposure levels for radon progeny (the decay products of radon gas, itself produced as uranium decays, that cause lung cancer among miners). More mines had ventilation, monitoring increased, and many places banned miners from smoking underground. By the 1970s and 1980s, many countries considered the health problem solved.

The problem with this portrayal is that it is not clear how effective these regulations were. Allowing a few years for the implementation of regulations, most countries did not have mines at regulated exposure levels until at least the mid-1970s.
If we then allow for at least a fifteen-year latency period for lung cancer (the accepted minimum even with very high exposures), then lung cancer would not begin to show until, at the very least, the late 1980s or early 1990s. By this period, however, the uranium-mining industry was collapsing. The Three Mile Island accident in 1979, the Chernobyl accident in 1986, and the end of the Cold War arms race meant that plans for nuclear energy stalled and the demand for uranium plummeted. The uranium that did continue to be produced came from new mining regions and new cohorts of workers, or it affected people and places that the public and media ignored, or technology shifted so that fewer people faced the risks of underground uranium mining. There is little information about whether and how the risks miners faced changed. There is also a dearth of information about how these post-regulation miners compare to their pre-regulation counterparts. One preliminary examination of Canadian uranium miners, however, shows that miners who began work after 1970 had an increased risk of lung cancer mortality similar to that of miners who began work in earlier decades. This suggests either that radon progeny reduction was ineffective and radon progeny levels in mines were erroneously reported, or that there is something about the health risks in mines that is not quite understood.

There is another relatively well-known narrative about uranium mining that some commentators point to as something we have figured out and corrected. Due to the extremely effective activism of the Navajo Nation, beginning in the 1970s and continuing through to the present, many people are aware of the hardships Navajo uranium miners faced and, to a lesser degree, the continued legacy of abandoned mines and tailings piles with which they have to contend.
High-profile advocates for the Navajo, such as former secretary of the interior Stewart Udall, along with several journalistic and scholarly books on Navajos and uranium mining, have added to this awareness. Few people who point to the Navajo case realize that there is still a lot of confusion surrounding the long-term effects of uranium mining on Navajo land. It is an ongoing problem with unsatisfactory answers. Moreover, even though Navajo activists were adept at attracting attention to the problems they faced, many other uranium-mining communities cannot, do not want to, or have not been able to garner the same attention.

Uranium mining happened and continues to happen around the world, even though the health risks are poorly understood. It is changing human bodies and landscapes to this day and affecting thousands of miners and communities. Those who work in mines are still making the trade-off between the employment the mine offers on the one hand, and the higher risk of lung cancer on the other.

The environmental effects of uranium mining are also poorly understood and inadequately managed with a view to the long term. When mines are in operation, the waste from uranium mills, called tailings, is usually stored in wet ponds or dry piles. Those who operate uranium mills try to keep these tailings from moving, and government authorities often regulate these efforts, but tailings still seep into water, spread into soil, and migrate through food chains. These problems relate to mines and mills in operation, but companies and governments also face several problems with regard to mines and mills that are no longer in operation. The production of uranium has left landscapes with many neglected abandoned mines, as well as millions of tons of radioactive and toxic tailings.
There are no good numbers for worldwide uranium tailings, but the International Atomic Energy Agency has estimated that the United States alone has produced 220 million tons of mill tailings and 220 million tons of uranium mine wastes. Waste from uranium production is managed in similar ways around the world. Using the same euphemistic language employed for nuclear waste coming out of the back-end of the nuclear cycle, tailings from uranium mills are often “disposed.” What disposal usually means is gathering tailings in one area, creating some kind of barrier to prevent erosion (this barrier can be vegetation, water, or rock), and then monitoring the tailings indefinitely to ensure they do not move. The question that follows is whether or not these tailings are harmful, and the truly unsatisfactory answer is that we do not know. Studies that consider how tailings affect the health of surrounding communities are scarce, while those that do exist are conflicting, inconclusive, and often problematic. While some studies, with a particular focus on cancer and death, argue that there are no increased illnesses linked to living in former uranium-mining areas, others have connected wastes from uranium production to various ailments, including kidney disease, hypertension, diabetes, and compromised immune system function.

Now, half of all uranium production around the world uses in situ leaching or in situ recovery to extract uranium. Basically, uranium companies inject an oxidizing agent into an ore body, dissolve the uranium, and then pump the solution out and mill it without first having to mine it. The official line of thinking is that there are negligible environmental impacts stemming from this form of extraction. It certainly reduces risks for miners, but it is unlikely to leave the environment unaffected.
The environmentalist argument for nuclear energy, particularly the clean-burning component, is very appealing at a time when our biggest concern is climate change. Still, nuclear power is a band-aid technofix with many unknowns. The discussion surrounding nuclear energy has never fully grappled with the entire scope of the nuclear cycle, nor has it addressed the unique aspects of producing energy from a metal, which have no parallels with fossil fuels. Making an argument about nuclear energy means examining all its risks in comparison with fossil fuels, and then coming to terms with the wealth of unknowns. It also means remembering and keeping in mind the bodies and landscapes making this option possible. To be a nuclear power advocate, especially as an environmentalist, one must also be an advocate for the safety of all nuclear workers. The problems uranium miners and uranium-mining communities faced were never fully resolved, and they are not fully understood. To promote nuclear power means to pay attention to the people and places that produce uranium and to fight to make sure they receive the protections they deserve for helping us carve our way out of this current problem.

Robynne Mellor received her PhD in environmental history from Georgetown University, and she studies the intersection of the environment and the Cold War. Her research focuses on the environmental history of uranium mining in the United States, Canada, and the Soviet Union. She tweets at @RobynneMellor.

[1] Gus Frobel, quoted in Lloyd Tataryn, Dying for a Living (Deneau and Greenberg Publishers, 1979), 100.

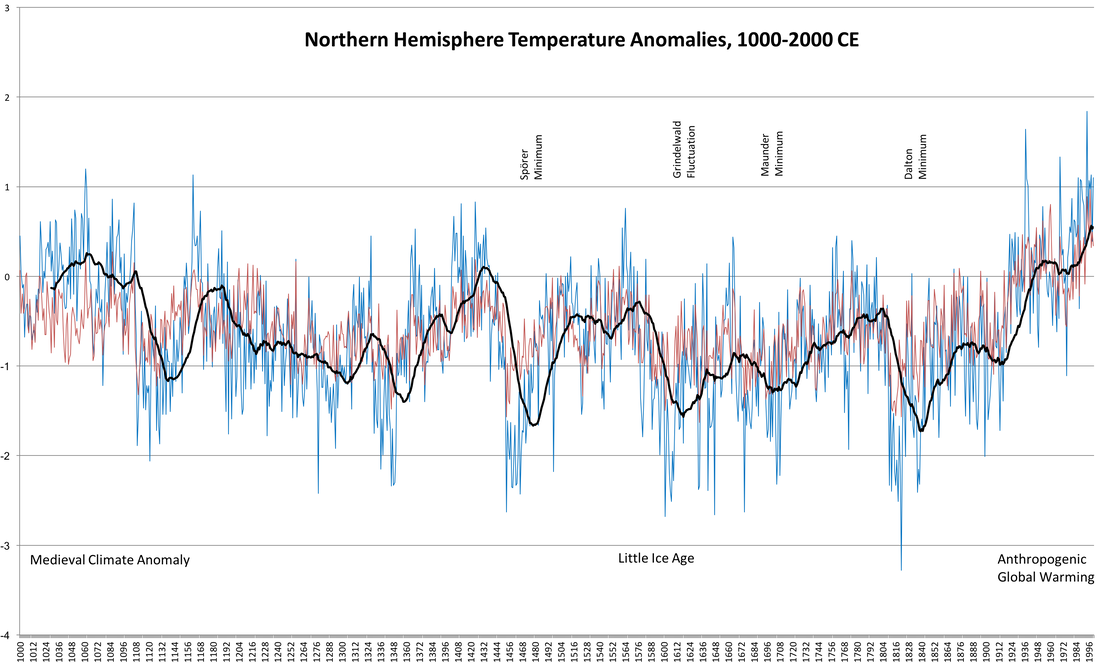

Prof. Dagomar Degroot, Georgetown University.

Roughly 11,000 years ago, rising sea levels submerged Beringia, the vast land bridge that once connected the Old and New Worlds. Vikings and perhaps Polynesians briefly established a foothold in the Americas, but it was the voyage of Columbus in 1492 that firmly restored the ancient link between the world’s hemispheres. Plants, animals, and pathogens – the microscopic agents of disease – never before seen in the Americas now arrived in the very heart of the western hemisphere. It is commonly said that few organisms spread more quickly, or with more horrific consequences, than the microbes responsible for measles and smallpox. Since the original inhabitants of the Americas had never encountered them before, millions died.

The great environmental historian Alfred Crosby first popularized these ideas in 1972. It took over thirty years before a climatologist, William Ruddiman, added a disturbing new wrinkle. What if so many people died so quickly across the Americas that it changed Earth’s climate? Abandoned fields and woodlands, once carefully cultivated, must have been overrun by wild plants that would have drawn huge amounts of carbon dioxide out of the atmosphere. Perhaps that was the cause of a sixteenth-century drop in atmospheric carbon dioxide, which scientists had earlier uncovered by sampling ancient bubbles in polar ice sheets. By weakening the greenhouse effect, the drop might have exacerbated cooling already underway during the “Grindelwald Fluctuation”: an especially frigid stretch of a much older cold period called the “Little Ice Age.”

Last month, an extraordinary article by a team of scholars from University College London captured international headlines by uncovering new evidence for these apparent relationships.
The authors calculate that nearly 56 million hectares previously used for food production must have been abandoned in just the century after 1492, when they estimate that epidemics killed 90% of the roughly 60 million people indigenous to the Americas. They conclude that roughly half of the simultaneous dip in atmospheric carbon dioxide cannot be accounted for unless wild plants grew rapidly across these vast territories.

On social media, the article went viral at a time when the Trump Administration’s wanton disregard for the lives of Latin American refugees seems matched only by its contempt for climate science. For many, the links between colonial violence and climate change never appeared clearer – or more firmly rooted in the history of white supremacy.

Some may wonder whether it is wise to quibble with science that offers urgently needed perspectives on very real, and very alarming, relationships in our present. Yet bold claims naturally invite questions and criticism, and so it is with this new article. Historians – who were not among the co-authors – may point out that the article relies on dated scholarship to calculate the size of pre-contact populations in the Americas, and the causes of their decline. Newer work has in fact found little evidence for pan-American pandemics before the seventeenth century.

More importantly, the article’s headline-grabbing conclusions depend on a chain of speculative relationships, each with enough uncertainties to call the entire chain into question. For example, some cores extracted from Antarctic ice sheets appear to reveal a gradual decline in atmospheric carbon dioxide during the sixteenth century, while others apparently show an abrupt fall around 1590. Part of the reason may have to do with local atmospheric variations. Yet the difference cannot be dismissed, since it is hard to imagine how gradual depopulation could have led to an abrupt fall in 1590.
To take another example, the article leans on computer models and datasets that estimate the historical expansion of cropland and pasture. Models cited in the article suggest that the area under human cultivation steadily increased from 1500 until 1700: precisely the period when its decline supposedly cooled the Earth. An increase would make sense, considering that the world’s human population likely rose by as many as 100 million people over the course of the sixteenth century. Meanwhile, merchants and governments across Eurasia depleted woodlands to power new industries and arm growing militaries.

Figure: Changes in the extent and distribution of historical cropland, 3000 BCE to the present, according to the HYDE 3.1 database of human-induced global land use change.

In any case, models and datasets may generate tidy numbers and figures, but they are by nature inexact tools for an era when few kept careful or reliable track of cultivated land. Models may differ enormously in their simulations of human land use; one, for example, shows 140 million more hectares of cropland than another for the year 1700. Remember that, according to the new article, the abandonment of just 56 million hectares in the Americas supposedly cooled the planet just a century earlier!

While we can make educated guesses about land use changes across Asia or Europe, we know next to nothing about what might have happened in sixteenth-century Africa. Demographic changes across that vast and diverse continent may well have either amplified or diminished the climatic impact of depopulation in the Americas. And even in the Americas, we cannot easily model the relationship between human populations and land use. Surging populations of animals imported by Europeans, for example, may have chewed through enough plants to hold off advancing forests.
Moreover, the early death toll in the Americas was often especially high in communities at high elevations, where the tropical trees that absorb the most carbon could not grow. In short, we cannot firmly establish that depopulation in the Americas cooled the Earth. For that reason, it misses the point to think of the new article as either “wrong” or “right”; rather, we should view it as a particularly interesting contribution to an ongoing academic conversation. Journalists in particular should also avoid exaggerating the article’s conclusions. The co-authors never claim, for example, that depopulation “caused” the Little Ice Age, as some headlines announced, nor even the Grindelwald Fluctuation. At most, they suggest, it worsened cooling already underway during that especially frigid stretch of the Little Ice Age.

For all the enduring questions it provokes, the new article draws welcome attention to the enormity of what it calls the “Great Dying” that accompanied European colonization, which was really more of a “Great Killing” given the deliberate role that many colonizers played in the disaster. It also highlights the momentous environmental changes that accompanied the European conquest. The so-called “Age of Exploration” linked not only the Americas but many previously isolated lands to the Old World, in complex ways that nevertheless reshaped entire continents to look more like Europe. We are still reckoning with, and contributing to, the resulting massive decline in plant and animal biomass and diversity. Not for nothing do some date the “Anthropocene,” the proposed geological epoch distinguished by human dominion over the natural world, to the sixteenth century.

All of these issues also shed much-needed light on the Little Ice Age. Whatever its cause, we now know that climatic cooling had profound consequences for contemporary societies.
Cooling and associated changes in atmospheric and oceanic circulation provoked harvest failures that all too often resulted in famines. In community after community, the malnourished repeatedly fell victim to outbreaks of epidemic disease, and mounting misery led many to take up arms against contemporary governments. Some communities and societies were resilient, even adaptive, in the face of these calamities, but often partly by taking advantage of the less fortunate. Whether or not the New World genocide led to cooling, the sixteenth and seventeenth centuries offer plenty of warnings for our time.

My thanks to Georgetown environmental historians John McNeill and Timothy Newfield for their help with this article, to paleoclimatologist Jürg Luterbacher for answering my questions about ice cores, and to colleagues who responded to my initial reflections on social media.

Works Cited:

Archer, S. “Colonialism and Other Afflictions: Rethinking Native American Health History.” History Compass 14 (2016): 511-21.

Crosby, Alfred W. “Conquistador y pestilencia: the first New World pandemic and the fall of the great Indian empires.” The Hispanic American Historical Review 47:3 (1967): 321-337.

Crosby, Alfred W. The Columbian Exchange: Biological and Cultural Consequences of 1492. Westport: Greenwood Press, 1972.

Crosby, Alfred W. Ecological Imperialism: The Biological Expansion of Europe, 900-1900. 2nd ed. Cambridge: Cambridge University Press, 2004.

Degroot, Dagomar. “Climate Change and Society from the Fifteenth Through the Eighteenth Centuries.” WIREs Climate Change, Advanced Review. doi:10.1002/wcc.518.

Degroot, Dagomar. The Frigid Golden Age: Climate Change, the Little Ice Age, and the Dutch Republic, 1560-1720. New York: Cambridge University Press, 2018.

Gade, Daniel W. “Particularizing the Columbian exchange: Old World biota to Peru.” Journal of Historical Geography 48 (2015): 30.

Goldewijk, Kees Klein, Arthur Beusen, Gerard Van Drecht, and Martine De Vos. “The HYDE 3.1 spatially explicit database of human-induced global land-use change over the past 12,000 years.” Global Ecology and Biogeography 20:1 (2011): 73-86.

Jones, Emily Lena. “The ‘Columbian Exchange’ and landscapes of the Middle Rio Grande Valley, AD 1300–1900.” The Holocene (2015): 1704.

Kelton, Paul. “The Great Southeastern Smallpox Epidemic, 1696-1700: The Region’s First Major Epidemic?” In R. Ethridge and C. Hudson, eds., The Transformation of Southeastern Indians, 1540-1760.

Koch, Alexander, Chris Brierley, Mark M. Maslin, and Simon L. Lewis. “Earth system impacts of the European arrival and Great Dying in the Americas after 1492.” Quaternary Science Reviews 207 (2019): 13-36.

McCook, Stuart. “The Neo-Columbian Exchange: The Second Conquest of the Greater Caribbean, 1720-1930.” Latin American Research Review 46:4 (2011): 13.

McNeill, J. R. “Woods and Warfare in World History.” Environmental History 9:3 (2004): 388-410.

Melville, Elinor G. K. A Plague of Sheep: Environmental Consequences of the Conquest of Mexico. Cambridge: Cambridge University Press, 1997.

PAGES2k Consortium. “A global multiproxy database for temperature reconstructions of the Common Era.” Scientific Data 4 (2017). doi:10.1038/sdata.2017.88.

Parker, Geoffrey. Global Crisis: War, Climate Change and Catastrophe in the Seventeenth Century. New Haven: Yale University Press, 2013.

Riley, James C. “Smallpox and American Indians Revisited.” Journal of the History of Medicine and Allied Sciences 65 (2010): 445-77.

Ruddiman, William. “The Anthropogenic Greenhouse Era Began Thousands of Years Ago.” Climatic Change 61 (2003): 261-93.

Ruddiman, William. Plows, Plagues, and Petroleum: How Humans Took Control of Climate. Princeton, NJ: Princeton University Press, 2005.

Sigl, Michael, et al. “Timing and climate forcing of volcanic eruptions for the past 2,500 years.” Nature 523:7562 (2015): 543.

Williams, Michael. Deforesting the Earth: From Prehistory to Global Crisis. Chicago: University of Chicago Press, 2002.
