
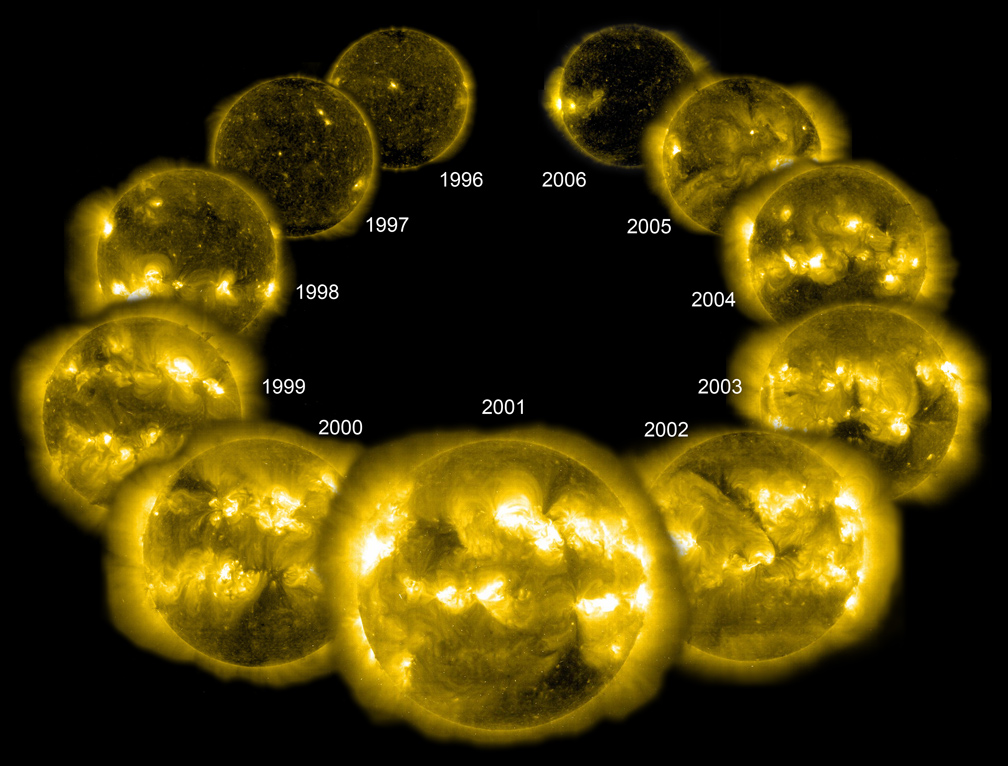

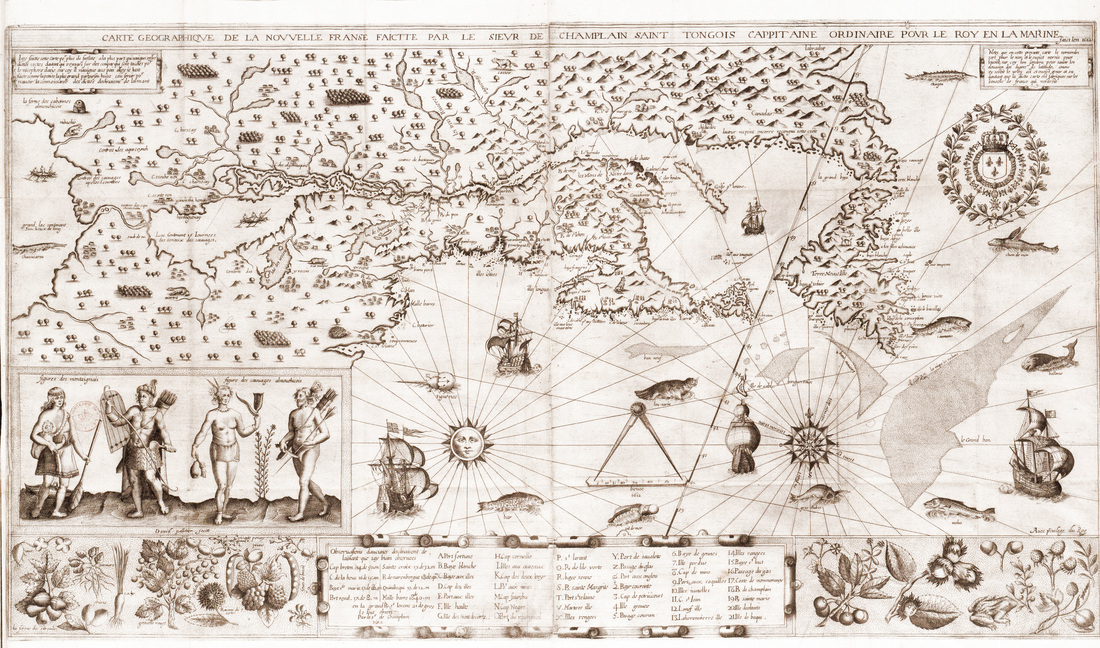

It's Maunder Minimum Month at HistoricalClimatology.com. This is our first of two feature articles on the Maunder Minimum. The second, by Gabriel Henderson of Aarhus University, will examine how astronomer John Eddy developed and defended the concept.

Although it may seem like the sun is one of the few constants in Earth’s climate system, it is not. Our star undergoes both an 11-year cycle of waning and waxing activity, and a much longer seesaw in which “grand solar minima” give way to “grand solar maxima.” During the minima, which set in approximately once per century, solar radiation declines, sunspots vanish, and solar flares are rare. During the maxima, by contrast, the sun crackles with energy, and sunspots riddle its surface.

The most famous grand solar minimum of all is undoubtedly the Maunder Minimum, which endured from approximately 1645 until 1720. It was named after Edward Maunder, a nineteenth-century astronomer who painstakingly reconstructed European sunspot observations. The Maunder Minimum has become synonymous with the Little Ice Age, a period of climatic cooling that, according to some definitions, endured from around 1300 to 1850, but reached its chilliest point in the seventeenth century. During the Maunder Minimum, temperatures across the Northern Hemisphere declined, relative to twentieth-century averages, by about one degree Celsius. That may not sound like much – especially in a year that is, globally, still more than one degree Celsius hotter than those same averages – but consider: seventeenth-century cooling was sufficient to contribute to a global crisis that destabilized one society after another. As growing seasons shortened, food shortages spread, economies unraveled, and rebellions and revolutions were quick to follow. Cooling was not always the primary cause for contemporary disasters, but it often played an important role in exacerbating them.
Many people – scholars and journalists included – have therefore assumed that any fall in solar activity must lead to chillier temperatures. When solar modelling recently predicted that a grand solar minimum would set in soon, some took it as evidence of an impending reversal of global warming. I even received an email from a heating appliance company that encouraged me to hawk their products on this website, so our readers could prepare for the cooler climate to come! Of course, the warming influence of anthropogenic greenhouse gases will overwhelm any cooling brought about by declining solar activity. In fact, scientists still dispute the extent to which grand solar minima or maxima actually triggered past climate changes. What seems certain is that especially warm and cool periods in the past overlapped with more than just variations in solar activity. Granted, many of the coldest decades of the Little Ice Age coincided with periods of reduced solar activity: the Spörer Minimum, from around 1450 to 1530; the Maunder Minimum, from 1645 to 1720; and the Dalton Minimum, from 1790 to 1820. However, one of the chilliest periods of all – the Grindelwald Fluctuation, from 1560 to 1630 – actually unfolded during a modest rise in solar activity. Volcanic eruptions, it seems, also played an important role in bringing about cooler decades, as did the natural internal variability of the climate system. Both the absence of eruptions and a grand solar maximum likely set the stage for the Medieval Warm Period, which is now more commonly called the Medieval Climate Anomaly. This gets to the heart of what we actually mean when we use a term like “Maunder Minimum” to refer to a period in Earth’s climate history. Are we talking about a period of low solar activity? Or are we referring to an especially cold climatic regime? Or are we talking about chilly temperatures and the changes in atmospheric circulation that cooling set in motion? 
In other words: what do we really mean when we say that the Maunder Minimum endured from 1645 to 1720? How does our choice of dates affect our understanding of relationships between climate change and human history in this period?

To find an answer to these questions, we can start by considering the North Sea region. This area has yielded some of the best documentary sources for climate reconstructions. They allow environmental historians like me to dig into exactly the kinds of weather that grew more common with the onset of the Maunder Minimum. In Dutch documentary evidence, for example, we see a noticeable cooling trend in average seasonal temperatures that begins around 1645. On the surface of things, it seems like declining solar activity and climate change are very strongly correlated. And yet, other weather patterns seem to change later, one or two decades after the onset of regional cooling. Weather variability from year to year, for example, becomes much more pronounced after around 1660, and that erraticism is often associated with the Maunder Minimum. Severe storms were more frequent only by the 1650s or perhaps the 1660s, and again, such storms are also linked to the Maunder Minimum climate. In the autumn, winter, and spring, easterly winds – a consequence, perhaps, of a switch in the setting of the North Atlantic Oscillation – increased at the expense of westerly winds in the 1660s, not twenty years earlier.

A depiction of William III boarding his flagship prior to the Glorious Revolution of 1688. Persistent easterly, "Protestant" winds brought William's fleet quickly across the Channel, and thereby made possible the Dutch invasion of England. For more, read my forthcoming book, "The Frigid Golden Age." Source: Ludolf Bakhuizen, "Het oorlogsschip 'Brielle' op de Maas voor Rotterdam," 1688.

All of these weather conditions mattered profoundly for the inhabitants of England and the Dutch Republic: maritime societies that depended on waterborne transportation. Rising weather variability made it harder for farmers to adapt to changing climates, but often made it more profitable for Dutch merchants to trade grain. More frequent storms sank all manner of vessels but sometimes quickened journeys, too. Easterly winds gave advantages to Dutch fleets sailing into battle from the Dutch coast, but westerly winds benefitted English armadas. If we define the Maunder Minimum as a climatic regime, not (just) a period of reduced sunspots, and if we care about its human consequences, what should we conclude? Did the Maunder Minimum reach the North Sea region in 1645, or 1660?

These problems grow deeper when we turn to the rest of the world. Across much of North America, temperature fluctuations in the seventeenth century did not closely mirror those in Europe. There was considerable diversity from one North American region to another. Tree ring data suggests that northern Canada experienced the cooling of the Maunder Minimum. Western North America also seems to have been relatively chilly in the seventeenth century, although chillier temperatures there probably did not set in during the 1640s. By contrast, cooling was moderate or even non-existent across the northeastern United States. Chesapeake Bay, for instance, was warm for most of the seventeenth century, and only cooled in the eighteenth century. Glaciers advanced in the Canadian Rockies not in the seventeenth century, but rather during the early eighteenth century. Their expansion was likely caused by an increase in regional precipitation, not a decrease in average temperatures. Still, the seventeenth century was overall chillier in North America than the preceding or subsequent centuries, and landmark cold seasons affected both shores of the Atlantic.
The consequences of such frigid weather could be devastating. The first settlers to Jamestown, Virginia had the misfortune of arriving during some of the chilliest and driest weather of the Little Ice Age in that region. Crop failures contributed to the dreadful mortality rates endured by the colonists, and to the brief abandonment of their settlement in 1610. Moreover, many parts of North America do seem to have warmed in the wake of the Maunder Minimum, in the eighteenth century. This too could have profound consequences. In the seventeenth century, settlers to New France had been surprised to discover that their new colony was far colder than Europe at similar latitudes. They concluded that its heavy forest cover was to blame, and with good reason: forests do create cooler, cloudier microclimates. Just as the deforestation of New France started transforming, on a huge scale, the landscape of present-day Quebec, the Maunder Minimum ended. Settlers in New France concluded that they had civilized the climate of their colony, and they used this as part of their attempts to justify their dispossession of indigenous communities. Despite eighteenth-century warming in parts of North America, the dates we assign to the Maunder Minimum do look increasingly problematic when we look beyond Europe. If we turn to China, we encounter a similar story. Much of China was actually bitterly cold in the 1630s and early 1640s, before the onset of the Maunder Minimum elsewhere. This, too, had important consequences for Chinese history. Cold weather and precipitation extremes ruined crops on a vast scale, contributing to crushing famines that caused particular distress in overpopulated regions. The ruling Ming Dynasty seemed to have lost the “mandate of heaven,” the divine sanction that, according to Confucian doctrine, kept the weather in check. 
Deeply corrupt, riven by factional politics, undermined by an obsolete examination system for aspiring bureaucrats, and scornful of martial culture, the regime could adequately address neither widespread starvation, nor the banditry it encouraged. Climatic cooling caused even more severe deprivations in neighboring, militaristic Manchuria. There, the solution was clear: to invade China and plunder its wealth. The first Manchurian raid broke through the Great Wall in 1629, a warm year in other parts of the Northern Hemisphere. Ultimately, the Manchus capitalized on the struggle between Ming and bandit armies by seizing China and founding the Qing (or "Pure") Dynasty in 1644.

China under the Ming Dynasty was arguably the most powerful empire of its time. Even as it unravelled in the early seventeenth century, its cultural achievements were impressive, as this painting of fog makes clear. Source: Anonymous, "Peach Festival of the Queen Mother of the West," early 17th century.

This entire history of cooling and crisis predates the accepted starting date of the Maunder Minimum. Yet, the fall of the Ming Dynasty unfolded in one relatively small part of present-day China. Average temperatures in that region reached their lowest point in the 1640s. By contrast, average temperatures in the Northeast warmed by the middle of the seventeenth century. Average temperatures in the Northwest also warmed slightly during the mid-seventeenth century, and then cooled during the late Maunder Minimum.

Smoothed graphs that show fluctuations in average temperature across centuries or millennia give the impression that dating decade-scale warm or cold climatic regimes is an easy matter. Actually, attempts to precisely date the beginning and end of just about any recent climatic regime are sure to set off controversy.
This is not only because global climate changes have different manifestations from region to region, but also because climate changes, as we have seen, involve much more than shifts in average annual temperature. Did the Maunder Minimum reach northern Europe, for instance, when average annual temperatures declined, when storminess increased, when annual precipitation rose or fell, or when weather became less predictable? Historians such as Wolfgang Behringer have argued that, when dating climatic regimes, we should also consider the “subjective factor” of human reactions to weather. For historians, it makes little sense to date historical periods according to wholly natural developments that had little impact on human beings. Maybe historians of the Maunder Minimum should consider not when temperatures started declining, but rather when that decline was, for the first time, deep enough to trigger weather that profoundly altered human lives. When we consider climate changes in this way, we may be more inclined to subjectively date climatic regimes using extreme events, such as especially cold years, or particularly catastrophic storms. Dating climate changes with an eye to human consequences does take historians away from the statistical methods and conclusions pioneered by scientists, but it also draws them closer to the subjects of historical research. In my work, I do my best to combine all of these definitions, and incorporate many of these complexities. I date climatic regimes by considering their cause – solar, volcanic, or perhaps human – and by working with statisticians who can tell me when a trend becomes significant. However, I also try to consider the many different kinds of weather associated with a climatic shift, and the consequences that extremes in such weather could have for human beings. As you might expect, this is not always easy. I have long held that the Maunder Minimum, in the North Sea region, began around 1660. 
Increasingly, I find it easier to begin with the broadly accepted date of 1645, but distinguish between different phases of the Maunder Minimum. An earlier phase marked by cooling might have started in 1645, but a later phase marked by much more than cooling took hold around 1660.

These are messy issues that yield messy answers. Yet we must think deeply about these problems. Not only can such thinking affect how we make sense of the deep past, but it can also provide new perspectives on modern climate change. When did our current climate of anthropogenic warming really start? At what point did it start influencing human history, and where? What can that tell us about our future? These questions can yield insights on everything from the contribution of climate change to present-day conflicts, to the timing of our transition to a thoroughly unprecedented global climate, to the urgency of mitigating greenhouse gas emissions.

~Dagomar Degroot

Further Reading:

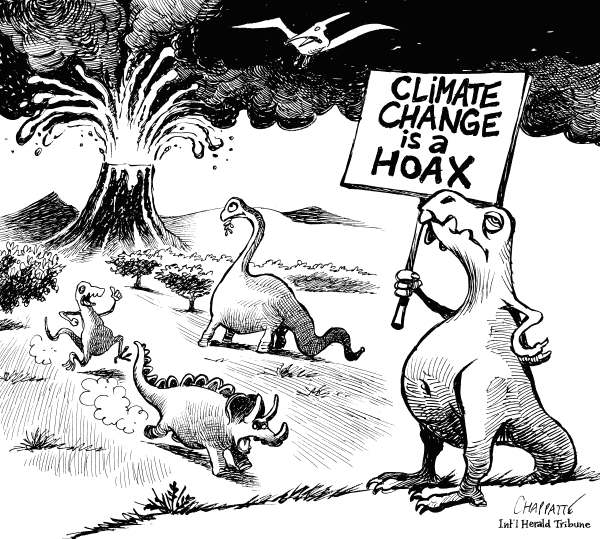

Behringer, Wolfgang. A Cultural History of Climate. Cambridge: Polity Press, 2010.

Brooke, John. Climate Change and the Course of Global History: A Rough Journey. Cambridge: Cambridge University Press, 2014.

Coates, Colin and Dagomar Degroot. “‘Les bois engendrent les frimas et les gelées:’ comprendre le climat en Nouvelle-France.” Revue d'histoire de l'Amérique française 68:3-4 (2015): 197-219.

Degroot, Dagomar. “‘Never such weather known in these seas:’ Climatic Fluctuations and the Anglo-Dutch Wars of the Seventeenth Century, 1652–1674.” Environment and History 20:2 (May 2014): 239-273.

Eddy, John A. “The Maunder Minimum.” Science 192:4245 (1976): 1189-1202.

Parker, Geoffrey. Global Crisis: War, Climate Change and Catastrophe in the Seventeenth Century. London: Yale University Press, 2013.

White, Sam. “Unpuzzling American Climate: New World Experience and the Foundations of a New Science.” Isis 106:3 (2015): 544-566.

This site explores interdisciplinary research into climate changes past, present, and future. Its articles express my conviction that diverse approaches, methodologies, and findings can yield the most accurate perspectives on complex problems. To contextualize modern warming, for example, we can reconstruct past climate change using models developed by computer scientists; tree rings or ice cores examined by climatologists; and documents interpreted by historians. We gain far more by using these sources in concert than we would by examining each in isolation.

Yet we must approach such interdisciplinarity with caution. The problems presented by climate change scepticism provide lessons for academics crossing disciplinary boundaries, and for policymakers, journalists, and laypeople interpreting interdisciplinary findings. In academia, climate change scepticism is usually interdisciplinary.
To attack climate change research, academic sceptics draw on credibility and, occasionally, scholarly methods accrued in relevant disciplines but often in unrelated fields. For example, in the late 1980s and 1990s, eminent physicists William Nierenberg and Fred Seitz were among the most outspoken critics of the global warming hypothesis. According to historians of science Naomi Oreskes and Erik Conway, the scepticism expressed by Nierenberg and Seitz grew, in part, from their belief that the ends of supporting unfettered capitalism justified the means of deliberately distorting scientific evidence (Oreskes and Conway, 190). However, another part – perhaps the most damaging – emerged from the conviction that their disciplinary backgrounds gave them special insight into climate change research.

Physicists – including one of our own editors – play a crucial role in modelling and interpreting climate change. Yet not all physicists are alike. Nierenberg was a renowned nuclear physicist who oversaw the development of military and industrial technologies for exploiting the sea. Seitz developed one of the first quantum theories of crystals, and contributed to major innovations in solid-state physics. Owing to their similar academic backgrounds, both Nierenberg and Seitz distrusted the lack of certainty in climate modelling, and both would have despised the occasionally fuzzy probabilities that must accompany climate history. Their scepticism effectively takes the most tenuous elements of climate science and argues that they are not science, because “real scientists” know better.

These attitudes highlight one of the most important but least appreciated aspects of interdisciplinary research: humility. When we step into another field, we step into another culture with characteristics that often have sound reasons for existing. Before challenging assumptions that inform a discipline, we should thoroughly learn the language, methods, and concepts of scholars in that discipline.
Only then can we appreciate their findings. Climate scepticism can also reveal that differences between subfields within academic disciplines can be more significant than distinctions between those disciplines. An environmental historian, for instance, can have much more in common with a climatologist than a postmodern cultural historian. Another example: Fred Singer is an atmospheric physicist who played an important role in developing the first weather satellites. However, he has little respect for the methods or conclusions of mainstream climate research. In 2006, Singer told the CBC’s The Fifth Estate that “it was warmer a thousand years ago than it is today. Vikings settled Greenland. Is that good or bad? I think it's good.” In an interview with The Daily Telegraph three years later, he acknowledged that “we are certainly putting more carbon dioxide in the atmosphere.” However, he argued that “there is no evidence that this high CO2 is making a detectable difference. It should in principle, however the atmosphere is very complicated and one cannot simply argue that just because CO2 is a greenhouse gas it causes warming.” Vikings did settle in Greenland, and the atmosphere is very complicated. However, global temperatures are warmer now than they were a millennium ago, and we can certainly trace relationships between modern warming and atmospheric concentrations of CO2. Singer may be an atmospheric physicist, but he is hardly qualified to offer any observations on the state of climate change research. Distinctions between different kinds of scientists – and different agendas among scientists – are always worth remembering when exploring the study of climate change. In a recent article, the popular website Reporting Climate Science described a disagreement between physical oceanographer Jochem Marotzke and meteorologist Piers Forster on the one hand, and “climate scientist” Nic Lewis on the other. 
Marotzke and Forster recently published an article in the journal Nature that confirmed the reliability of climate models for predicting climate change. Lewis accused them of circular reasoning and basic mathematical and statistical errors.

Lewis is, in fact, a retired financier with a degree in mathematics, and a minor in physics, from Cambridge University. Does that make him a climate scientist? He is certainly qualified to challenge mathematical approaches, and he does not deny the basic physics of anthropogenic climate change. For policymakers, journalists, and even scholars in different disciplines, it can be difficult to discern with what authority he speaks.

Similar problems are at work in another recent academic debate. This one unfolded in the pages of the Journal of Interdisciplinary History. As described on this website, two economists took issue with the notion of a “Little Ice Age” between the fourteenth and nineteenth centuries. They waded into an established field – climate history – and assailed one of its most important themes – the existence of a Little Ice Age – without appreciating the methods and findings of recent scholarship. For example, they used a graph of early modern British grain prices as a direct proxy for contemporary changes in temperature. But those grain prices were influenced by so many human variables that, for modern scholars, they can, at best, suggest only how one society responded to climate change. While attacking the rigour of climate reconstructions, the economists actually introduced data that is far less rigorous than climate historians use today.

Within academia, most instances of climate change scepticism are case studies of interdisciplinary approaches gone wrong. Interdisciplinarity can help us approach thorny issues in new ways, but such work should be collaborative. When adherents of one discipline or field dictate to those in another, the results are usually destructive.

~Dagomar Degroot

Note: the cover photo was taken by our editor, Benoit Lecavalier, during a recent trip to Greenland.
