
Prof. María Cristina García, Cornell University.

People displaced by extreme weather events and slower-developing environmental disasters are often called “climate refugees,” a term popularized by journalists and humanitarian advocates over the past decade. The term “refugee,” however, has a precise meaning in U.S. and international law, and that definition limits who can be admitted as refugees and asylees. Calling someone a “refugee” does not mean that they will be legally recognized as such and offered humanitarian protection.

The principal instruments of international refugee law are the 1951 United Nations Convention Relating to the Status of Refugees and its 1967 Protocol, which define a refugee as: “any person who owing to well-founded fear of being persecuted for reasons of race, religion, nationality, membership of a particular social group or political opinion, is outside the country of his nationality and is unable or, owing to such fear, is unwilling to avail himself of the protection of that country; or who, not having a nationality and being outside the country of his former habitual residence as a result of such events, is unable or, owing to such fear, is unwilling to return to it.”[i] This definition, on which current U.S. law is based, makes no reference to the “environment,” “climate,” or “natural disaster” that might allow consideration of those displaced by extreme weather events and/or climate change. In some regions of the world, other legal instruments have supplemented the U.N. Refugee Convention and Protocol, and these instruments offer more expansive definitions of refugee status that might extend protection to the environmentally displaced.

The Organization of African Unity’s “Convention Governing the Specific Aspects of Refugee Problems in Africa” (1969) names not only external aggression, occupation, and foreign domination as motivating factors for seeking refuge, but also “events seriously disturbing the public order.”[ii] In the Americas, the non-binding Cartagena Declaration on Refugees (1984), crafted in response to the wars in Central America, set regional standards for providing assistance not just to those displaced by civil and political unrest but also to those fleeing “circumstances which have seriously disturbed the public order.”[iii] The Organization of American States has also passed a series of resolutions offering member states additional guidance on how to respond to refugees, asylum seekers, stateless persons, and others in need of temporary or permanent protection. In Europe, European Union Council Directive 2004/83/EC identified the minimum standards for the qualification and status of refugees or those who might need “subsidiary protection.”[iv] Together, these regional and international conventions, protocols, and guidelines acknowledge that people are displaced for a wide range of reasons and that they deserve respect, compassion and, at the bare minimum, temporary accommodation.

Climate change has been absent from these discussions, perhaps because environmental disruptions such as hurricanes, earthquakes, and drought were long assumed to be part of the “natural” order of life, unlike war and civil unrest, which are considered extraordinary, man-made, and thus avoidable. The growing awareness that societies are accelerating climate change to life-threatening levels requires that countries reevaluate the populations they prioritize for assistance and adjust their immigration, refugee, and asylum policies accordingly. Under current U.S.
immigration law, those displaced by sudden-onset disasters and environmental degradation do not qualify for refugee status or asylum unless they can demonstrate that they have also been persecuted on account of race, religion, nationality, membership in a particular social group, or political opinion. This was not always the case: U.S. refugee policy once recognized that those displaced by “natural calamity” were vulnerable and deserved protection. The 1953 Refugee Relief Act, for example, defined a refugee as “any person in a country or area which is neither Communist nor Communist-dominated, who because of persecution, fear of persecution, natural calamity or military operations is out of his usual place of abode and unable to return thereto… and who is in urgent need of assistance for the essentials of life or for transportation.”[v] The 1965 Immigration Act (Hart-Celler Act) established a visa category for refugees that included persons “uprooted by catastrophic natural calamity as defined by the President who are unable to return to their usual place of abode.”[vi] Between 1965 and 1980, no refugees were admitted to the United States under the “catastrophic natural calamity” provision, but that did not stop legislators from opposing its inclusion in the refugee definition. Some argued that it was inappropriate to offer permanent resettlement to people who were only temporarily displaced, while others objected that it undermined the economic recovery of hard-hit countries by draining them of their most highly skilled citizens. The 1980 Refugee Act subsequently eliminated any reference to natural calamity or disaster, bringing U.S. law in line with the United Nations’ definition of refugee status.

In recent decades, scholars, advocates, and policymakers have called for a reevaluation of the refugee definition in order to grant temporary or permanent protection to a wider range of vulnerable populations, including those displaced by environmental conditions. At present, U.S. immigration law offers very few avenues of entry for so-called “climate refugees”: options are limited to Temporary Protected Status (TPS), Deferred Enforced Departure (DED), and humanitarian parole.

The Immigration Act of 1990 provided the statutory basis for TPS: according to the law, those unable to return to their countries of origin because of an ongoing armed conflict, environmental disaster, or “extraordinary and temporary conditions” can, under some conditions, remain and work in the United States until the Attorney General (after 2003, the Secretary of Homeland Security) determines that it is safe to return home.[vii] There is one catch: to qualify for TPS, one must already be physically present in the United States, whether as a tourist, student, business executive, contract worker, or even an unauthorized worker. TPS is granted for 6, 12, or 18 months at a time and is renewed by the Department of Homeland Security (DHS) if the qualifying conditions persist. TPS recipients do not qualify for state or federal welfare assistance, but they are allowed to live and work in the United States until federal authorities determine that it is safe to return. In the meantime, they can send much-needed remittances to their families and communities back home to assist in their recovery. TPS is one way, albeit an imperfect one, that the United States exercises its humanitarian obligations to those displaced by environmental disasters and climate change. It is based on the understanding that countries in crisis require time to recover; if nationals living abroad return in large numbers in a short period of time, they can have a destabilizing effect that disrupts that recovery.

Countries affected by disaster must meet certain conditions in order to qualify. First, the Secretary of Homeland Security must determine that there has been a substantial disruption in living conditions as a result of a natural or environmental disaster, making it impossible for a government to accommodate the return of its nationals. Second, the affected country must officially petition for its nationals to receive TPS (a requirement that is not imposed on countries affected by political violence). Even so, environmental disaster does not automatically guarantee that a country’s nationals will receive temporary protection: the U.S. federal government has total discretion, and the decision-making process is not immune to domestic politics.

Deferred Enforced Departure (DED) is another status available to those unable to return to hard-hit areas. DED offers a stay of removal as well as employment authorization, but it is most often used when TPS has expired. In such circumstances, the president has the discretionary (but rarely used) authority to allow nationals to remain in the United States in the interest of humanitarian or foreign policy, or until Congress can pass a law that offers a permanent accommodation.[viii] Humanitarian “parole” is yet another recourse for the environmentally displaced. The 1952 McCarran-Walter Act granted the Attorney General discretionary authority to grant temporary entry to individuals, on a case-by-case basis, if deemed in the national interest. Since 2002, humanitarian parole requests have been handled by U.S. Citizenship and Immigration Services (USCIS), and they are granted much more sparingly than during the Cold War. USCIS generally grants parole for only one year (renewable on a case-by-case basis).
[ix] Parole does not place an individual on a path to permanent residency or citizenship, nor does it make applicants eligible for welfare benefits; only occasionally are “parolees” granted the right to work, which would allow them to earn a livelihood and send remittances to communities hard hit by political and environmental disruptions.

TPS, DED, and humanitarian parole are only temporary accommodations for small, select groups of people. They are an inadequate response to the humanitarian crisis that will develop in the decades to come. Scientists forecast that in an era of unmitigated and accelerated climate change, sudden-onset disasters will become fiercer, exacerbating poverty, inequality, and weak governance, and forcing many more people to seek safe haven elsewhere, perhaps hundreds of millions over the next half-century. In the current political climate, it is hard to imagine that wealthier nations like the United States will open their doors to even a tiny fraction of these displaced peoples. Nonetheless, the more economically developed countries must do more to honor their international commitments to provide refuge, especially to those in developing areas who are suffering from environmental conditions they did not create. In the decades to come, as legislators try to mitigate the effects of climate change and help their populations become resilient, they must also share the burden of human displacement caused by the failure to act quickly enough.

María Cristina García, an Andrew Carnegie Fellow, is the Howard A. Newman Professor of American Studies in the Department of History at Cornell University. She is the author of several books on immigration, refugee, and asylum policy. She is currently completing a book on the environmental roots of refugee migrations in the Americas.

[i] United Nations, “Convention and Protocol Relating to the Status of Refugees,” 14, http://www.unhcr.org/en-us/3b66c2aa10.
The 1951 Convention limited the focus of assistance to European refugees in the aftermath of the Second World War. The 1967 Protocol removed these temporal and geographic restrictions. The United States did not sign the 1951 Convention, but it did sign the 1967 Protocol.

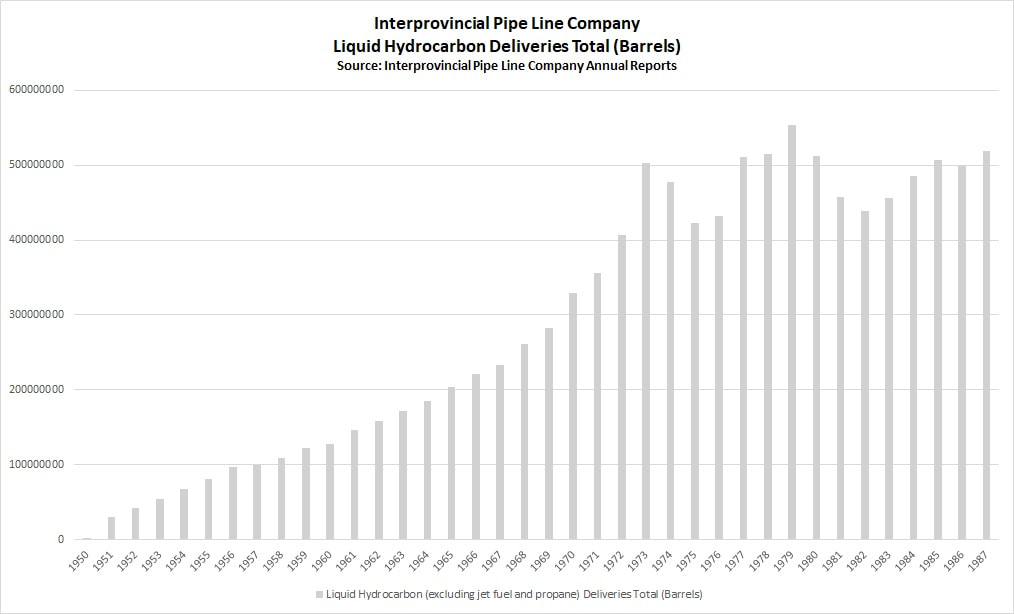

[ii] The OAU convention stated that the term refugee should also apply to “every person who, owing to external aggression, occupation, foreign domination or events seriously disturbing the public order in either part or the whole of his country of origin or nationality, is compelled to leave his place of habitual residence in order to seek refuge in another place outside his country of origin or nationality.” Organization of African Unity, “Convention Governing the Specific Aspects of Refugee Problems in Africa,” http://www.unhcr.org/en-us/about-us/background/45dc1a682/oau-convention-governing-specific-aspects-refugee-problems-africa-adopted.html, accessed September 15, 2017.

[iii] The Cartagena Declaration stated that “in addition to containing elements of the 1951 Convention…[the definition] includes among refugees, persons who have fled their country because their lives, safety or freedom have been threatened by generalized violence, foreign aggression, internal conflicts, massive violations of human rights or other circumstances which have seriously disturbed the public order.” “Cartagena Declaration on Refugees,” http://www.unhcr.org/en-us/about-us/background/45dc19084/cartagena-declaration-refugees-adopted-colloquium-international-protection.html.

[iv] European Union, “Council Directive 2004/83/EC,” April 29, 2004, https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A32004L0083, accessed March 20, 2018.

[v] Refugee Relief Act of 1953 (P.L. 83-203), https://www.law.cornell.edu/topn/refugee_relief_act_of_1953.

[vi] Immigration and Nationality Act of 1965 (P.L. 89-236), https://www.govinfo.gov/content/pkg/STATUTE-79/pdf/STATUTE-79-Pg911.pdf.

[vii] Immigration Act of 1990 (P.L. 101-649), https://www.congress.gov/bill/101st-congress/senate-bill/358.

[viii] USCIS, “Deferred Enforced Departure,” https://www.uscis.gov/humanitarian/temporary-protected-status/deferred-enforced-departure.
[ix] The humanitarian parole authority was first recognized in the 1952 Immigration Act (more popularly known as the McCarran-Walter Act). See http://library.uwb.edu/Static/USimmigration/1952_immigration_and_nationality_act.html. See also “§ 212.5 Parole of aliens into the United States,” https://www.uscis.gov/ilink/docView/SLB/HTML/SLB/0-0-0-1/0-0-0-11261/0-0-0-15905/0-0-0-16404.html.

Prof. Sean Kheraj, York University.

This is the fifth post in a collaborative series titled “Environmental Historians Debate: Can Nuclear Power Solve Climate Change?” hosted by the Network in Canadian History & Environment, the Climate History Network, and ActiveHistory.ca.

If nuclear power is to be used as a stop-gap or transitional technology for the decarbonization of industrial economies, what comes next? Energy history could offer new ways of imagining different energy futures. Current scholarship, unfortunately, mostly offers linear narratives of growth toward high-energy economies, leaving little room to imagine low-energy futures. As a result, energy historians have rarely presented plausible ideas for low-energy futures and instead dwell on apocalyptic visions of poverty and the loss of precious, ill-defined “standards of living.”

The fossil fuel-based energy systems that wealthy, industrialized nation-states developed in the nineteenth and twentieth centuries now threaten the habitability of the Earth for all people. Global warming lies at the heart of the debate over future energy transitions. While Nancy Langston makes a strong case for using nuclear power as a tool to address the immediate emergency of carbon pollution of the atmosphere, her arguments left me wondering what energy futures will look like after decarbonization.
Will industrialized economies continue with unconstrained growth in energy consumption, expand reliance on nuclear power, and press forward with new technological innovations to consume even more energy: thorium reactors, fusion reactors, dilithium crystals? Or will profligate energy consumers finally lift their heads from an empty trough and start to think about ways of living with less energy? Unfortunately, energy history has not been much help in imagining low-energy possibilities. For the past couple of years, I’ve been getting familiar with the field of energy history and, for the most part, it has been the story of more.[1]

Energy history is a field related to environmental history, but it also incorporates economic history, the history of capitalism, social history, cultural history, and gender history (and probably more). My particular interest is in the history of hydrocarbons, but I’ve tried to take a wide view of the field and consider scholarship that examines energy history in deeper historical contexts. Several scholars have written books that consider the history of human energy use in deep time. For example, in 1982, Rolf Peter Sieferle began his long view of energy history in The Subterranean Forest: Energy Systems and the Industrial Revolution by considering Paleolithic societies. Alfred Crosby’s Children of the Sun: A History of Humanity’s Unappeasable Appetite for Energy (2006) begins its survey of human energy history with the advent of anthropogenic fire and its use in cooking. Vaclav Smil goes back to so-called “pre-history” at the start of Energy and Civilization: A History (2017) to consider the origins of crop cultivation. In each of these surveys, energy historians track the general trend of growing energy use.
While they show some dips in consumption and global regional variation, the story they tell is precisely as Crosby puts it in his subtitle, a tale of humanity’s unappeasable appetite for greater and greater quantities of energy. The narrative of energy history in the scholarship is remarkably linear, verging on Malthusian. According to Smil: “Civilization’s advances can be seen as a quest for higher energy use required to produce increased food harvests, to mobilize a greater output and variety of materials, to produce more, and more diverse, goods, to enable higher mobility, and to create access to a virtually unlimited amount of information. These accomplishments have resulted in larger populations organized with greater social complexity into nation-states and supranational collectives, and enjoying a higher quality of life.” [2] Indeed, from a statistical point of view, it’s difficult not to reach the conclusion that humans have proceeded inexorably from one technological innovation to another, finding more ways of wrenching power from the Sun and Earth. The only interruptions along humanity’s path to high-energy civilization were war, famine, economic crisis, and environmental collapse. Canada’s relatively short energy history appears to tell a similar story. As Richard W. Unger wrote in The Otter~la loutre recently, “Canadians are among the greatest consumers of energy per person in the world.” And the history of energy consumption in Canada since Confederation shows steady growth and sudden acceleration with the advent of mass hydrocarbon consumption between the 1950s and 1970s. Steve Penfold’s analysis of Canadian liquid petroleum use focuses on this period of extraordinary, nearly uninterrupted growth in energy consumption. Only in 1979 did Canadian petroleum consumption momentarily dip in response to an economic recession. 
“What could have been an energy reckoning…,” Penfold writes, “ultimately confirmed the long history of rising demand.”[3] I’ve seen much of what Penfold finds in my own research on the history of oil pipeline development in Canada. Take, for instance, the Interprovincial pipeline system, Canada’s largest oil delivery system. For much of Canada’s “Great Acceleration,” the history of more couldn’t be clearer.

This view of energy history as the history of more informs some of the conclusions (and predictions) of energy historians. Crosby is perhaps the most optimistic about the potential of technological innovation to resolve what he describes as humanity’s unsustainable use of fossil fuels. In Crosby’s view, “the nuclear reactor waits at our elbow like a superb butler.”[4] For the most part, he dismisses energy conservation or radical reductions in energy consumption as alternatives to modern energy systems, which he admits are “new, abnormal, and unsustainable.”[5] Instead, he foresees yet another technological revolution as the pathway forward, carrying on humanity’s seemingly endless growth in energy use.

Energy historians, much like historians of the Anthropocene, have a habit of generalizing humanity in their analysis of environmental change. As I wrote last year in The Otter~la loutre, “To understand the history of Canada’s Anthropocene, we must be able to explain who exactly constitutes the ‘anthropos.’” Energy historians might consider doing the same. The history of human energy use appears to be a story of more when human energy use is considered in an undifferentiated manner. The pace of energy consumption in Canada, for instance, might look different when comparing the rich and the poor, settlers and Indigenous people, and rural and urban Canadians. Globally, energy histories tell stories beyond the history of more, including histories of low-energy societies and histories of energy decline.
Most global energy histories focus on industrialized societies and say little about developing nations and the persistence of low-energy, subsistence economies. If Smil is correct that “higher energy use by itself does not guarantee anything except greater environmental burdens,” then future decisions about energy use should probably consider lower-energy options.[6] Transitioning away from burning fossil fuels by using nuclear power may alleviate the immediate existential crisis of global warming, but confronting the environmental implications of high-energy societies may be the bigger challenge. To address that challenge, we may need to look back at histories of less.

Sean Kheraj is the director of the Network in Canadian History and Environment. He’s an associate professor in the Department of History at York University. His research and teaching focus on environmental and Canadian history. He is also the host and producer of Nature’s Past, NiCHE’s audio podcast series, and he blogs at http://seankheraj.com.

[1] I’m borrowing from Steve Penfold’s pointed summary of the history of gasoline consumption in Canada: “Indeed, at one level of approximation, you could reduce the entire history of Canadian gasoline to a single keyword: more.” See Steve Penfold, “Petroleum Liquids,” in Powering Up Canada: A History of Power, Fuel, and Energy from 1600, ed. R. W. Sandwell (Montreal: McGill-Queen’s University Press, 2016), 277.

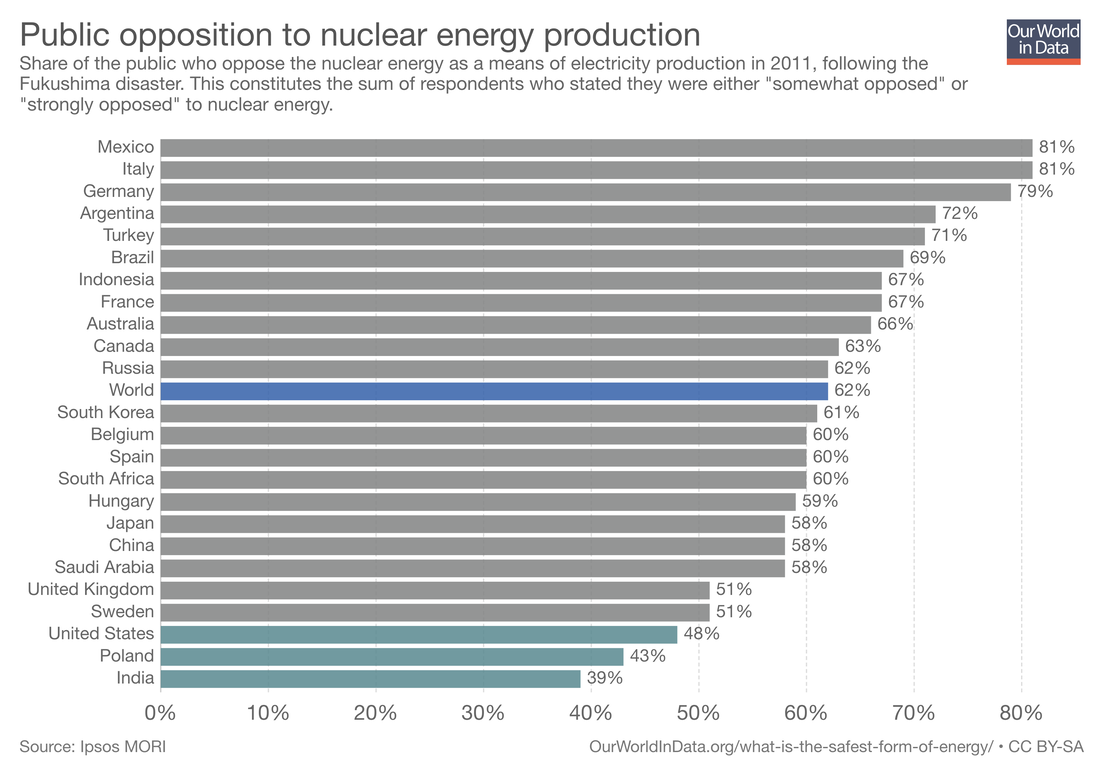

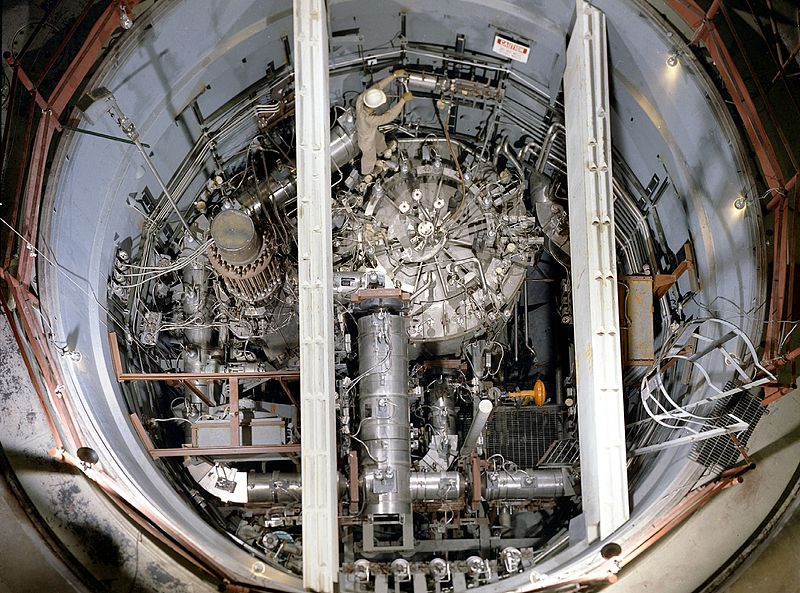

[2] Vaclav Smil, Energy and Civilization: A History (Cambridge: MIT Press, 2017), 385.

[3] Penfold, “Petroleum Liquids,” 278.

[4] Alfred W. Crosby, Children of the Sun: A History of Humanity’s Unappeasable Appetite for Energy (New York: W.W. Norton, 2006), 126.

[5] Ibid., 164.

[6] Smil, Energy and Civilization, 439.

Prof. Toshihiro Higuchi, Georgetown University.

This is the fifth post in a collaborative series titled “Environmental Historians Debate: Can Nuclear Power Solve Climate Change?” hosted by the Network in Canadian History & Environment, the Climate History Network, and ActiveHistory.ca.

Nuclear power is back, riding on growing fears of catastrophic climate change lurking around the corner. The looming climate crisis has rekindled heated debate over the advantages and disadvantages of nuclear power. However, advocates and opponents alike tend to overlook or downplay a unique risk that sets atomic energy apart from all other energy sources: the proliferation of nuclear weapons.

Despite the lasting tragedy of the 2011 Fukushima disaster, the elusive goal of nuclear safety, and the stalled progress in radioactive waste disposal, nuclear power has once again captivated the world as a low-carbon energy solution. According to the latest IPCC report, released in October 2018, most of the 89 available pathways to limiting warming to 1.5 °C above pre-industrial levels see a larger role for nuclear power in the future. The median value of global nuclear electricity generation across these scenarios increases from 10.84 to 22.64 exajoules (EJ) by 2050. The global nuclear industry, after many setbacks in selling its products, has seized on the climate policy community’s renewed interest in atomic energy. The World Nuclear Association has recently launched an initiative called the Harmony Programme, which has set an ambitious goal of 25% of global electricity supplied by nuclear power in 2050.
Even some critics agree that nuclear power should be part of a future clean energy mix. The Union of Concerned Scientists, a U.S.-based science advocacy group and proponent of stronger nuclear regulations, recently published an op-ed urging the United States to “[k]eep safely operating nuclear plants running until they can be replaced by other low-carbon technologies.” But the justified focus on energy production vis-à-vis climate change obscures the debate that until recently had defined the nuclear issue: weapons proliferation.

It is often said that the global nuclear regulatory regime, grounded in the 1968 Nuclear Non-Proliferation Treaty (NPT) and the International Atomic Energy Agency’s (IAEA) safeguards system, has proven successful as a check against the diversion of fissile materials from peaceful to military uses. There is indeed good reason for this optimism. Since 1968, only three countries (India, Pakistan, and North Korea) have publicly declared possession of nuclear weapons, a far cry from the “15 or 20 or 25 nations” that President Kennedy famously predicted would go nuclear by the 1970s. Contrary to the impression created by the biological metaphor, as political scientist Benoît Pelopidas has pointed out, the “proliferation” of nuclear weapons is also neither inevitable nor irreversible. South Africa, a non-NPT country that had secretly developed nuclear weapons by the 1980s, voluntarily dismantled its arsenal following the end of apartheid. Belarus, Kazakhstan, and Ukraine, which inherited nuclear warheads following the collapse of the Soviet Union in 1991, also agreed to transfer them to Russia.
Moreover, despite all the talk about the threat of nuclear terrorism, experts note a multitude of obstacles, both technical and political, that non-state actors would face in stealing or assembling workable atomic devices.[1] Although many countries and terrorists are known to have harbored nuclear ambitions at one point or another in the past, and some undoubtedly still do today, we should not exaggerate the likelihood of nuclear weapons acquisition by new countries and violent non-state actors or the threat that it might pose to international security.

The real dangers of nuclear proliferation, however, lie elsewhere. The NPT is supposed to be a bargain between the nuclear haves and have-nots. The non-nuclear countries agreed not to acquire or manufacture nuclear weapons in exchange for a pledge made by all parties, including the five nuclear-weapon states designated by the NPT (the United States, the Soviet Union/Russia, the United Kingdom, France, and China), to “pursue negotiations in good faith on effective measures relating to cessation of the nuclear arms race at an early date and to nuclear disarmament.” The nuclear-armed countries, however, have consistently failed to keep their end of the deal.[2] Meanwhile, the United States has repeatedly used or threatened to use military force to disarm hostile countries suspected of having clandestine nuclear weapons programs. Iraq is the most famous example, but as discussed below, U.S. officials also seriously considered preemptive attacks against nuclear facilities in China and North Korea. The United States is not alone in its penchant for unilateral military action. Israel, a U.S. ally widely believed to possess nuclear weapons, has also carried out surprise airstrikes that destroyed an Iraqi nuclear reactor in 1981 and a suspected Syrian nuclear installation in 2007.[3] The alleged “success” of the repeated use and threat of force in stemming the tide of nuclear proliferation, however, comes at a high cost.
Such action may not only deepen the insecurity of the threatened nation and make it all the more determined to develop its nuclear capabilities as a deterrent, but it also entails a serious risk of unintended escalation into a large-scale conflict. Anyone who tries to weigh the value of nuclear power in coping with the climate crisis must therefore take stock of the history of militarized counter-proliferation policy, which reflects and reinforces what historian Shane J. Maddock has called “nuclear apartheid,” a hierarchy of nations grounded in the power inequality between the nuclear haves and have-nots.[4]

In October 1964, the People’s Republic of China successfully tested an atomic bomb, becoming the fifth country to demonstrate nuclear weapons capabilities. The United States had acquiesced in China’s nuclear status by the time it signed the NPT in 1968, which formally defined a nuclear-weapon state as a country that had manufactured and detonated a nuclear device prior to January 1, 1967. Washington’s decision to tolerate a nuclear China, however, did not come without resistance. In fact, as historian Francis J. Gavin has noted, there is a striking parallel between the U.S. perception of China during the 1960s and that of a “rogue state” today: China had already clashed with the United States during the Korean War, twice shelled the outlying islands of Taiwan, and invaded India over a disputed border; it strongly disputed the Soviet Union’s leadership of the Communist world and aggressively supported revolutionary forces around the world; and it consolidated one-party rule and embarked on a series of disastrous political, economic, and social campaigns, most notably the Great Leap Forward and the Cultural Revolution.[5] Operating from a Cold War mindset, and with little information shedding light on the complexity of China’s foreign and domestic policies, senior U.S.
officials feared that China’s nuclear weapons program would pose a serious threat to the stability of East Asia and to the international effort to prevent the further spread of nuclear weapons around the world.[6] It is important to note that not all U.S. officials held such a grim view of China’s nuclear ambitions and their consequences. Some believed that a nuclear-armed China would act rather cautiously, and Presidents John F. Kennedy and Lyndon B. Johnson both tried to induce China by diplomatic means to abandon its nuclear program. As historians William Burr and Jeffrey T. Richelson have demonstrated, however, the Kennedy and Johnson administrations also developed contingency plans to disarm China by force. In a memo written in April 1963, the Joint Chiefs of Staff discussed a variety of military options, ranging from covert operations to the use of a tactical nuclear weapon, to coerce China into signing a test ban treaty.[7] While the military was skeptical about the effectiveness of unilateral action and cautious about the risk of retaliation and escalation, Kennedy and some of his senior advisers remained keen on military and covert operations. For instance, the President showed interest in enlisting the Republic of China on Taiwan as a proxy to launch a commando raid against Chinese nuclear installations.[8] William Foster, director of the Arms Control and Disarmament Agency, later recalled that Kennedy had been eager to consider the possibility of an airstrike in coordination with, or with the tacit approval of, the Soviet Union.[9] The idea of an air raid resurfaced in September 1964, on the eve of the Chinese test. Although Johnson and his advisers ultimately decided against the proposal, all agreed that, “in case of military hostilities,” the United States should consider “the possibility of an appropriate military action against Chinese nuclear facilities.”[10] Despite all the talk about the use of force, the U.S.
government ultimately refrained from taking such drastic action. The Soviet Union refused to discuss the possibility of joint military intervention, and the political costs and military risks of an unprovoked attack were too high. The failure to stop China’s nuclear weapons program, Gavin has pointed out, precipitated a major shift in U.S. nuclear policy toward creating a global nonproliferation regime with the NPT as its keystone.[11] However, these “proliferation lessons from the 1960s,” as Gavin has called them, did not change the fundamental fact that the United States was willing to contemplate military action, carried out unilaterally if necessary, to prevent hostile countries from acquiring nuclear weapons. The NPT became a handy justification for such measures. This was abundantly clear when North Korea triggered another nuclear crisis thirty years later.

In March 1993, North Korea startled the world by announcing its decision to withdraw from the NPT. At issue was the IAEA’s demand for special inspections at nuclear facilities in Yongbyon to account for the amount of plutonium generated in an earlier uninspected refueling operation. The tension briefly subsided when, after bilateral talks with the United States, Pyongyang suspended the process of pulling out of the NPT and agreed to allow inspections at a number of installations. In March 1994, however, North Korea suddenly reversed course, blocking IAEA inspectors from conducting activities necessary to complete their mission. The United States responded by declaring its intention to ask the United Nations Security Council to impose economic sanctions on North Korea. As the confrontation between the United States and North Korea escalated, President Bill Clinton decided to take all necessary measures to coerce Pyongyang into full compliance with IAEA safeguards.
In his memoirs, Clinton wrote that “I was determined to prevent North Korea from developing a nuclear arsenal, even at the risk of war.”[12] To leave no room for misunderstanding about his resolve, Clinton let his senior advisers and military commanders openly discuss contingency plans for military action. On February 6, 1994, The New York Times reported on updated U.S. defense plans for South Korea in the event of a North Korean attack, describing a newly added option for a counteroffensive to seize Pyongyang and overthrow the regime of Kim Il Sung.[13] Meanwhile, Secretary of Defense William Perry talked tough, telling the press that “we would not rule out a preemptive military strike.”[14] The talk about the use of force against North Korea was not an idle threat. In his memoirs, Perry has described contingency planning for military action. In May 1994, when North Korea began to remove the spent fuel rods containing plutonium from its reactor, the Defense Secretary ordered John Shalikashvili (chairman of the Joint Chiefs of Staff) and Gary Luck (commander of U.S. military forces in South Korea) to prepare a course of action for “a ‘surgical’ strike by cruise missiles on the reprocessing facility at Yongbyon.”[15] Three former U.S. officials, Joel S. Wit, Daniel B. Poneman, and Robert L. Gallucci, have also confirmed that the strike plan was discussed at the highest level of the Clinton administration.
On May 19, Perry, Shalikashvili, and Luck briefed Clinton and his aides on the proposal for an air raid against the Yongbyon facilities, asserting that it would “set the North Korean nuclear program back by years.” Perry, however, reportedly stressed the “downside risk,” namely that “this action would certainly spark a violent reaction, perhaps even a general war.”[16] Clinton recalled that a “sobering estimate of the staggering losses both sides would suffer if war broke out” gave him pause.[17] As Perry has noted, the military option was still “‘on the table’, but very far back on the table.”[18] The self-restraint of the Clinton administration and its commitment to a diplomatic solution have earned praise from many scholars and pundits, in sharp contrast to George W. Bush’s aggressive unilateralism. But the fact remains that Clinton and his aides considered the threat of preventive military action permissible, even essential, to pressure North Korea into refraining from any suspicious nuclear activities. And their willingness to go to the brink of actual conflict created a tense policy environment that left little room for quiet diplomacy aimed at compromise while drastically raising the risk of accidents and miscalculations. In this sense, the “peaceful” conclusion of the first North Korean nuclear crisis was a Pyrrhic victory, reinforcing the belief widely held in the U.S. policy community that the United States must be prepared to use its military force unilaterally to uphold the global nonproliferation regime. It is thus no coincidence that, even after the disastrous outcomes of the Iraq War fought in the name of nuclear nonproliferation, the U.S. government continues to play a dangerous game of brinkmanship with hostile powers suspected of clandestinely developing nuclear weapons. What, then, does the history of U.S. counter-proliferation policy mean for the future use of nuclear power to combat climate change?
An answer, I believe, lies in an accelerating shift in the nuclear geography. The New Policies Scenario of the International Energy Agency’s World Energy Outlook 2018, a global energy forecast based on policies and targets announced by governments, projects that between 2017 and 2040 demand for nuclear power will decrease in advanced economies by 60 Mtoe (million tonnes of oil equivalent), whereas it will increase in developing economies by 344 Mtoe. Of the approximately 30 countries that are currently considering, planning, or starting nuclear power programs, many are post-colonial and post-socialist countries located in regions, including Central Asia, Eastern Europe, the Middle East, and South and Southeast Asia, where the United States is competing with other major and regional powers for influence. Added to this geopolitical layer is the nuclear supply game. While many Western conglomerates have recently decided to exit nuclear exports due to swelling construction costs, Russian and Chinese state-owned companies have aggressively sold nuclear power plants to emerging countries, a move backed by their governments as part of their global strategies. Although Russia and China have generally cooperated with the United States in controlling nuclear exports, the recent U.S. withdrawal from the Iran nuclear deal has pitted Washington against Moscow and Beijing over the latter’s continued negotiations with Iran for nuclear cooperation. Given the growing tension between the United States on the one hand and Russia and China on the other, the expansion of civilian nuclear programs in key strategic regions is likely to be fraught with serious risks of international crisis and even armed conflict.
The fundamental solution to the nuclear dilemma in a changing climate is simple: carry out the pledge made by all parties to the NPT, that is, to “pursue negotiations in good faith on effective measures relating to cessation of the nuclear arms race at an early date and to nuclear disarmament, and on a treaty on general and complete disarmament under strict and effective international control” (Article VI). A breakthrough toward this goal came in July 2017 when the United Nations General Assembly voted to adopt the first legally binding international agreement prohibiting nuclear weapons. All of the nuclear weapon states and most of their allies, however, refused to participate in the treaty negotiations. Recently, Christopher Ashley Ford, assistant secretary of state for nonproliferation, has called the treaty a “well-intended mistake,” insisting that a “better way” was to work within the NPT framework while taking steps to improve “the actual geopolitical conditions that countries face in the world.” If the IPCC is correct in its claim that we have only a little more than a decade to stop potentially catastrophic climate change, it is unlikely that the “pragmatic, conditions-focused program” described by Ford will significantly reduce the risks of nuclear proliferation and militarized counter-proliferation in time. If so, then we must realize that the promotion of civilian nuclear power in a world of nuclear apartheid – a world in which the United States and its allies do not hesitate to use force to disarm and topple a hostile regime with nuclear ambitions – may have consequences for human society no less catastrophic than climate change.

Toshihiro Higuchi is an assistant professor in the Edmund A. Walsh School of Foreign Service at Georgetown University. He is a historian of U.S. foreign relations in the 19th and 20th centuries. His research interests lie in science and politics in managing the trans-border and global environment.
[1] John Mueller, Atomic Obsession: Nuclear Alarmism from Hiroshima to Al-Qaeda (Oxford; New York: Oxford University Press, 2010), 161-179.

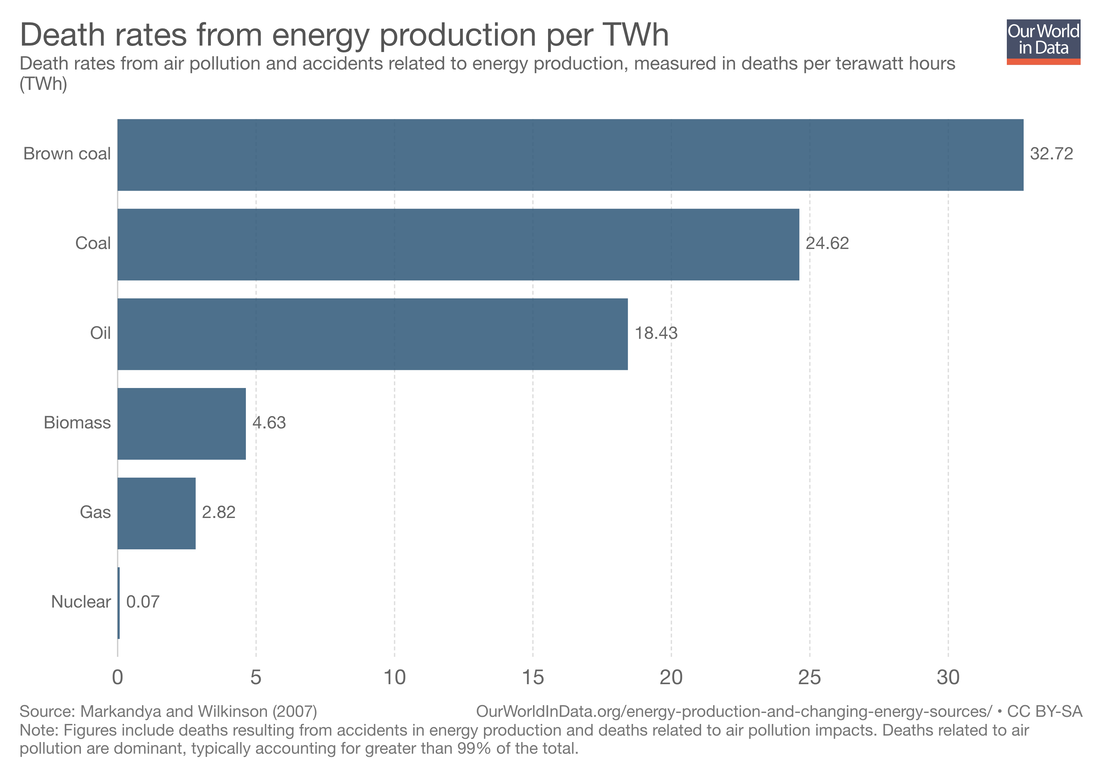

[2] Shane J. Maddock, Nuclear Apartheid: The Quest for American Atomic Supremacy from World War II to the Present (Chapel Hill: University of North Carolina Press, 2010), 1-2.
[3] Dan Reiter, “Preventive Attacks against Nuclear Programs and the ‘Success’ at Osiraq,” Nonproliferation Review 12, no. 2 (2005): 355-371; Leonard S. Spector and Avner Cohen, “Israel’s Airstrike on Syria’s Reactor: Implications for the Nonproliferation Regime,” Arms Control Today 38, no. 6 (2008): 15-21.
[4] Shane J. Maddock, Nuclear Apartheid: The Quest for American Atomic Supremacy from World War II to the Present (Chapel Hill: University of North Carolina Press, 2010), 1-2.
[5] Francis J. Gavin, Nuclear Statecraft: History and Strategy in America’s Atomic Age (Ithaca, NY: Cornell University Press, 2012), 75-76.
[6] William Burr and Jeffrey T. Richelson, “Whether to ‘Strangle the Baby in the Cradle’: The United States and the Chinese Nuclear Program, 1960-64,” International Security 25, no. 3 (2000/01): 55, 61-62. Also see Noam Kochavi, A Conflict Perpetuated: China Policy during the Kennedy Years (Westport, CT: Praeger, 2002).
[7] Ibid., 68-69.
[8] Ibid., 73.
[9] Ibid., 54.
[10] Document 49, Memorandum for the Record, September 15, 1964, in Foreign Relations of the United States, 1964-1968, vol. 30 (Washington: U.S.G.P.O., 1998).
[11] Gavin, 75-103.
[12] Bill Clinton, My Life (New York: Vintage, 2005), 591.
[13] Michael R. Gordon, “North Korea’s Huge Military Spurs New Strategy in South,” New York Times, February 6, 1994, 1.
[14] Clinton, My Life, 591.
[15] William J. Perry, My Journey at the Nuclear Brink (Stanford, CA: Stanford University Press, 2015), 106.
[16] Joel S. Wit, Daniel B. Poneman, and Robert L. Gallucci, Going Critical: The First North Korean Nuclear Crisis (Washington: Brookings Institution Press, 2003), 180.
[17] Clinton, My Life, 603.
[18] Perry, My Journey at the Nuclear Brink, 106.

Dr. Robynne Mellor.
This is the third post in a collaborative series titled “Environmental Historians Debate: Can Nuclear Power Solve Climate Change?” hosted by the Network in Canadian History & Environment, the Climate History Network, and ActiveHistory.ca. Shortly before uranium miner Gus Frobel died of lung cancer in 1978 he said, “This is reality. If we want energy, coal or uranium, lives will be lost. And I think society wants energy and they will find men willing to go into coal or uranium.”[1] Frobel understood that economists and governments had crunched the numbers. They had calculated how many miners died in coal and uranium production, respectively, to produce a given amount of energy. They had rationally worked out that giving up Frobel’s life was worth it. I have come across these tables in archives. They lay out in columns the number of deaths to expect per megawatt-year of energy produced. They weigh the ratio of deaths in uranium mines to deaths in coal mines. They coolly walk through the methodology behind their conclusions. These numbers will show you that fewer people died in uranium mines to produce a given amount of energy. But the numbers do not include the pages and pages I have read of people remembering spouses, parents, siblings, and children who died in their 30s, 40s, 50s, and so on. The numbers do not include details of these miners’ hobbies or snippets of their poetry; they do not reveal the particulars of miners’ slow and painful wasting away. Miners are much easier to read about as death statistics. The erasure of these people trickles into debates about nuclear energy today. Any argument that highlights the dangers of coal mining but entirely ignores the plight of uranium miners rests on this reasoning. Claims that coal is riskier are based on the reduction of lives to ratios. If we are going to make these arguments, we must first fully acknowledge what we are doing.
We must be okay with what Gus Frobel said and meant: that someone is going to have to assume the risk of energy production and we are just choosing whom. We must realize that it is no accident that these Cold War calculations permeate our discourse today, and consider what that means moving forward. Promoters of nuclear energy have always tapped into fears about the environment in order to get us to stop worrying and learn to love the power plant. The awesome power of the atom announced itself to the world in a double flash of death and destruction when the United States dropped nuclear bombs on Hiroshima and Nagasaki in August 1945. Following the end of World War II, growing tensions between the United States and the Soviet Union and the consequent Cold War helped spur a proliferation of nuclear weapons production. As nuclear technology became more important and sought after, governments around the world fought against nuclear energy’s devastating first impression, which was difficult to dislodge from the minds of the public. From the earliest days, in order to combat the atom’s fearsome reputation and put a more positive spin on things, policymakers began pushing its potential peaceful applications. Nuclear technology and the environment were intertwined in many complex and mutually reinforcing ways. From as early as the 1940s, as historian Angela Creager has shown, the US Atomic Energy Commission used the potential ecological and biological applications of radioisotopes as proof of the atom’s promising, non-military prospects. By the 1950s, many hailed nuclear power as a way to escape resource constraints, underlining the comparatively small amount of uranium needed to produce the same amount of energy as coal. Using uranium was a way to make oil and coal last longer. In the 1960s, as the popular environmental movement grew, nuclear boosters appealed to the public’s concern for the planet by emphasizing the clean-burning qualities of nuclear energy.
Environmentalism spread around the world, with environmental protection slowly being enshrined in law in several countries. Environmental concern and protection also became an important part of the Cold War battle for hearts and minds. Nuclear advocates successfully appealed to environmentalist sentiments by sidestepping certain problems, such as the intractable waste that the nuclear cycle produced, and emphasizing others, namely, the way it did not pollute the air. The main arguments of Cold War-era nuclear champions live on to this day. For many pro-nuclear environmentalists, who found these arguments appealing, the reasons to support nuclear energy were and continue to be: less uranium than coal is needed to produce the same amount of energy, nuclear energy is clean burning, radiation is “natural” and not something to be feared, and using nuclear energy will give us time to figure out other solutions to the energy crisis, which was once understood as a fossil fuel shortage and is now framed more in terms of global warming. In broad strokes, then, these arguments are a Cold War holdover, and so are the anachronistic blind spots that accompany them. They portray nuclear power production as a single snapshot of a highly complex cycle. Nuclear is framed as “clean burning” for a reason: the period when it is burning is the only point when it can be considered clean. This reasoning made more sense when first promulgated because a hubris accompanied nuclear technology, and part of this hubris was the assumption that all of the issues arising from nuclear technology could and would be solved. Though that confidence is long gone in general, it still lurks as an assumption that undergirds the argument for nuclear energy. One of the biggest problems that we were once sure we could solve is nuclear waste disposal. This problem has not been solved. Instead, it grows more complex over time as its complications continue to multiply.
Nuclear waste storage is still a stopgap measure, and most waste is still held on or near the surface in various locations, usually near where it is produced. The best long-term solution is a deep geological repository, but no such storage facilities for high-level radioactive waste exist yet. Several countries that have tried to build permanent repositories have faced both political and geological obstacles, such as the Yucca Mountain project in the United States, which the government defunded in 2012. Finland’s Onkalo repository is the most promising site. Many people who pay attention to these issues commend the Finnish government for successfully communicating with, and receiving consent from, the local community. But questions remain about why and how the people alive today can make decisions for people who will live on that land for the next 100,000 years. This timescale opens up various other questions about how to communicate risk across millennia. Either way, we will not know whether Onkalo is ultimately successful for a very long time, while the so-called kitty litter accident at the Waste Isolation Pilot Plant in New Mexico, USA, where a drum of radioactive waste burst in 2014, hints at how easily things can go wrong and defy careful models of risk. Promoters continue to use language that clouds this issue. Words such as “storage” and “disposal” obscure the inadequacies tied up in these so-called solutions. The truth is, disposal amounts to trying to keep waste from migrating by putting it somewhere and then trying to model the movements of the planet thousands of years into the future to make sure it stays where we put it. It is a catch-22. By ignoring the disposal problem, we kick the same can down the road that was kicked to us. By developing a disposal system, we just kick it really, really far into the future.
Either way, an antiquated optimism still persists in the belief that, one way or another, we will work it out, or have successfully planned for every contingency with our current solutions. Even if they do so inadequately, advocates of nuclear power often do acknowledge the back-end of the nuclear cycle. They usually do so only to dismiss it, but at least it is addressed. By contrast, they entirely ignore the front-end of the cycle. This tendency is particularly strange because when uranium is judged against fossil fuels, the ways that coal and oil are extracted enter the conversation, while uranium is rarely considered in such terms. We think of coal and oil as things that come from the earth, but uranium is also mined, and its processing chain is just as complex as those of the fuels we seek to replace with it. Discussions of nuclear energy hardly ever mention uranium mining, possibly because uranium mining increasingly occurs in marginalized landscapes that are out of sight and out of mind (northern Saskatchewan in Canada and Kazakhstan are currently the biggest producers). But even for those who do pay attention to uranium mining, the problems associated with it are officially understood as something we have “figured out.” The prevailing narrative is that, yes, many uranium miners died from lung cancer linked to their work in uranium mines, and yes, a lot of waste was produced and then inadequately disposed of due to the pressures and expediencies of the Cold War nuclear arms race. But when officials acknowledged these problems, they implemented regulations and fixed them. It follows that, because there is no longer a nuclear arms race, and because health and environmental authorities understand and accept the risks associated with mining activities, they have appropriately addressed and mitigated the problems linked to uranium production.
Moreover, nuclear power generation, because it is separate from the arms race and the nefarious human radiation experiments that accompanied it, is said to be safer and better for miners and for the communities that surround mines. Some aspects of this narrative are true. Uranium miners around the world did labor with few protections through at least the late 1960s, after which conditions improved moderately in some places. Several governments introduced and standardized maximum exposure levels for radon progeny (the decay products of uranium that cause cancer among miners). More mines had ventilation, monitoring increased, and many places banned miners from smoking underground. By the 1970s and 1980s, many countries considered the health problem solved. The issue with this portrayal is that the effectiveness of these regulations is unclear. Allowing a few years for the implementation of regulations, most countries did not have mines at regulated exposure levels until at least the mid-1970s. If we then allow for a latency period of at least fifteen years for lung cancer, which is the accepted minimum even with very high exposures, then lung cancer would not begin to show until, at the very earliest, the late 1980s or early 1990s. By this period, however, the uranium-mining industry was collapsing. The Three Mile Island accident in 1979, the Chernobyl accident in 1986, and the end of the Cold War arms race meant that plans for nuclear energy stalled and the demand for uranium plummeted. The uranium that did continue to be produced came from new mining regions and new cohorts of workers, or it affected people and places that the public and media ignored, or technology shifted so that fewer people faced the risks of underground uranium mining. There is little information about whether and how the risks miners faced changed. There is also a dearth of information about how these post-regulation miners compare to their pre-regulation counterparts.
One preliminary examination of Canadian uranium miners, however, shows that miners who began work after 1970 had a similarly increased risk of mortality from lung cancer as those who began work in earlier decades. This suggests either that radon progeny reduction was ineffective and radon progeny levels in mines were misreported, or that something about the health risks in mines is not yet understood. There is another relatively well-known narrative about uranium mining that some commentators point to as something we have figured out and corrected. Due to the extremely effective activism of the Navajo Nation, beginning in the 1970s and continuing to the present, many people are aware of the hardships Navajo uranium miners faced and, to a lesser degree, the continued legacy of abandoned mines and tailings piles with which they have to contend. High-profile advocates for the Navajo, such as former Secretary of the Interior Stewart Udall, and several journalistic and scholarly books on Navajos and uranium mining have added to this awareness. Few people realize when pointing to the Navajo case that there is still a lot of confusion surrounding the long-term effects of uranium mining on Navajo land. It is an ongoing problem with unsatisfactory answers. Moreover, even though Navajo activists were adept at attracting attention to the problems they faced, many other uranium-mining communities cannot, do not want to, or have not been able to garner the same attention. Uranium mining happened and continues to happen around the world, even though its health risks are poorly understood. It is changing human bodies and landscapes to this day and affecting thousands of miners and communities. Those who work in mines are still making the trade-off between the employment the mine offers on the one hand and a higher risk of lung cancer on the other.
The environmental effects of uranium mining are also poorly understood and inadequately managed with a view to the long term. When mines are in operation, the waste from uranium mills, called tailings, is usually stored in wet ponds or dry piles. Those who operate uranium mills try to keep these tailings from moving, and government authorities often regulate these efforts, but tailings still seep into water, spread into soil, and migrate through food chains. These problems relate to mines and mills in operation, but companies and governments also face several problems with regard to mines and mills that are no longer in operation. The production of uranium has left landscapes dotted with abandoned, neglected mines, as well as millions of tons of radioactive and toxic tailings. There are no good numbers for worldwide uranium tailings, but the International Atomic Energy Agency has estimated that the United States alone has produced 220 million tons of mill tailings and 220 million tons of uranium mine wastes. Waste from uranium production is managed in similar ways around the world. In the same euphemistic language used for nuclear waste coming out of the back-end of the nuclear cycle, tailings from uranium mills are often said to be “disposed” of. What disposal usually means is gathering tailings in one area, creating some kind of barrier to prevent erosion (vegetation, water, or rock), and then monitoring the tailings indefinitely to ensure they do not move. The question that follows is whether these tailings are harmful, and the truly unsatisfactory answer is that we do not know. Studies that consider how tailings affect the health of surrounding communities are scarce, while those that do exist are conflicting, inconclusive, and often problematic.
While some studies, with a particular focus on cancer and death, argue that there are no increased illnesses linked to living in former uranium-mining areas, others have connected wastes from uranium production to various ailments, including kidney disease, hypertension, diabetes, and compromised immune system function. Today, half of all uranium production around the world uses in situ leaching, or in situ recovery, to extract uranium. Basically, uranium companies inject an oxidizing agent into an ore body, dissolve the uranium, and then pump the solution out and mill it without first having to mine the ore. The official line of thinking is that this form of extraction has negligible environmental impacts. It certainly reduces risks for miners, but it is unlikely that it has no effect on the environment. The environmentalist argument for nuclear energy, particularly the clean-burning component, is very appealing at a time when our biggest concern is climate change. Still, nuclear power is a band-aid technofix with many unknowns. The discussion surrounding nuclear energy has never fully grappled with the entire scope of the nuclear cycle, nor has it addressed the unique aspects of producing energy from metals, which have no parallels with fossil fuels. Making an argument about nuclear energy means examining all its risks in comparison with those of fossil fuels, and then coming to terms with the wealth of unknowns. It also means remembering and keeping in mind the bodies and landscapes that make this option possible. To be a nuclear power advocate, especially as an environmentalist, one must also be an advocate for the safety of all nuclear workers. The problems uranium miners and uranium-mining communities faced were never fully resolved, and they are not fully understood.
To promote nuclear power means to pay attention to the people and places that produce uranium and to fight to make sure they receive the protections they deserve for helping us carve our way out of this current problem. Robynne Mellor received her PhD in environmental history from Georgetown University, and she studies the intersection of the environment and the Cold War. Her research focuses on the environmental history of uranium mining in the United States, Canada, and the Soviet Union. She tweets at @RobynneMellor. [1] Gus Frobel, quoted in Lloyd Tataryn, Dying for a Living (Deneau and Greenberg Publishers, 1979), 100.

Only Dramatic Reductions in Energy Use Will Save The World From Climate Catastrophe: A Prophecy
2/27/2019

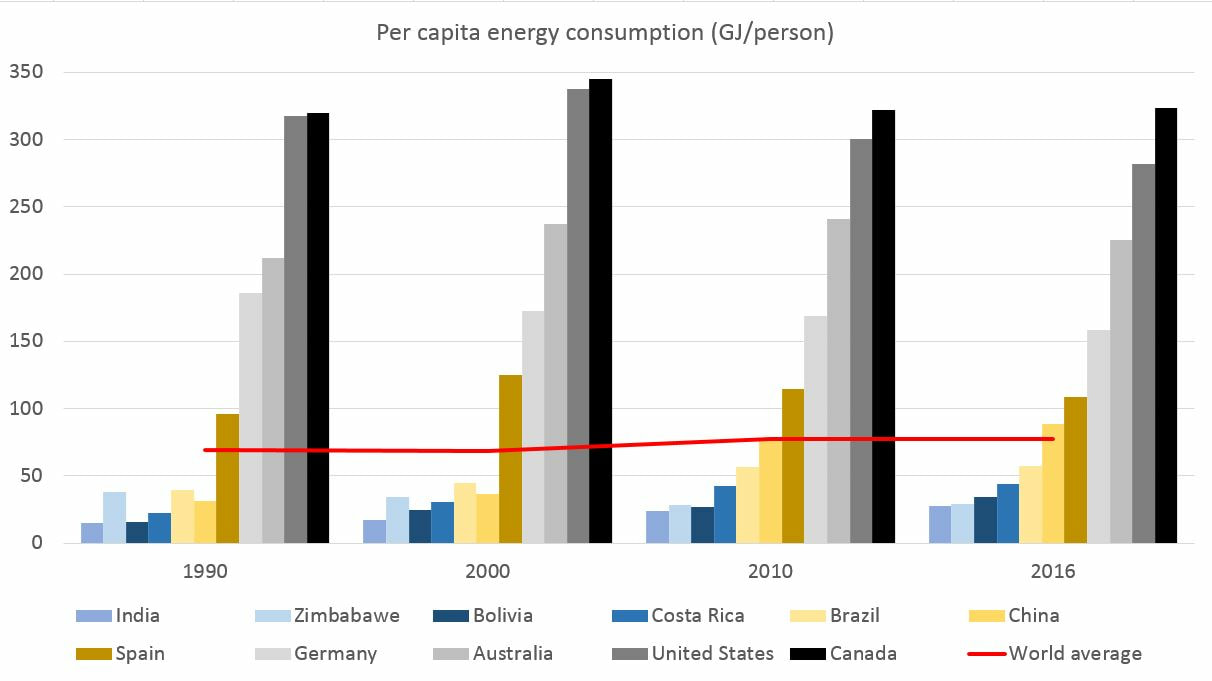

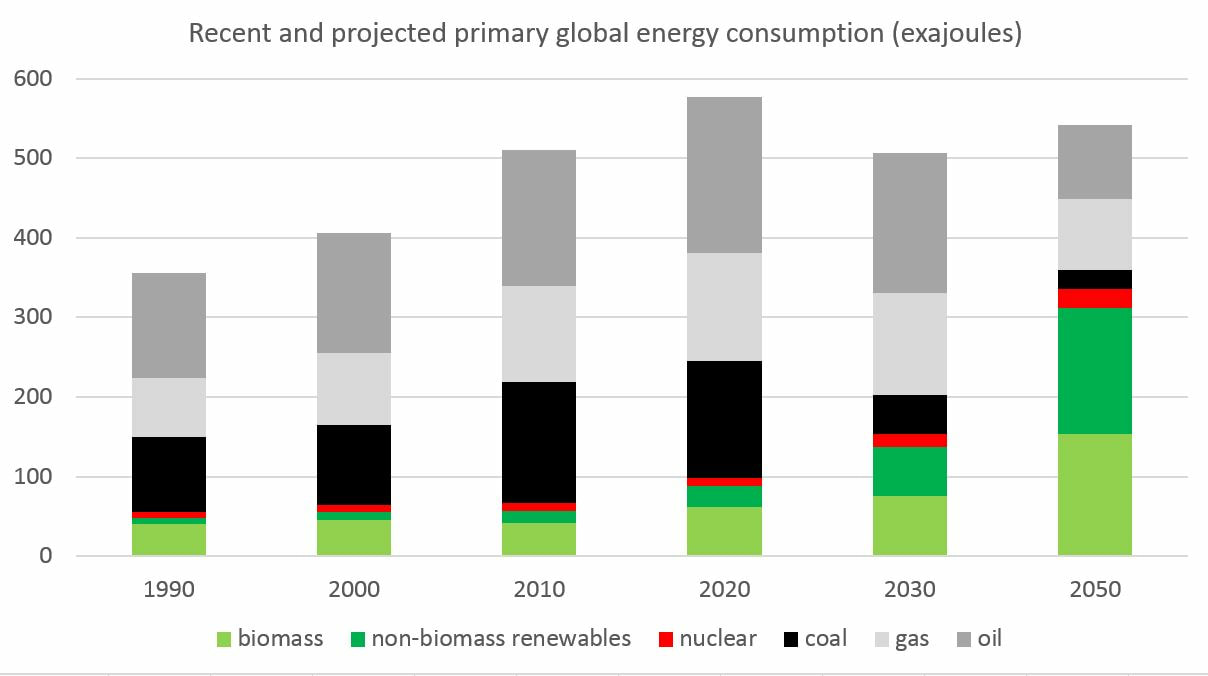

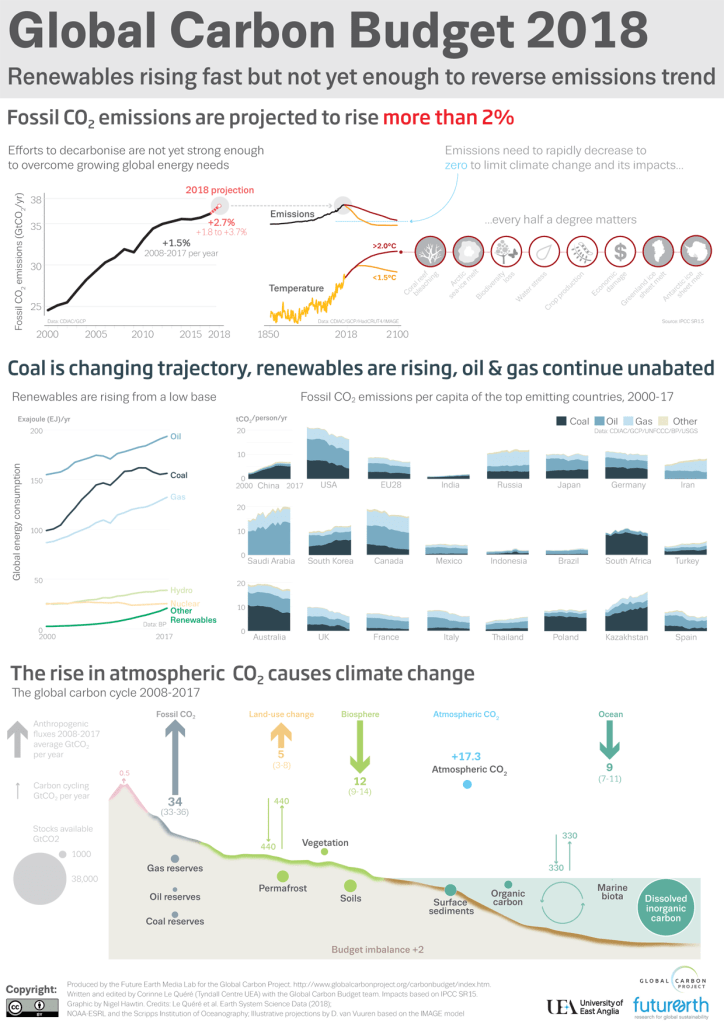

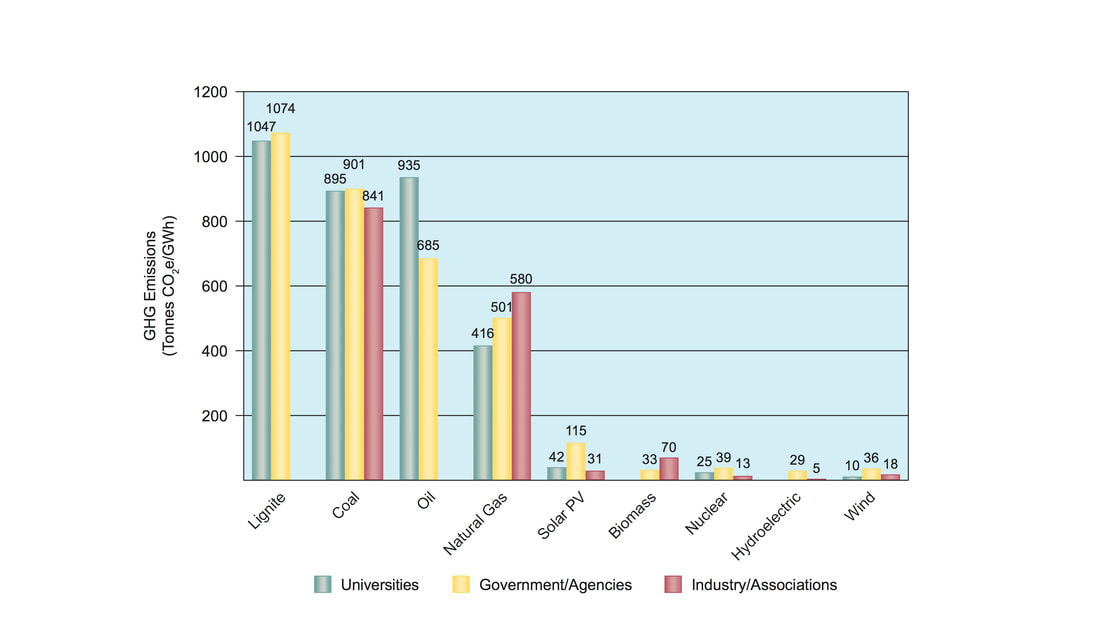

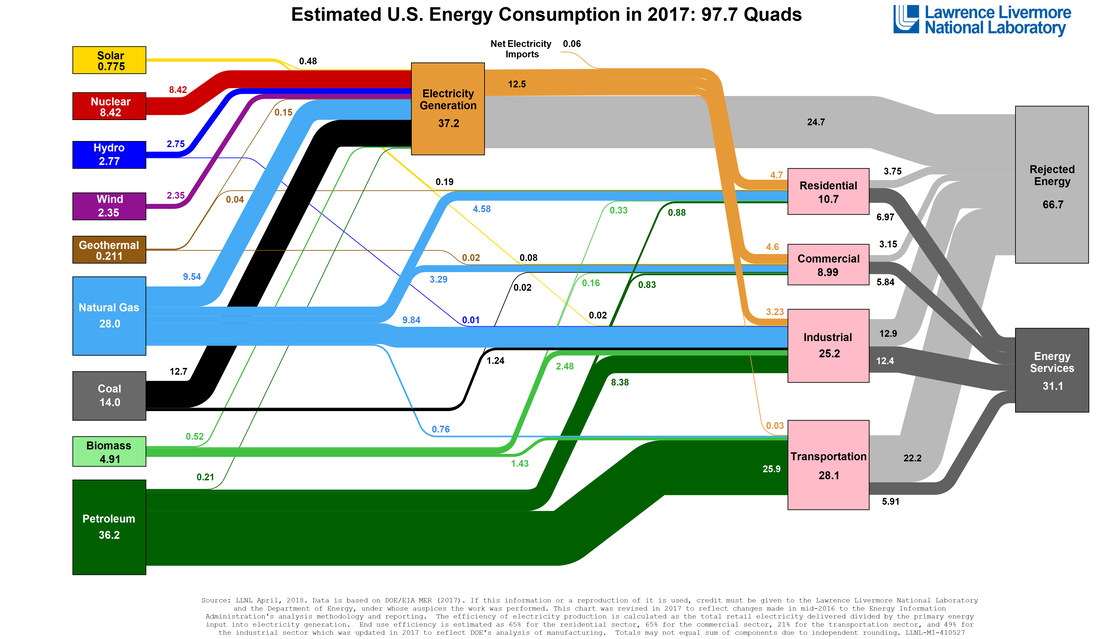

Prof. Andrew Watson, University of Saskatchewan. This is the third post in a collaborative series titled “Environmental Historians Debate: Can Nuclear Power Solve Climate Change?” hosted by the Network in Canadian History & Environment, the Climate History Network, and ActiveHistory.ca. There is no longer any debate. Humanity sits at the precipice of catastrophic climate change caused by anthropogenic greenhouse gas (GHG) emissions. Recent reports from the Intergovernmental Panel on Climate Change (IPCC)[1] and the U.S. Global Change Research Program (USGCRP)[2] provide clear assessments: to limit global warming to 1.5°C above pre-industrial levels, thereby avoiding the most harmful consequences, governments, communities, and individuals around the world must take immediate steps to decarbonize their societies and economies. Change is coming regardless of how we proceed. Doing nothing guarantees large-scale resource conflicts, climate refugee migrations from the global south to the global north, and mass starvation. Dealing with the problem in the future will be far more difficult, not to mention expensive, than making important changes immediately. The only question is what changes are necessary to address the scale of the problem facing humanity. Do we pursue strategies that allow us to maintain our current standard of living, consuming comparable amounts of (zero-carbon) energy? Or do we accept fundamental changes to humanity’s relationship to energy? In his new book, The Wizard and the Prophet: Two Remarkable Scientists and Their Conflicting Visions of the Future of Our Planet, Charles C. Mann uses the life, work, and ideologies of Norman Borlaug (the Wizard) and William Vogt (the Prophet) to offer two typologies of twentieth-century environmental science and thought.
Borlaug represents the school of thought that believed technology could solve all of humanity’s environmental problems, which Mann refers to as “techno-optimism.” Vogt, by contrast, represents a fundamentally different attitude that saw only a drastic reduction in consumption as the key to solving environmental problems, which Mann (borrowing from demographer Betsy Hartmann) refers to as “apocalyptic environmentalism.”[3] In the industrialized countries of the world, the techno-optimist approach enjoys the greatest support. Among those who think “technology will save us,” decarbonizing the economy means replacing fossil fuel energy with “clean” energy (i.e., energy that does not emit GHGs). Hydropower has nearly reached its global potential and simply cannot replace fossil fuel energy. Solar, wind, and to some extent geothermal are rapidly growing technological options for replacing fossil fuel energy. And as this series reveals, some debate exists over whether nuclear can ever play a meaningful role in a twenty-first-century energy transition. The quest for new clean energy pathways aims to rid the developed world of the blame for causing climate change without the need to fundamentally change the way of life responsible for it. In short, those advocating for clean energy hope to cleanse their moral culpability as much as the planet’s atmosphere. This is the crux of the climate change crisis and the challenge of how to respond to it. It is not a technical problem. It is a moral and ethical problem – the biggest the world has ever faced.
The USGCRP’s Fourth National Climate Assessment warns that the risks from climate change “are often highest for those that are already vulnerable, including low-income communities, some communities of color, children, and the elderly.”[4] Similarly, the IPCC’s Global Warming of 1.5°C report insists that “the worst impacts tend to fall on those least responsible for the problem, within states, between states, and between generations.”[5] Furthermore, the USGCRP points out, “Marginalized populations may also be affected disproportionately by actions to address the underlying causes and impacts of climate change, if they are not implemented under policies that consider existing inequalities.” Indeed, the IPCC reports, “the worst-affected states, groups and individuals are not always well-represented” in the process of developing climate change strategies. The climate crisis has always been about the vulnerabilities created by energy inequalities. Decarbonizing the industrialized and industrializing parts of the world could avoid making things worse for the most marginalized segments of the global population, but it would not necessarily make anything better for them either. At the same time, decarbonization strategies imagine an energy future in which people, communities, and countries with a high standard of living are under no obligation to make any significant sacrifices to their large energy footprints. Over the last thirty years, industrialized countries such as Germany, the United States, and Canada have consistently consumed considerably more energy per capita than non-industrialized or industrializing countries (Figure 1). In 2016, industrialized countries in North America and Western Europe consumed three to four times as much energy per capita as the global average, while non-industrialized countries consumed considerably less than the average.
Most of the research that has modelled 1.5ºC-consistent energy pathways for the twenty-first century assumes that decarbonisation means continuing to use the same amount of energy, or only slightly less (Figure 2).[6] Most of these models project that solar and wind energy will comprise a major share of the energy budget by 2050 (nuclear, it should be noted, will not). Curiously, the models also project a major role for biofuels. Most alarmingly, however, most models assume major use of carbon capture and storage technology, both to capture emissions from biofuels and to actively pull carbon out of the atmosphere (known as carbon dioxide removal, or negative emissions). The important point here, though, is not the technological composition of these energy pathways, but the continuity of energy consumption over the course of the twenty-first century. In case it is not already clear, I do not think technology will save us. Solar and wind energy technology has the potential to provide an abundance of energy, but it won’t be enough to replace the amount of fossil fuel energy we currently consume, and it certainly won’t happen quickly enough to avoid warming greater than 1.5ºC. Biofuels entail a land cost that in many cases involves competition with agriculture and places potentially unbearable pressure on fresh water resources. Carbon capture and storage assumes that pumping enormous amounts of carbon underground won’t have unintended and unacceptable consequences. Nuclear energy might provide a share of the global energy budget, but according to many models, it will always be a relatively small share. Techno-optimism is a desperate hope that the problem can be solved without fundamental changes to high-energy standards of living. The current 1.5ºC-consistent energy pathways include no meaningful changes in the amount of overall energy consumed in industrialized and industrializing countries.
The studies that do incorporate “lifestyle changes” into their models feature efficiencies, such as taking shorter showers, adjusting indoor air temperature, or reducing usage of luxury appliances (e.g., clothes dryers), none of which presents a fundamental challenge to a western standard of living.[7] Decarbonization models that replace fossil fuel energy with clean energy reflect a desire to avoid addressing the role of energy inequities in the climate change crisis. Climate change is a problem of global inequality, not just carbon emissions. Those of us living in the developed and developing countries of the world would like to pretend that the problem can be solved with technology, and that we would not then need to change our lives all that much. In a decarbonized society, the wizards tell us, our economy could continue to operate with clean energy. But it can’t. Any ideas to the contrary are simply excuses for perpetuating a world of incredible energy inequality. We need to heed the prophets and use dramatically less energy. We need to accept extreme changes to our economy, our standard of living, and our culture.

Andrew Watson is an assistant professor of environmental history at the University of Saskatchewan.

[1] IPCC, 2018: Global warming of 1.5°C. An IPCC Special Report on the impacts of global warming of 1.5°C above pre-industrial levels and related global greenhouse gas emission pathways, in the context of strengthening the global response to the threat of climate change, sustainable development, and efforts to eradicate poverty [V. Masson-Delmotte, P. Zhai, H. O. Pörtner, D. Roberts, J. Skea, P.R. Shukla, A. Pirani, W. Moufouma-Okia, C. Péan, R. Pidcock, S. Connors, J. B. R. Matthews, Y. Chen, X. Zhou, M. I. Gomis, E. Lonnoy, T. Maycock, M. Tignor, T. Waterfield (eds.)]. In Press.

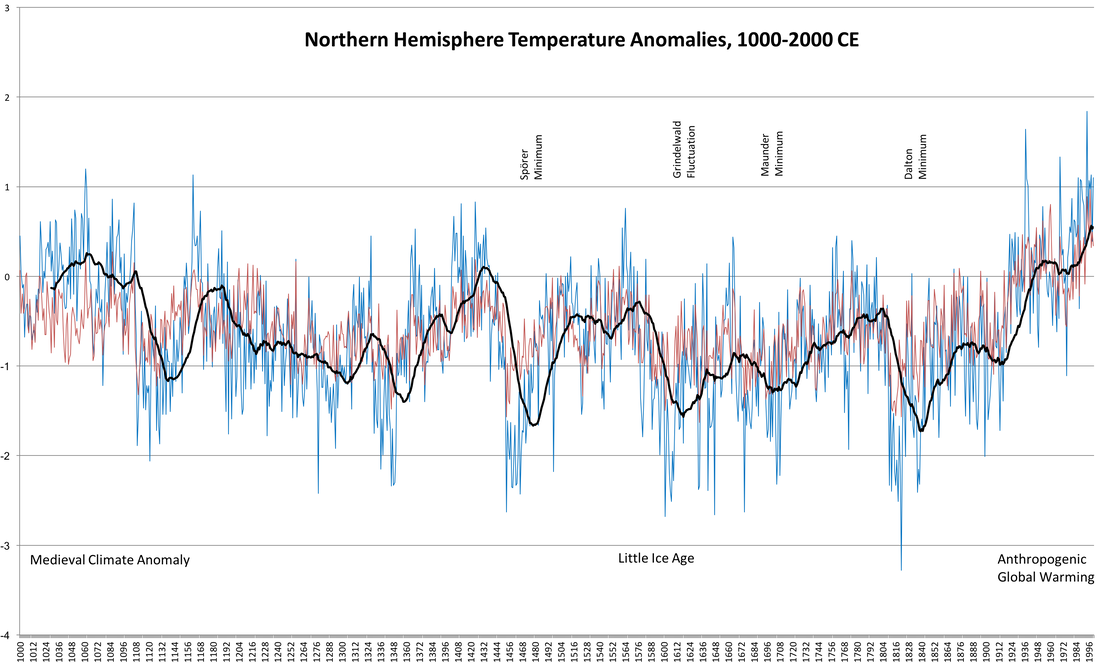

[2] USGCRP, 2018: Impacts, Risks, and Adaptation in the United States: Fourth National Climate Assessment, Volume II [Reidmiller, D.R., C.W. Avery, D.R. Easterling, K.E. Kunkel, K.L.M. Lewis, T.K. Maycock, and B.C. Stewart (eds.)]. U.S. Global Change Research Program, Washington, DC, USA. doi: 10.7930/NCA4.2018.

[3] Charles C. Mann, The Wizard and the Prophet: Two Remarkable Scientists and Their Conflicting Visions of the Future of Our Planet (Picador, 2018), 5-6.

[4] USGCRP, Fourth National Climate Assessment, Volume II, Chapter 1: Overview.

[5] IPCC, Global warming of 1.5°C, Chapter 1.

[6] IPCC, Global warming of 1.5°C; Detlef P. van Vuuren, et al., “Alternative pathways to the 1.5°C target reduce the need for negative emission technologies,” Nature Climate Change, Vol. 8 (May 2018): 391-397; Joeri Rogelj, et al., “Scenarios towards limiting global mean temperature increase below 1.5°C,” Nature Climate Change, Vol. 8 (April 2018): 325-332.

[7] Mariësse A.E. van Sluisveld, et al., “Exploring the implications of lifestyle change in 2°C mitigation scenarios using the IMAGE integrated assessment model,” Technological Forecasting and Social Change, Vol. 102 (2016): 309-319.

Prof. Dagomar Degroot, Georgetown University.

Roughly 11,000 years ago, rising sea levels submerged Beringia, the vast land bridge that once connected the Old and New Worlds. Vikings and perhaps Polynesians briefly established a foothold in the Americas, but it was the voyage of Columbus in 1492 that firmly restored the ancient link between the world’s hemispheres. Plants, animals, and pathogens – the microscopic agents of disease – never before seen in the Americas now arrived in the very heart of the western hemisphere. It is commonly said that few organisms spread more quickly, or with more horrific consequences, than the microbes responsible for measles and smallpox. Since the original inhabitants of the Americas had never encountered them before, millions died.
The great environmental historian Alfred Crosby first popularized these ideas in 1972. It took over thirty years before a climatologist, William Ruddiman, added a disturbing new wrinkle. What if so many people died so quickly across the Americas that it changed Earth’s climate? Abandoned fields and woodlands, once carefully cultivated, must have been overrun by wild plants that would have drawn huge amounts of carbon dioxide out of the atmosphere. Perhaps that was the cause of a sixteenth-century drop in atmospheric carbon dioxide, which scientists had earlier uncovered by sampling ancient bubbles in polar ice sheets. By weakening the greenhouse effect, the drop might have exacerbated cooling already underway during the “Grindelwald Fluctuation”: an especially frigid stretch of a much older cold period called the “Little Ice Age.” Last month, an extraordinary article by a team of scholars from University College London captured international headlines by uncovering new evidence for these apparent relationships. The authors calculate that nearly 56 million hectares previously used for food production must have been abandoned in just the century after 1492, when they estimate that epidemics killed 90% of the roughly 60 million people indigenous to the Americas. They conclude that roughly half of the simultaneous dip in atmospheric carbon dioxide cannot be accounted for unless wild plants grew rapidly across these vast territories. On social media, the article went viral at a time when the Trump Administration’s wanton disregard for the lives of Latin American refugees seems matched only by its contempt for climate science. For many, the links between colonial violence and climate change never appeared clearer – or more firmly rooted in the history of white supremacy. Some may wonder whether it is wise to quibble with science that offers urgently needed perspectives on very real, and very alarming, relationships in our present.
Yet bold claims naturally invite questions and criticism, and so it is with this new article. Historians – who were not among the co-authors – may point out that the article relies on dated scholarship to calculate the size of pre-contact populations in the Americas, and the causes for their decline. Newer work has in fact found little evidence for pan-American pandemics before the seventeenth century. More importantly, the article’s headline-grabbing conclusions depend on a chain of speculative relationships, each with enough uncertainties to call the entire chain into question. For example, some cores drilled from Antarctic ice sheets appear to reveal a gradual decline in atmospheric carbon dioxide during the sixteenth century, while others apparently show an abrupt fall around 1590. Part of the reason may have to do with local atmospheric variations. Yet the difference cannot be dismissed, since it is hard to imagine how gradual depopulation could have led to an abrupt fall in 1590. To take another example, the article leans on computer models and datasets that estimate the historical expansion of cropland and pasture. Models cited in the article suggest that the area under human cultivation steadily increased from 1500 until 1700: precisely the period when its decline supposedly cooled the Earth. An increase would make sense, considering that the world’s human population likely rose by as many as 100 million people over the course of the sixteenth century. Meanwhile, merchants and governments across Eurasia depleted woodlands to power new industries and arm growing militaries.

Changes in the extent and distribution of historical cropland, 3000 BCE to the present, according to the HYDE 3.1 database of human-induced global land use change.

In any case, models and datasets may generate tidy numbers and figures, but they are by nature inexact tools for an era when few kept careful or reliable track of cultivated land.
Models may differ enormously in their simulations of human land use; one, for example, shows 140 million more hectares of cropland than another for the year 1700. Remember that, according to the new article, the abandonment of just 56 million hectares in the Americas supposedly cooled the planet just a century earlier! While we can make educated guesses about land use changes across Asia or Europe, we know next to nothing about what might have happened in sixteenth-century Africa. Demographic changes across that vast and diverse continent may well have either amplified or diminished the climatic impact of depopulation in the Americas. And even in the Americas, we cannot easily model the relationship between human populations and land use. Surging populations of animals imported by Europeans, for example, may have chewed through enough plants to hold off advancing forests. Moreover, the early death toll in the Americas was often especially high in communities at high elevations, where the tropical trees that absorb the most carbon could not grow. In short, we cannot firmly establish that depopulation in the Americas cooled the Earth. For that reason, it is missing the point to think of the new article as either “wrong” or “right”; rather, we should view it as a particularly interesting contribution to an ongoing academic conversation. Journalists in particular should also avoid exaggerating the article’s conclusions. The co-authors never claim, for example, that depopulation “caused” the Little Ice Age, as some headlines announced, nor even the Grindelwald Fluctuation. At most, it worsened cooling already underway during that especially frigid stretch of the Little Ice Age. For all the enduring questions it provokes, the new article draws welcome attention to the enormity of what it calls the “Great Dying” that accompanied European colonization, which was really more of a “Great Killing” given the deliberate role that many colonizers played in the disaster.
It also highlights the momentous environmental changes that accompanied the European conquest. The so-called “Age of Exploration” linked not only the Americas but many previously isolated lands to the Old World, in complex ways that nevertheless reshaped entire continents to look more like Europe. We are still reckoning with and contributing to the resulting, massive decline in plant and animal biomass and diversity. Not for nothing do some date the “Anthropocene,” the proposed geological epoch distinguished by human dominion over the natural world, to the sixteenth century. All of these issues also shed much-needed light on the Little Ice Age. Whatever its cause, we now know that climatic cooling had profound consequences for contemporary societies. Cooling and associated changes in atmospheric and oceanic circulation provoked harvest failures that all too often resulted in famines. In community after community, the malnourished repeatedly fell victim to outbreaks of epidemic disease, and mounting misery led many to take up arms against contemporary governments. Some communities and societies were resilient, even adaptive in the face of these calamities, but often partly by taking advantage of the less fortunate. Whether or not the New World genocide led to cooling, the sixteenth and seventeenth centuries offer plenty of warnings for our time.

My thanks to Georgetown environmental historians John McNeill and Timothy Newfield for their help with this article, to paleoclimatologist Jürg Luterbacher for answering my questions about ice cores, and to colleagues who responded to my initial reflections on social media.

Works Cited: